LDraw Parts Library 2026-01 - Packaged for Linux

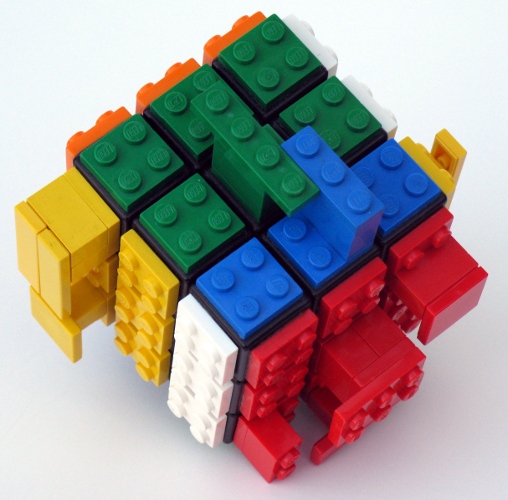

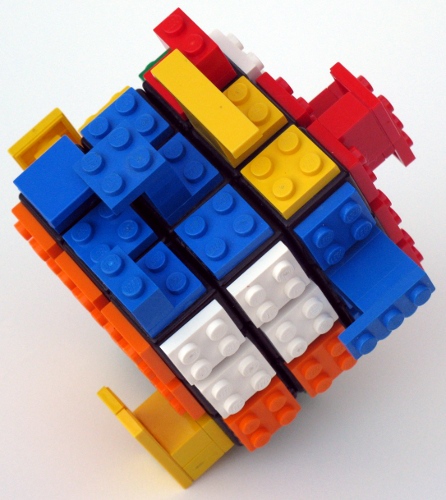

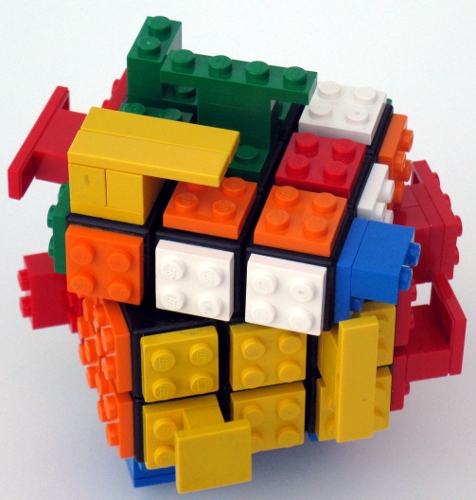

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2026-01 parts library for Fedora 43 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202601-ec1.fc43.src.rpm

ldraw_parts-202601-ec1.fc43.noarch.rpm

ldraw_parts-creativecommons-202601-ec1.fc43.noarch.rpm

ldraw_parts-models-202601-ec1.fc43.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-12 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-12 parts library for Fedora 43 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202512-ec1.fc43.src.rpm

ldraw_parts-202512-ec1.fc43.noarch.rpm

ldraw_parts-creativecommons-202512-ec1.fc43.noarch.rpm

ldraw_parts-models-202512-ec1.fc43.noarch.rpm

See also LDrawPartsLibrary.

LeoCAD 25.09 - Packaged for Linux

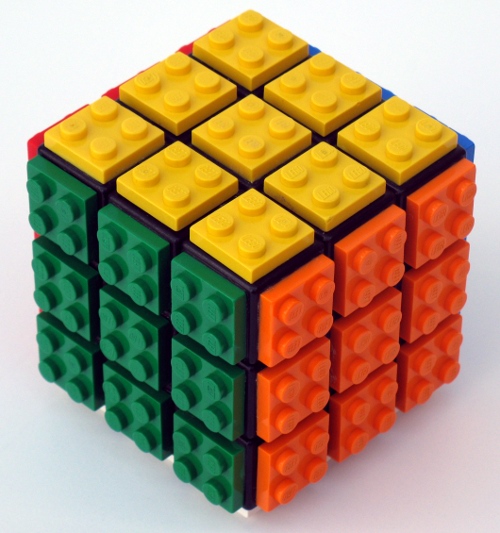

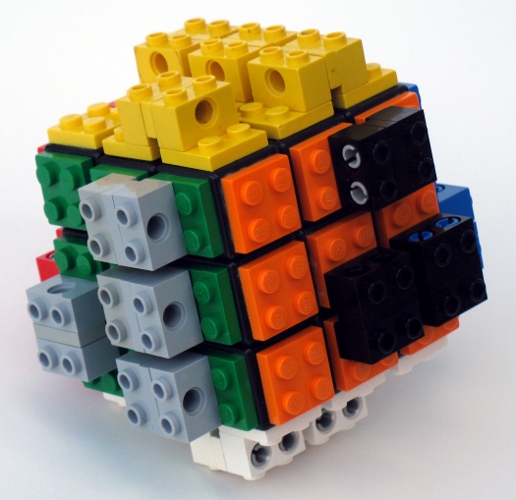

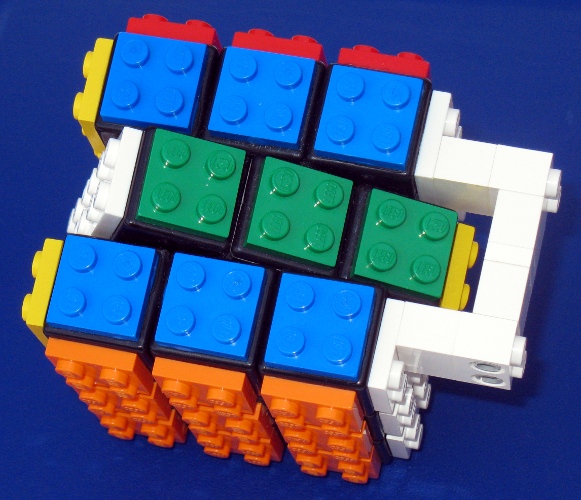

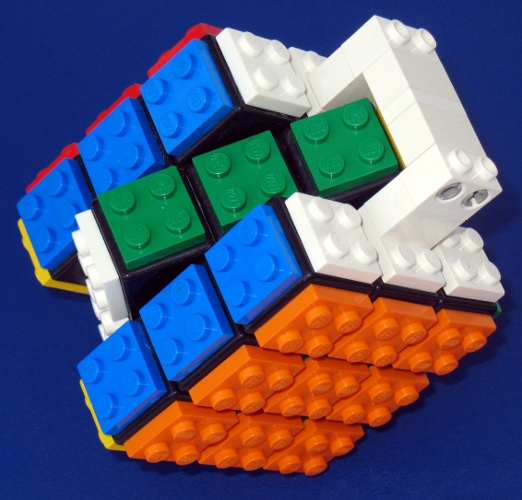

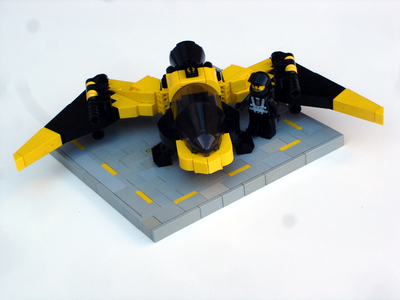

LeoCAD is a CAD application for building digital models with Lego-compatible parts drawn from the LDraw parts library.

I packaged (as an rpm) the 25.09 release of LeoCAD for Fedora 43. This package requires the LDraw parts library package.

Install the binary rpm. The source rpm contains the files to allow you to rebuild the package for another distribution.

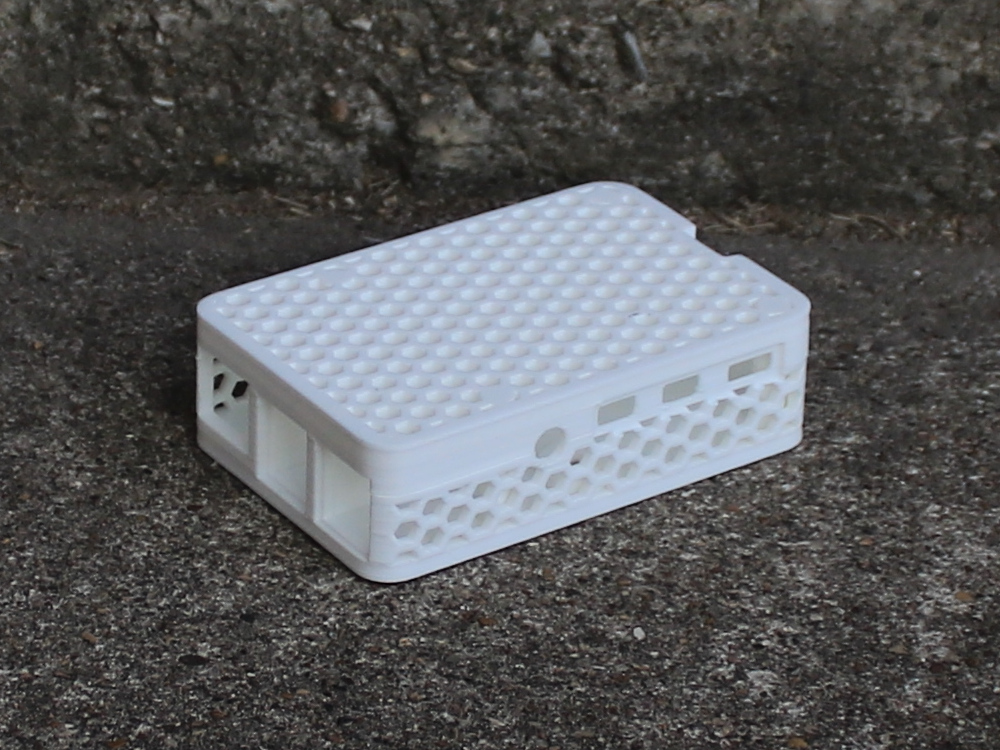

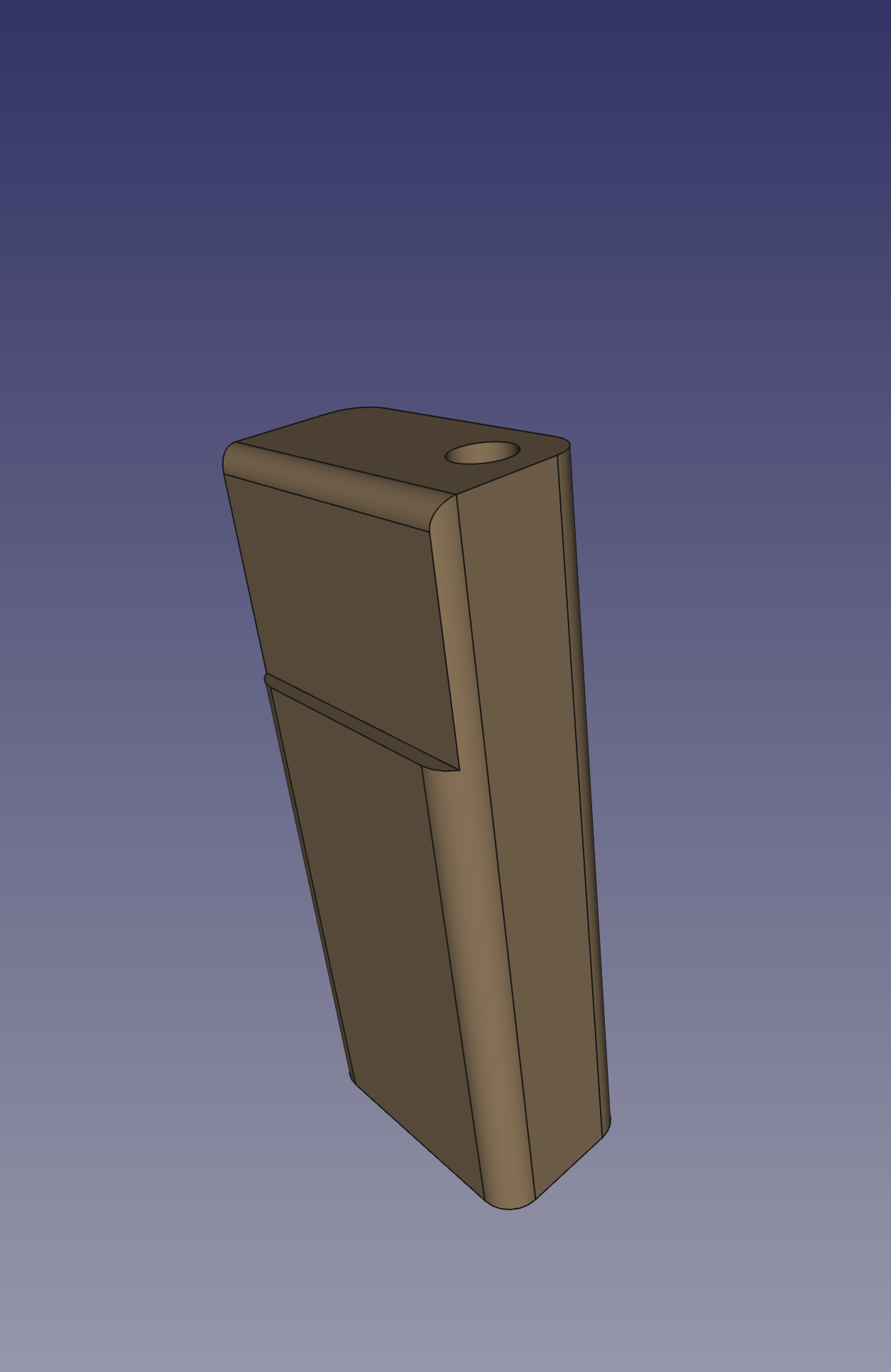

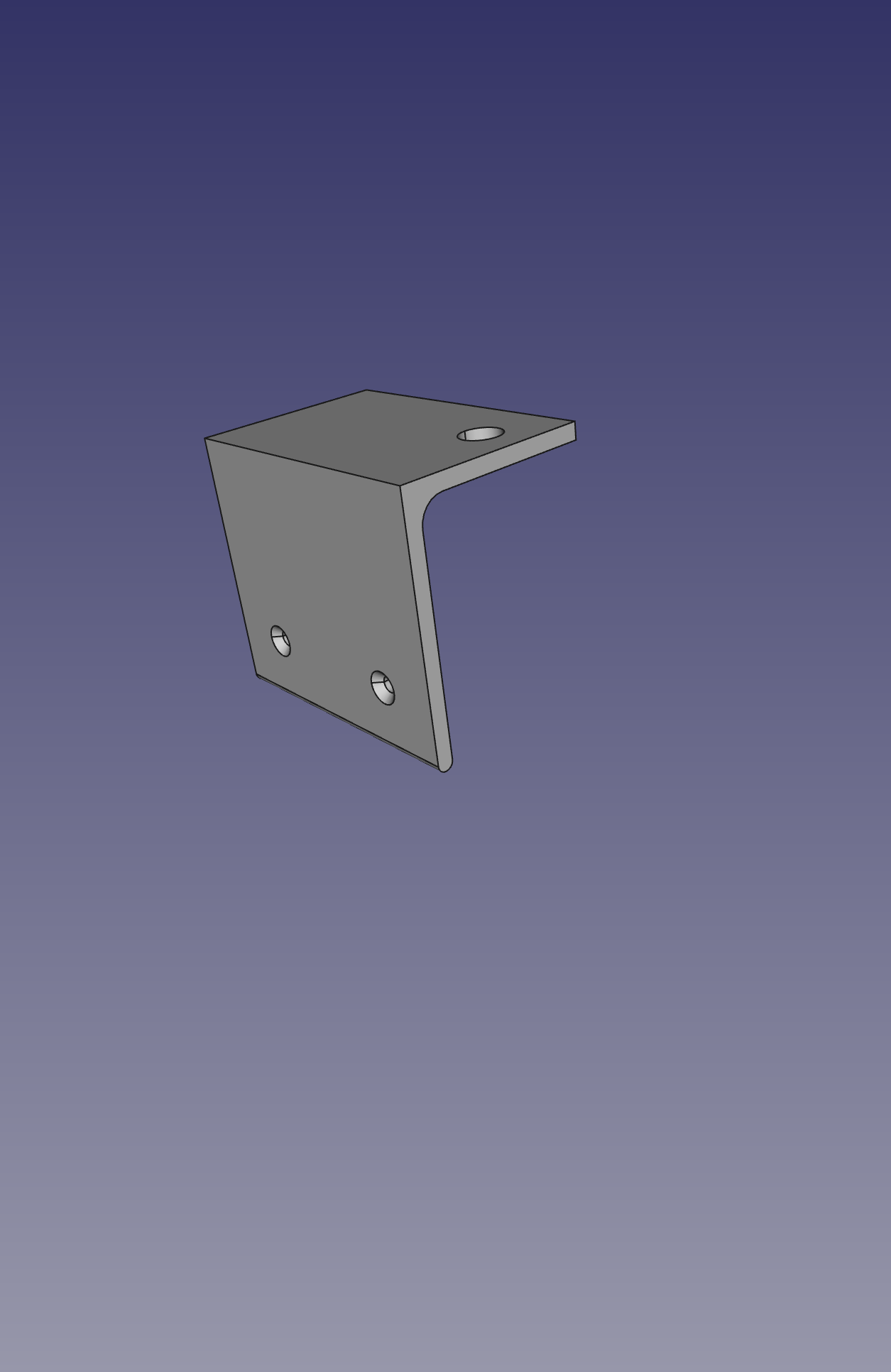

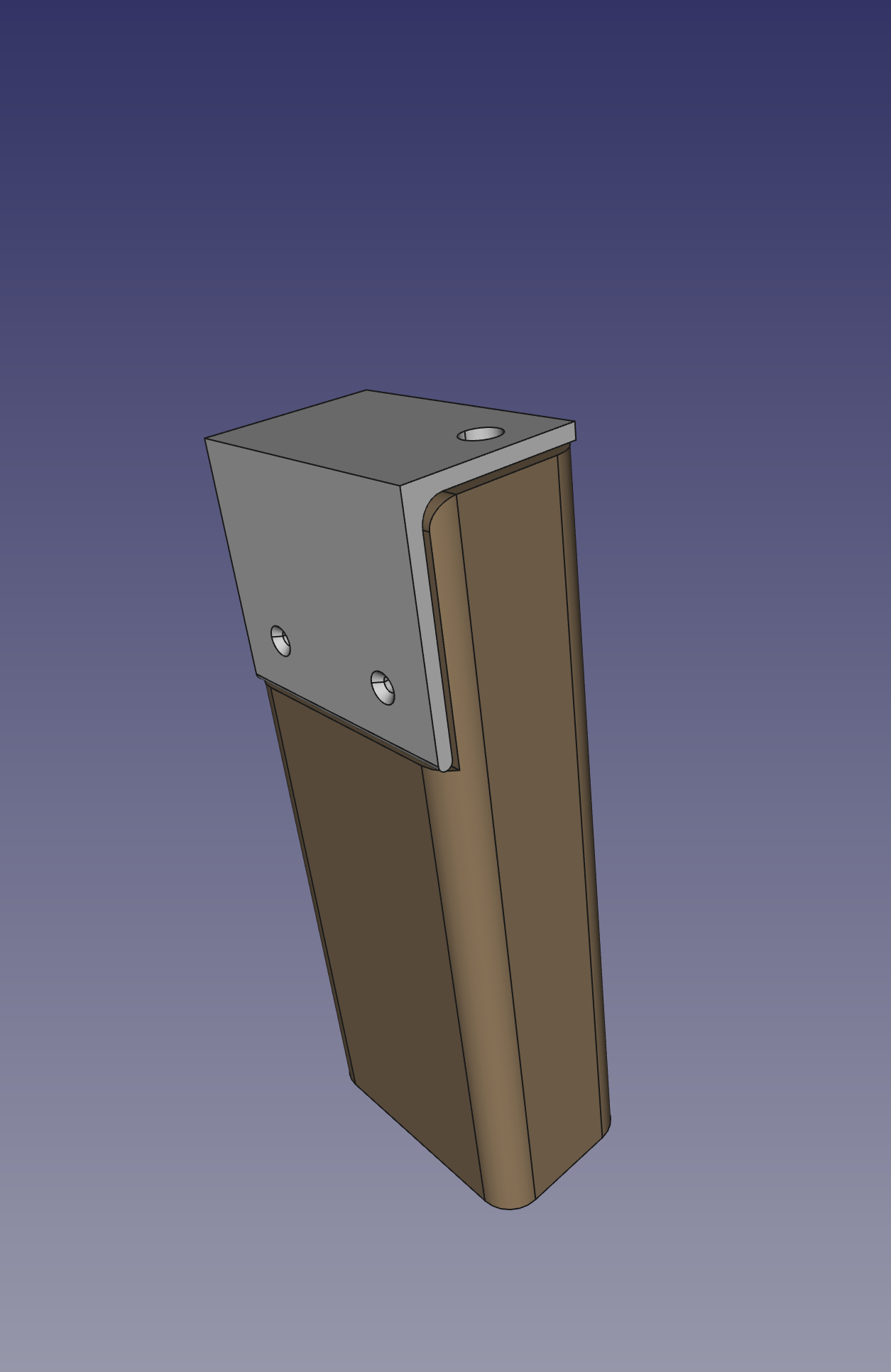

Yet Another Raspberry-Pi case in OpenSCAD

I needed a case for a Raspberry Pi 4B for a project, looked at those available in the usual places, and didn't find something that quite met my needs. I wanted a case which I could bolt to a sheet of plywood, so I wanted holes for a pair of 8-32 heat-set threaded inserts in the lid. I also wanted it to be well-ventilated to avoid overheating.

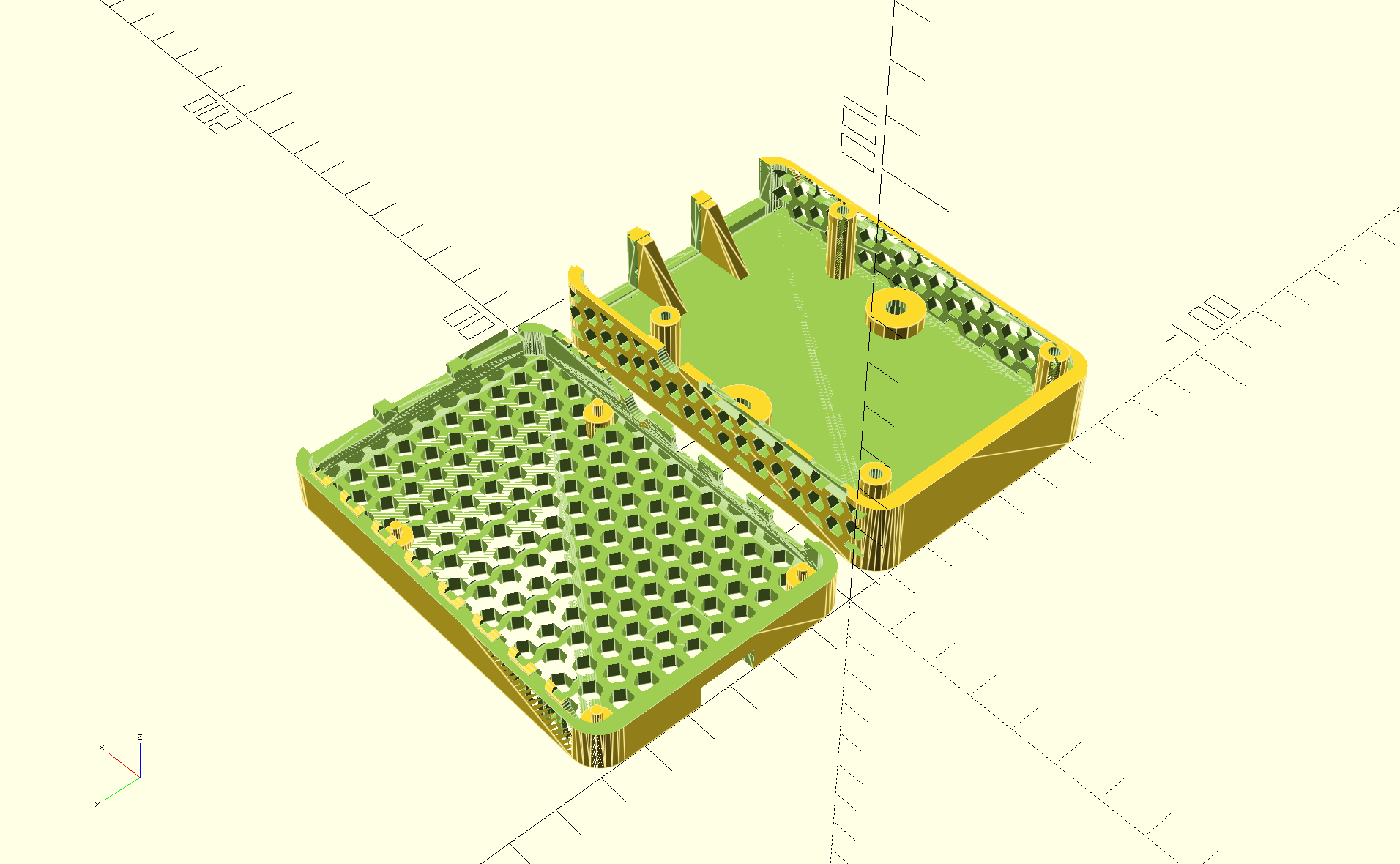

So I created one (from scratch) in OpenSCAD:

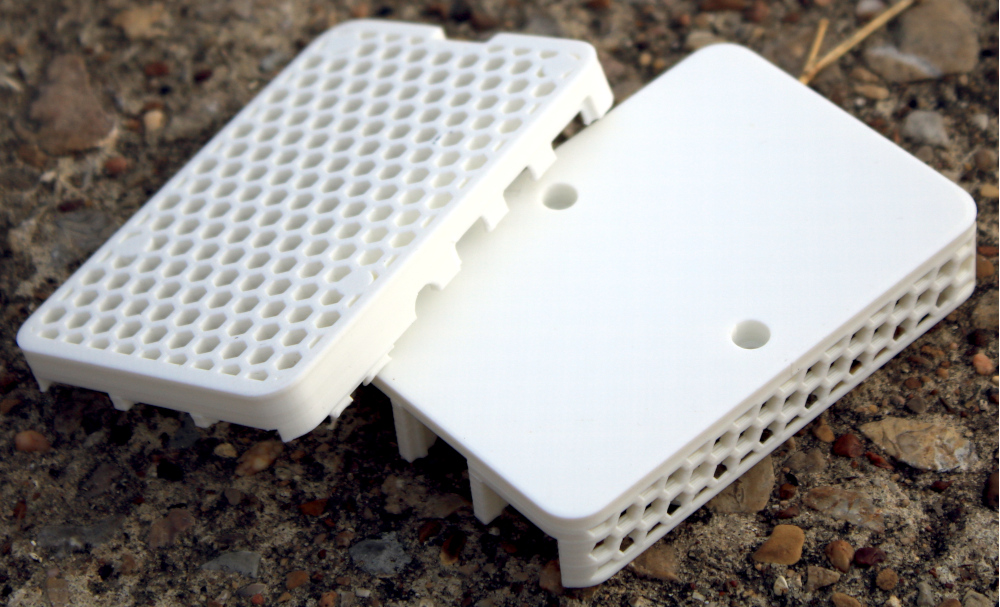

I think it turned out quite well:

And the two parts of the case snap together securely:

For those who would want to make use of it (CC BY-SA), the OpenSCAD and STL files are available in this archive. If you do make use of it, I'd love to hear from you.

(Photography by Joshua Carter.)

LDraw Parts Library 2025-09 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-09 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202509-ec1.fc42.src.rpm

ldraw_parts-202509-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202509-ec1.fc42.noarch.rpm

ldraw_parts-models-202509-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-08 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-08 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202508-ec1.fc42.src.rpm

ldraw_parts-202508-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202508-ec1.fc42.noarch.rpm

ldraw_parts-models-202508-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-07 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-07 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202507-ec1.fc42.src.rpm

ldraw_parts-202507-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202507-ec1.fc42.noarch.rpm

ldraw_parts-models-202507-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-06 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-06 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202506-ec1.fc42.src.rpm

ldraw_parts-202506-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202506-ec1.fc42.noarch.rpm

ldraw_parts-models-202506-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-05 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-05 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202505-ec1.fc41.src.rpm

ldraw_parts-202505-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202505-ec1.fc41.noarch.rpm

ldraw_parts-models-202505-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-04 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-04 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202504-ec1.fc41.src.rpm

ldraw_parts-202504-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202504-ec1.fc41.noarch.rpm

ldraw_parts-models-202504-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-03 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-03 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202503-ec1.fc41.src.rpm

ldraw_parts-202503-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202503-ec1.fc41.noarch.rpm

ldraw_parts-models-202503-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-02 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-02 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202502-ec1.fc41.src.rpm

ldraw_parts-202502-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202502-ec1.fc41.noarch.rpm

ldraw_parts-models-202502-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-01 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-01 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202501-ec1.fc41.src.rpm

ldraw_parts-202501-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202501-ec1.fc41.noarch.rpm

ldraw_parts-models-202501-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.

Generality in solutions; an example in HttpFile

A few (ok, ok, over a dozen) years ago, I came across a question on Stack Overflow from someone who wanted to be able to unzip part of a ZIP file that was hosted on the web without having to download the entire file.

He had not found a Python library for doing this, so he modified the ZipFile library to create an HTTPZipFile class which knew about both ZIP files and HTTP requests. I posted a different approach. Over time, Stack Overflow changed its goals, and now that question and answer have been closed and marked off-topic. I believe there's value in the question and answer, and I think a fuller treatment of the answer would be fruitful for others to learn from.

Seams and Layers

The idea is to think about the interfaces: the seams or layers in the code.

The ZipFile class expects a file object. The file in this case lives on a website, but we could create a file-like object that knows how to retrieve parts of a file over HTTP using Range GET requests, and behaves like a file in terms of being seekable.

Let's walk through this pedagogically:

We want to create a file-like object whose constructor takes a URL.

So let's start with our demo script:

#!/usr/bin/env python3

from httpfile import HttpFile

# Try it
from zipfile import ZipFile

URL = "https://www.python.org/ftp/python/3.12.0/python-3.12.0-embed-amd64.zip"

my_zip = ZipFile(HttpFile(URL))
print("\n".join(my_zip.namelist()))

And create httpfile.py with just the constructor as a starting point:

#!/usr/bin/env python3

class HttpFile:
    def __init__(self, url):
        self.url = url

Trying that, we get:

AttributeError: 'HttpFile' object has no attribute 'seek'

So let's implement seek:

#!/usr/bin/env python3

import requests

class HttpFile:
    def __init__(self, url):
        self.url = url
        self.offset = 0
        self._size = -1  # Not yet known; fetched on first use

    def size(self):
        if self._size < 0:
            # stream=True means the body is not downloaded up front; the
            # with block closes the connection once we have the headers.
            with requests.get(self.url, stream=True) as response:
                response.raise_for_status()
                if response.status_code != 200:
                    raise OSError(f"Bad response of {response.status_code}")
                self._size = int(response.headers["Content-length"], 10)
        return self._size

    def seek(self, offset, whence=0):
        if whence == 0:  # Relative to the start of the file
            self.offset = offset
        elif whence == 1:  # Relative to the current position
            self.offset += offset
        elif whence == 2:  # Relative to the end of the file
            self.offset = self.size() + offset
        else:
            raise ValueError(f"whence value {whence} unsupported")
        return self.offset

That gets us to the next error:

AttributeError: 'HttpFile' object has no attribute 'tell'

So we implement tell():

    def tell(self):
        return self.offset

Making progress, we reach the next error:

AttributeError: 'HttpFile' object has no attribute 'read'

So we implement read:

    def read(self, count=-1):
        size = self.size()
        if count < 0:
            end = size - 1
        else:
            # Like a regular file, return fewer bytes if the read runs past EOF
            end = min(self.offset + count, size) - 1
        if end < self.offset:
            # Nothing to read (count is 0, or we are already at EOF)
            return b""
        headers = {
            'Range': "bytes=%s-%s" % (self.offset, end),
        }
        response = requests.get(self.url, headers=headers, stream=True)
        if response.status_code != 206:
            raise OSError(f"Bad response of {response.status_code}")
        # Check that the server honored the range we asked for. The response
        # headers tell us; when the server accepts the range request, we get
        #   Accept-Ranges: bytes
        #   Content-Length: 22
        #   Content-Range: bytes 27382-27403/27404
        # vs when it does not accept the range:
        #   Content-Length: 27404
        content_range = response.headers.get('Content-Range')
        if not content_range:
            raise OSError("Server does not support Range")
        if content_range != f"bytes {self.offset}-{end}/{size}":
            raise OSError(f"Server returned unexpected range {content_range}")
        # End of paranoia checks
        expected = end - self.offset + 1
        chunk = len(response.content)
        if chunk != expected:
            raise OSError(f"Asked for {expected} bytes but got {chunk}")
        self.offset += chunk
        return response.content

We have a lot going on here; particularly around handling error checking and ensuring the responses match what we expect. We want to fail loudly if we get something unexpected rather than attempt to forge ahead and fail in an obscure way later on.
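The Content-Range check above is an exact string comparison. As a minimal sketch of a slightly more tolerant alternative, the helper below parses the header into numbers so each field can be checked on its own. The name parse_content_range is hypothetical; it is not part of the code in this post or of the requests library.

```python
import re

# Hypothetical helper, not part of HttpFile above: parse a header such as
# "bytes 27382-27403/27404" into (first, last, total).
_CONTENT_RANGE = re.compile(r"^bytes (\d+)-(\d+)/(\d+|\*)$")

def parse_content_range(value):
    match = _CONTENT_RANGE.match(value.strip())
    if not match:
        raise OSError(f"Unparseable Content-Range header: {value!r}")
    first, last, total = match.groups()
    # The HTTP specification allows "*" when the total length is unknown.
    return int(first), int(last), None if total == "*" else int(total)

first, last, total = parse_content_range("bytes 27382-27403/27404")
# The range is inclusive at both ends, so its length is last - first + 1.
print(first, last, total, last - first + 1)
```

With the example header from the comment in read(), the computed length of 22 agrees with the Content-Length the server reported.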

And now we finally reach some success, giving a listing of the filenames within the ZIP:

python.exe
pythonw.exe
python312.dll
python3.dll
vcruntime140.dll
vcruntime140_1.dll
LICENSE.txt
pyexpat.pyd
select.pyd
unicodedata.pyd
winsound.pyd
_asyncio.pyd
_bz2.pyd
_ctypes.pyd
_decimal.pyd
_elementtree.pyd
_hashlib.pyd
_lzma.pyd
_msi.pyd
_multiprocessing.pyd
_overlapped.pyd
_queue.pyd
_socket.pyd
_sqlite3.pyd
_ssl.pyd
_uuid.pyd
_wmi.pyd
_zoneinfo.pyd
libcrypto-3.dll
libffi-8.dll
libssl-3.dll
sqlite3.dll
python312.zip
python312._pth
python.cat

So let's see if we can extract part of the LICENSE.txt file from within the zip:

data = my_zip.open('LICENSE.txt')
data.seek(99)
print(data.read(239).decode('utf-8'))

That triggers a new error. (A comment posted 8 years after the initial code pointed out that this method has been needed since Python 3.7.)

AttributeError: 'HttpFile' object has no attribute 'seekable'

So a trivial implementation of that:

    def seekable(self):
        return True

and we now get the content:

Guido van Rossum at Stichting Mathematisch Centrum (CWI, see https://www.cwi.nl) in the Netherlands as a successor of a language called ABC. Guido remains Python's principal author, although it includes many contributions from others.

There are a number of ways to further improve this code for production use, but for our pedagogical purposes here, I think we can call that "good enough". (Areas of improvement from an engineering perspective include: actual unit tests, integration tests that do not rely on a remote server, filling out the file object's full interface, addressing the read-only nature of the file access, using a session to support authentication mechanisms and connection reuse, among others.)
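As one possible sketch of an integration test that does not rely on a remote server, the same four methods can be backed by bytes held in memory. RecordingFile is a hypothetical stand-in for HttpFile, not part of the code above; it also records every read so you can see how little of the archive ZipFile actually touches.

```python
import io
import zipfile

class RecordingFile:
    """Hypothetical test double: the same four methods as HttpFile, but
    backed by bytes in memory, recording each (offset, length) read."""

    def __init__(self, payload):
        self.payload = payload
        self.offset = 0
        self.reads = []

    def seek(self, offset, whence=0):
        if whence == 0:
            self.offset = offset
        elif whence == 1:
            self.offset += offset
        elif whence == 2:
            self.offset = len(self.payload) + offset
        else:
            raise ValueError(f"whence value {whence} unsupported")
        return self.offset

    def tell(self):
        return self.offset

    def seekable(self):
        return True

    def read(self, count=-1):
        end = len(self.payload) if count < 0 else self.offset + count
        data = self.payload[self.offset:end]
        self.reads.append((self.offset, len(data)))
        self.offset += len(data)
        return data

# Build a small ZIP archive in memory to stand in for the remote file.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("hello.txt", "Hello, world!\n")
    archive.writestr("big.bin", bytes(100_000))

fake = RecordingFile(buffer.getvalue())
my_zip = zipfile.ZipFile(fake)
print(my_zip.namelist())
print(my_zip.read("hello.txt"))
print(f"read {sum(n for _, n in fake.reads)} of {len(fake.payload)} bytes")
```

Listing the archive and extracting the small member should only touch the end-of-archive records and that one member, which is the behavior the Range requests in HttpFile are meant to exploit.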

This gets us an object that acts like a local file even though it's reaching over the network. The implementation requires less code than a modified HTTPZipFile would.

This same interface of a file-like object can be used for other purposes as well.

A Second Application Of The Pattern

Let's continue with our motivating use case of accessing parts of remote zip files where we don't want to download the entire file. If we don't want to download the entire file, then surely we would not want to download part of the file multiple times, right? So we would like HttpFile to cache data. But then we wind up mixing caching into the HTTP logic. Instead, we can again use the file-like-object interface to add a caching layer for a file-like object.

So we will need a class that takes a file object and a location to save the cached data. To keep this simple, let's say we point to a directory where the cache for this one file object will be stored. We will want to be able to store the file's total size, every chunk of data, and where each chunk of data maps into the file. So let's say the directory can contain a file named size with the file's size as a base 10 string with a newline, and any number of data.<offset> files with a chunk of data. This makes it easy for a human to understand how the data on disk works. I would not call it exactly "self describing", but it does lean in that general direction. (There are many, many ways we could store the data in the cache directory. Each one has its own set of trade-offs. Here I'm aiming for ease of implementation and obviousness.)
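To make that layout concrete, here is a small sketch that builds a toy cache directory by hand and reads its metadata back. The helper name describe_cache and the toy contents are assumptions for illustration only; they are not part of CachingFile.

```python
import os
import tempfile

def describe_cache(directory):
    """Hypothetical helper: return (size, [(offset, length), ...]) for a
    cache directory laid out as described above. size is None if unknown."""
    try:
        with open(os.path.join(directory, "size"), encoding="utf-8") as size_file:
            size = int(size_file.read().strip(), 10)
    except (OSError, ValueError):
        size = None
    chunks = sorted(
        (int(name[len("data."):], 10), os.path.getsize(os.path.join(directory, name)))
        for name in os.listdir(directory)
        if name.startswith("data.")
    )
    return size, chunks

# Build a toy cache for a 100-byte file with two cached chunks.
with tempfile.TemporaryDirectory() as cache_dir:
    with open(os.path.join(cache_dir, "size"), "w", encoding="utf-8") as f:
        f.write("100\n")
    with open(os.path.join(cache_dir, "data.0"), "wb") as f:
        f.write(b"x" * 10)
    with open(os.path.join(cache_dir, "data.78"), "wb") as f:
        f.write(b"y" * 22)
    result = describe_cache(cache_dir)

print(result)  # (100, [(0, 10), (78, 22)])
```

The chunk's length is not stored anywhere explicitly; it is simply the size of the data.<offset> file on disk.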

Since the file's data will be stored in segments, we will want to be able to think in terms of segments which can be ordered, check if two segments overlap, or if one segment contains another. So let's create a class to provide that abstraction:

import functools

@functools.total_ordering
class Segment(object):
    def __init__(self, offset, length):
        self.offset = offset
        self.length = length

    def overlaps(self, other):
        return (
            self.offset < other.offset+other.length and
            other.offset < self.offset + self.length
        )

    def contains(self, offset):
        return self.offset <= offset < (self.offset + self.length)

    def __lt__(self, other):
        return (self.offset, self.length) < (other.offset, other.length)

    def __eq__(self, other):
        return self.offset == other.offset and self.length == other.length
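A quick way to convince yourself of the semantics is to try a few segments by hand. The class is repeated in the sketch below only so that it runs on its own; the sample segments are made up for illustration.

```python
import functools

@functools.total_ordering
class Segment:
    # Same definition as above, repeated so this snippet runs on its own.
    def __init__(self, offset, length):
        self.offset = offset
        self.length = length

    def overlaps(self, other):
        return (
            self.offset < other.offset + other.length and
            other.offset < self.offset + self.length
        )

    def contains(self, offset):
        return self.offset <= offset < (self.offset + self.length)

    def __lt__(self, other):
        return (self.offset, self.length) < (other.offset, other.length)

    def __eq__(self, other):
        return self.offset == other.offset and self.length == other.length

a = Segment(0, 10)   # bytes 0..9
b = Segment(5, 10)   # bytes 5..14
c = Segment(10, 5)   # bytes 10..14

print(a.overlaps(b))                          # True: they share bytes 5..9
print(a.overlaps(c))                          # False: a ends where c begins
print(a.contains(9), a.contains(10))          # True False: the end is exclusive
print([s.offset for s in sorted([c, b, a])])  # [0, 5, 10]
```

Note that adjacent segments do not count as overlapping, which is what lets the cache stitch neighboring chunks together without re-reading any bytes.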

Using that class, we can create a constructor that loads the metadata from the cache directory:

import os

class CachingFile:
    def __init__(self, fileobj, backingstore):
        """fileobj is a file-like object to cache. backingstore is a directory name.
        """
        self.fileobj = fileobj
        self.backingstore = backingstore
        self.offset = 0
        os.makedirs(backingstore, exist_ok=True)
        try:
            with open(os.path.join(backingstore, 'size'), 'r', encoding='utf-8') as size_file:
                self._size = int(size_file.read().strip(), 10)
        except (OSError, ValueError):
            # No usable size file yet; size() will ask the underlying file
            self._size = -1
        # Get files and sizes for any pre-existing data, so
        # self.available_segments is a sorted list of Segments.
        self.available_segments = sorted(
            Segment(int(filename[len("data."):], 10), os.stat(os.path.join(self.backingstore, filename)).st_size)
            for filename in os.listdir(self.backingstore) if filename.startswith("data."))

and the simple seek/tell/seekable parts of the interface we learned above:

    def size(self):
        if self._size < 0:
            self._size = self.fileobj.seek(0, 2)
            with open(os.path.join(self.backingstore, 'size'), 'w', encoding='utf-8') as size_file:
                size_file.write(f"{self._size}\n")
        return self._size

    def seek(self, offset, whence=0):
        if whence == 0:
            self.offset = offset
        elif whence == 1:
            self.offset += offset
        elif whence == 2:
            self.offset = self.size() + offset
        else:
            raise ValueError("Invalid whence")
        return self.offset

    def tell(self):
        return self.offset

    def seekable(self):
        return True

Implementation of read() is a bit more complex. It needs to handle reads with nothing in the cache, reads with everything in the cache, but also reads with multiple cached and uncached segments.

    def _read(self, offset, count):
        """Does not update self.offset"""
        if offset >= self.size() or count == 0:
            return b""
        # Never ask for data beyond the end of the file
        count = min(count, self.size() - offset)
        desired_segment = Segment(offset, count)
        # Is there a cached segment for the start of this segment?
        matches = sorted(segment for segment in self.available_segments if segment.contains(offset))
        if matches: # Read data from cache
            match = matches[0]
            with open(os.path.join(self.backingstore, f"data.{match.offset}"), 'rb') as data_file:
                data_file.seek(offset - match.offset)
                data = data_file.read(min(offset+count, match.offset+match.length) - offset)
        else: # Read data from underlying file
            # The beginning of the requested data is not cached, but if a later
            # portion of the data is cached, we don't want to re-read it, so
            # request only up to the next cached segment.
            matches = sorted(segment for segment in self.available_segments if segment.overlaps(desired_segment))
            if matches:
                match = matches[0]
                chunk_size = match.offset - offset
            else:
                chunk_size = count
            # Read from the underlying file object
            if not self.fileobj:
                raise RuntimeError(f"No underlying file to satisfy read of {count} bytes at offset {offset}")
            self.fileobj.seek(offset)
            data = self.fileobj.read(chunk_size)
            if data:
                # Save to the backing store
                with open(os.path.join(self.backingstore, f"data.{offset}"), 'wb') as data_file:
                    data_file.write(data)
                # Add it to the list of available segments, using the number
                # of bytes actually read in case the read came up short
                self.available_segments.append(Segment(offset, len(data)))
        # Read the rest of the data if needed; stop if no progress was made
        if data and len(data) < count:
            data += self._read(offset+len(data), count-len(data))
        return data

    def read(self, count=-1):
        if count < 0:
            count = self.size() - self.offset
        data = self._read(self.offset, count)
        self.offset += len(data)
        return data
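To see how a single read can break into cached and uncached pieces, here is a simplified sketch of just the planning logic, with no disk or network involved. plan_read is a hypothetical helper, not part of CachingFile; unlike _read, it ignores the end of the file and does not add fetched chunks to the cache.

```python
def plan_read(cached, offset, count):
    """Hypothetical planner mirroring the decisions made by _read above.

    cached is a list of (offset, length) tuples for chunks already on disk.
    Returns a list of (source, offset, length) steps, where source is
    "cache" or "fetch".
    """
    steps = []
    end = offset + count
    while offset < end:
        # Cached chunks that contain the current offset.
        holding = sorted(c for c in cached if c[0] <= offset < c[0] + c[1])
        if holding:
            start, length = holding[0]
            stop = min(end, start + length)
            steps.append(("cache", offset, stop - offset))
        else:
            # Fetch only up to the start of the next cached chunk, if any.
            later = sorted(c[0] for c in cached if offset < c[0] < end)
            stop = later[0] if later else end
            steps.append(("fetch", offset, stop - offset))
        offset = stop
    return steps

print(plan_read([], 0, 100))                    # nothing cached
print(plan_read([(0, 100)], 10, 20))            # everything cached
print(plan_read([(20, 10), (50, 10)], 0, 70))   # a mix of both
```

The third call alternates between the two sources: fetch 20 bytes, take 10 from cache, fetch 20, take 10 from cache, fetch the final 10.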

Notice the RuntimeError raised if we created the CachingFile object with fileobj=None. Why would we ever do that? Well, if we have fully cached the file, then we can run entirely from cache. If the original file (or URL, in our HttpFile case) is no longer available, the cache may be all we have. Or perhaps we want to isolate some operation, so we run once in "non-isolated" mode with the file object passed in, and then run in "isolated" mode with no file object. If the second run works, we know we have locally cached everything needed for the operation in question.

Our motivation is to use this with HttpFile, but it could be used in other situations. Perhaps you have mounted an sshfs file system over a slow or expensive link; CachingFile would improve performance or reduce cost. Or maybe you have the original files on a hard drive, but put the cache on an SSD so repeated reads are faster. (Though in the latter case, Linux offers functionality that would likely be superior to anything implemented in Python.)

Generalized Lesson

So those are a couple of handy utilities, but they demonstrate a more profound point.

When you design your code around standard interfaces, your solutions can be applied in a broader range of situations, and reduce the amount of code you must write to achieve your goal.

When faced with a problem of the form "I want to perform an operation on <something>, but I only know how to operate on <something else>", consider if you can create code that takes the "something" you have, and provides an interface that looks like the "something else" that you can use. If you can write that code to adapt one kind of thing to another kind of thing, you can solve your problem without having to reimplement the operation you already have code to do. And you might find there are more uses for the result than you anticipated.

LDraw Parts Library 2024-09 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-09 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202409-ec1.fc39.src.rpm

ldraw_parts-202409-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202409-ec1.fc39.noarch.rpm

ldraw_parts-models-202409-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2024-08 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-08 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202408-ec1.fc39.src.rpm

ldraw_parts-202408-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202408-ec1.fc39.noarch.rpm

ldraw_parts-models-202408-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2024-07 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-07 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202407-ec1.fc39.src.rpm

ldraw_parts-202407-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202407-ec1.fc39.noarch.rpm

ldraw_parts-models-202407-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2024-06 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-06 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202406-ec1.fc39.src.rpm

ldraw_parts-202406-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202406-ec1.fc39.noarch.rpm

ldraw_parts-models-202406-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2024-04 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-04 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202404-ec1.fc39.src.rpm

ldraw_parts-202404-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202404-ec1.fc39.noarch.rpm

ldraw_parts-models-202404-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

Sabaton Index updates; through May 2024

Since the last update, Sabaton released two videos of full albums. The SabatonIndex is updated with links to that new content.

LDraw Parts Library 2024-03 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-03 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202403-ec1.fc39.src.rpm

ldraw_parts-202403-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202403-ec1.fc39.noarch.rpm

ldraw_parts-models-202403-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

Sabaton Index updates; through March 2024

Since the last update, Sabaton released a bunch of new lyric videos, some new history videos, and three videos of full albums. A couple of the history videos relate to multiple songs, so those will appear multiple times in the list. The SabatonIndex is updated with links to that new content.

LDraw Parts Library 2024-01 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2024-01 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202401-ec1.fc39.src.rpm

ldraw_parts-202401-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202401-ec1.fc39.noarch.rpm

ldraw_parts-models-202401-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2023-07 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2023-07 parts library for Fedora 39 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202307-ec1.fc39.src.rpm

ldraw_parts-202307-ec1.fc39.noarch.rpm

ldraw_parts-creativecommons-202307-ec1.fc39.noarch.rpm

ldraw_parts-models-202307-ec1.fc39.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2023-06 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2023-06 parts library for Fedora 38 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202306-ec1.fc38.src.rpm

ldraw_parts-202306-ec1.fc38.noarch.rpm

ldraw_parts-creativecommons-202306-ec1.fc38.noarch.rpm

ldraw_parts-models-202306-ec1.fc38.noarch.rpm

See also LDrawPartsLibrary.

Sabaton Index updates; through October 2023

Sabaton released a bunch of new lyric videos and some new history videos. The SabatonIndex is updated with links to that new content.

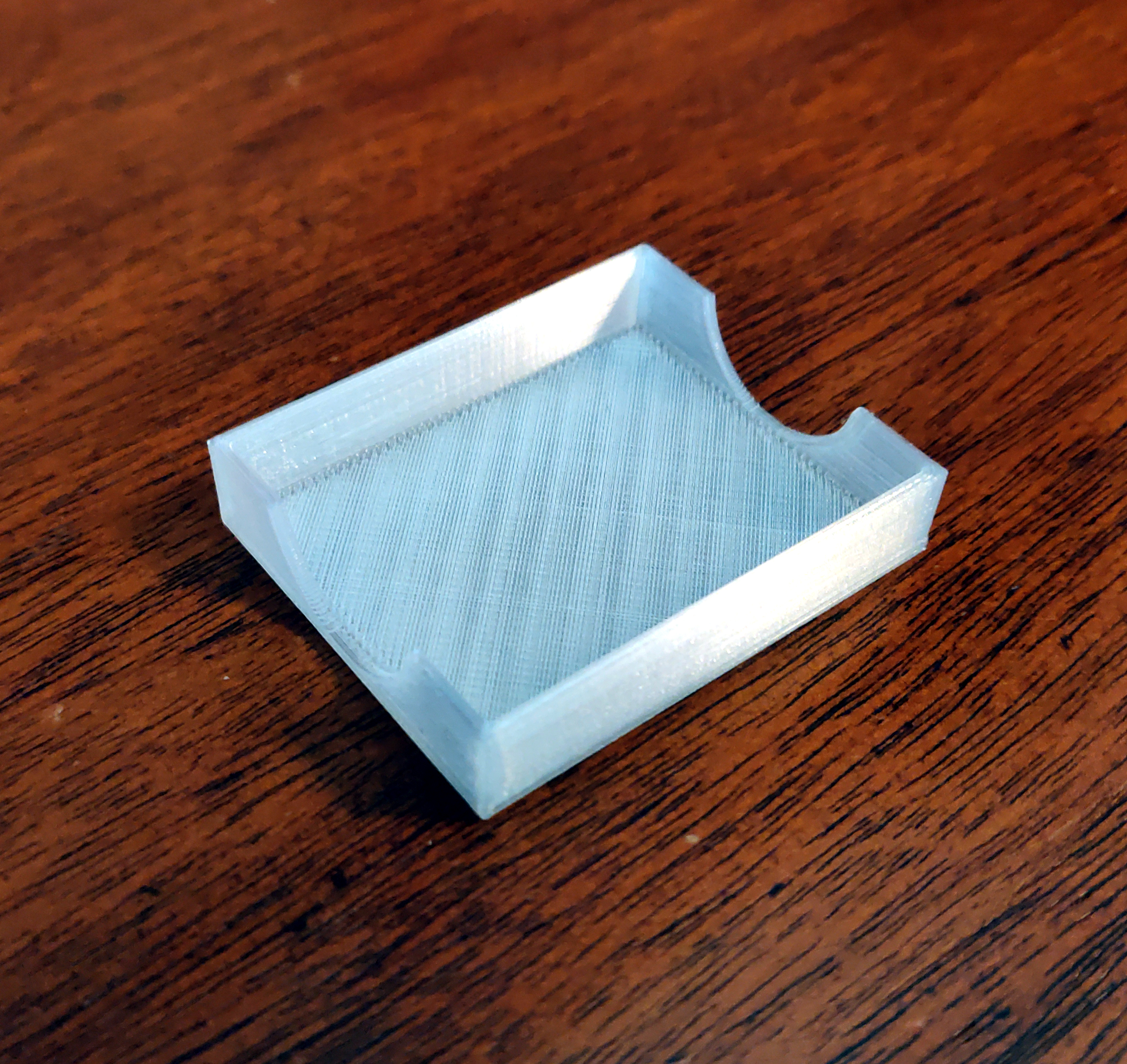

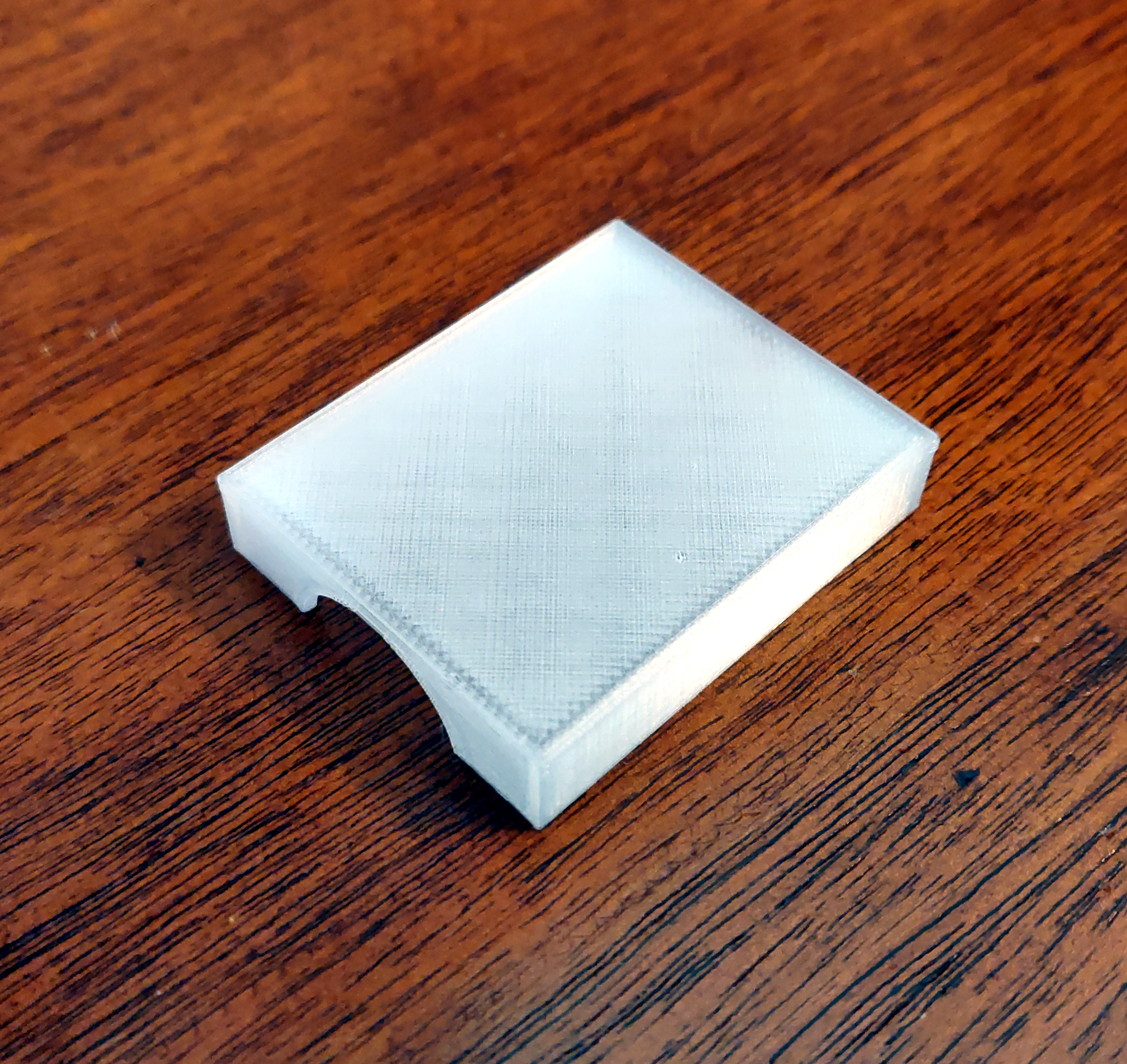

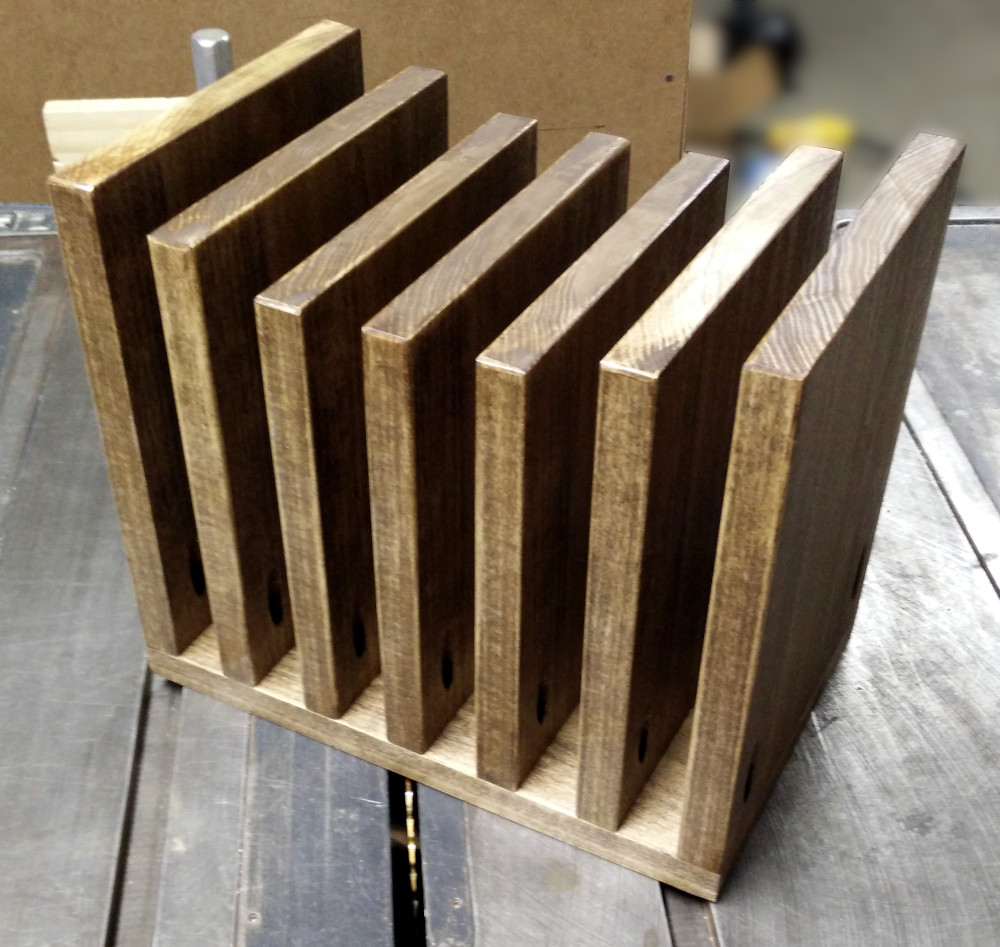

Heat-set threaded insert tooling box lid

I recently discovered heat-set threaded inserts. These little bits of brass provide a way to use bolts in 3D-printed plastic parts.

The idea is that you create a plastic part with a slightly-undersized hole where the insert will go, and then using a soldering iron with a specialized tip, you heat the insert, which softens the plastic enough that you can press it into the part. Once the plastic cools, the insert is firmly embedded in the part. The inserts have internal threads which a machine screw / bolt can be screwed into. They have sharp teeth features at opposing angles on the outside, so they remain firmly embedded in the part and can't themselves unscrew.

I bought a set of soldering iron tips for this purpose from Virtjoule (via Amazon). The set of brass tips came in a plastic tray which had obviously been 3D-printed.

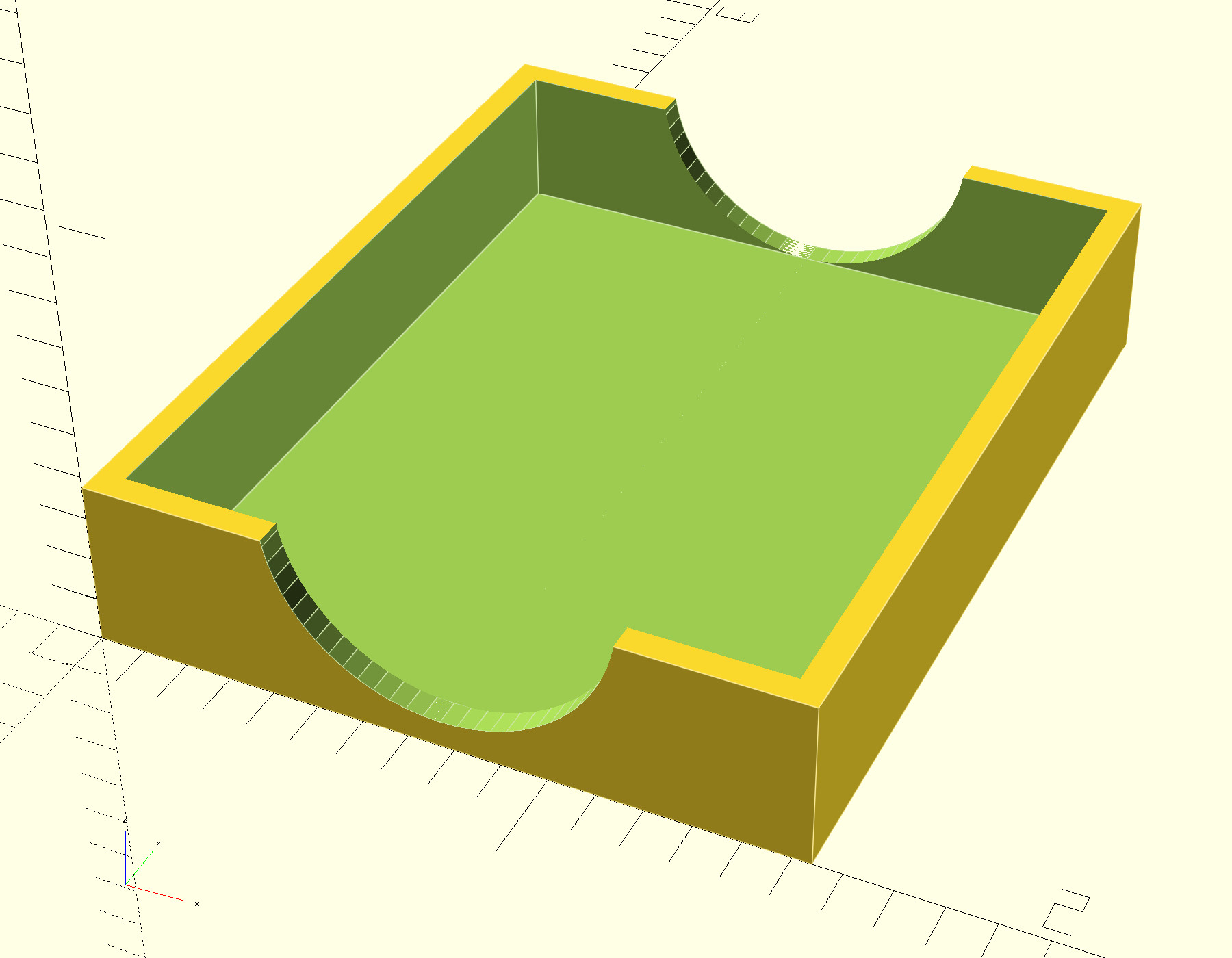

But there was no lid for the tray. So I threw together a 3D model of a lid for it (OpenSCAD, STL)

and printed it in "transparent" PLA.

That fits nicely and helps to keep the set of tips together:

Being able to quickly create a lid for a box is not something I had anticipated doing with a 3D printer, but in retrospect, it's an amazingly obvious application.

And then there's the intriguing aspect that it's useful to a business creating packaging for a specialty product. In this case, the US-based company is in a business where they are applying 3D-printed parts, and are selling a tool they themselves created and use. So they've found an additional use of infrastructure they already had, and a market for a product they had created for their own use. I think that's really neat.

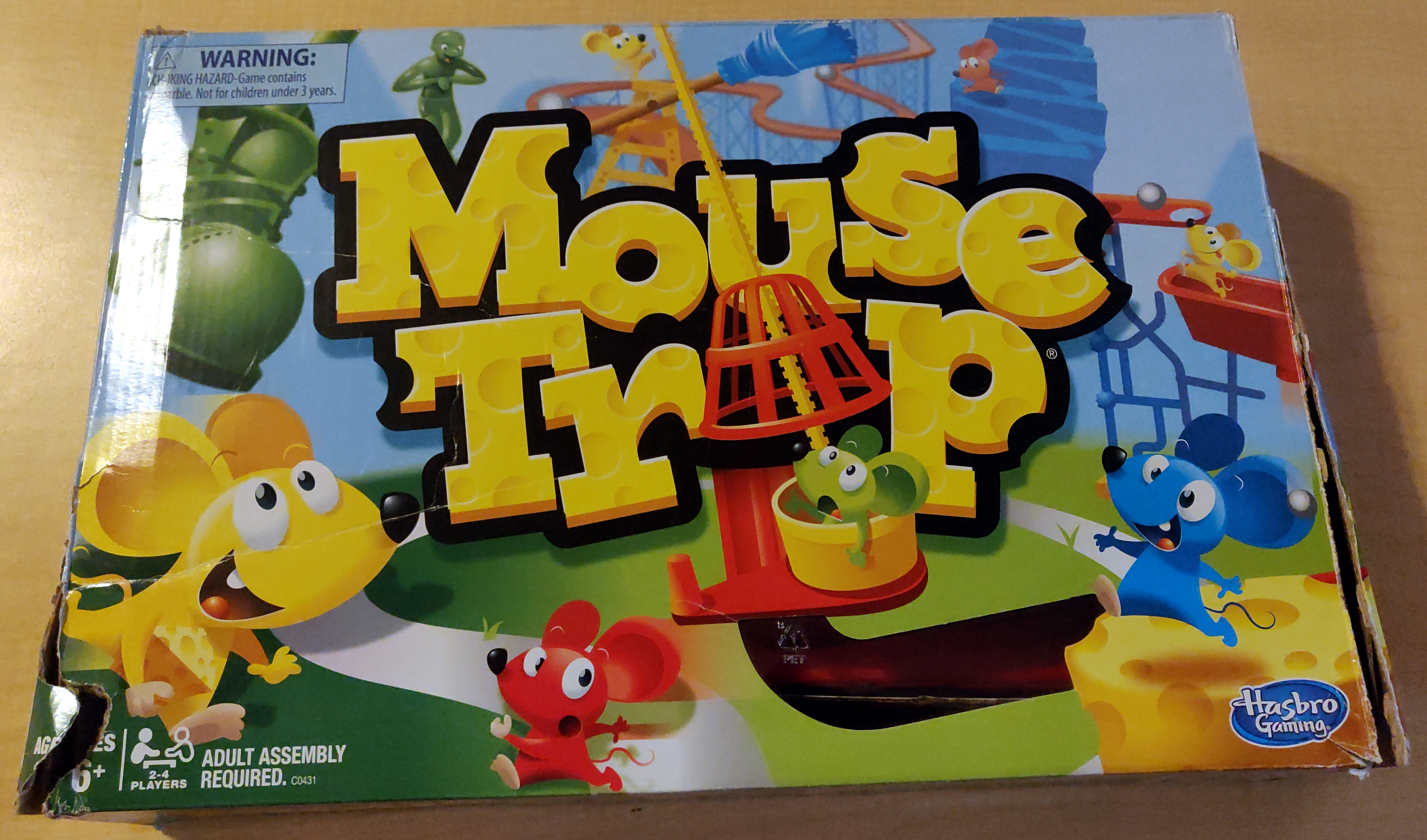

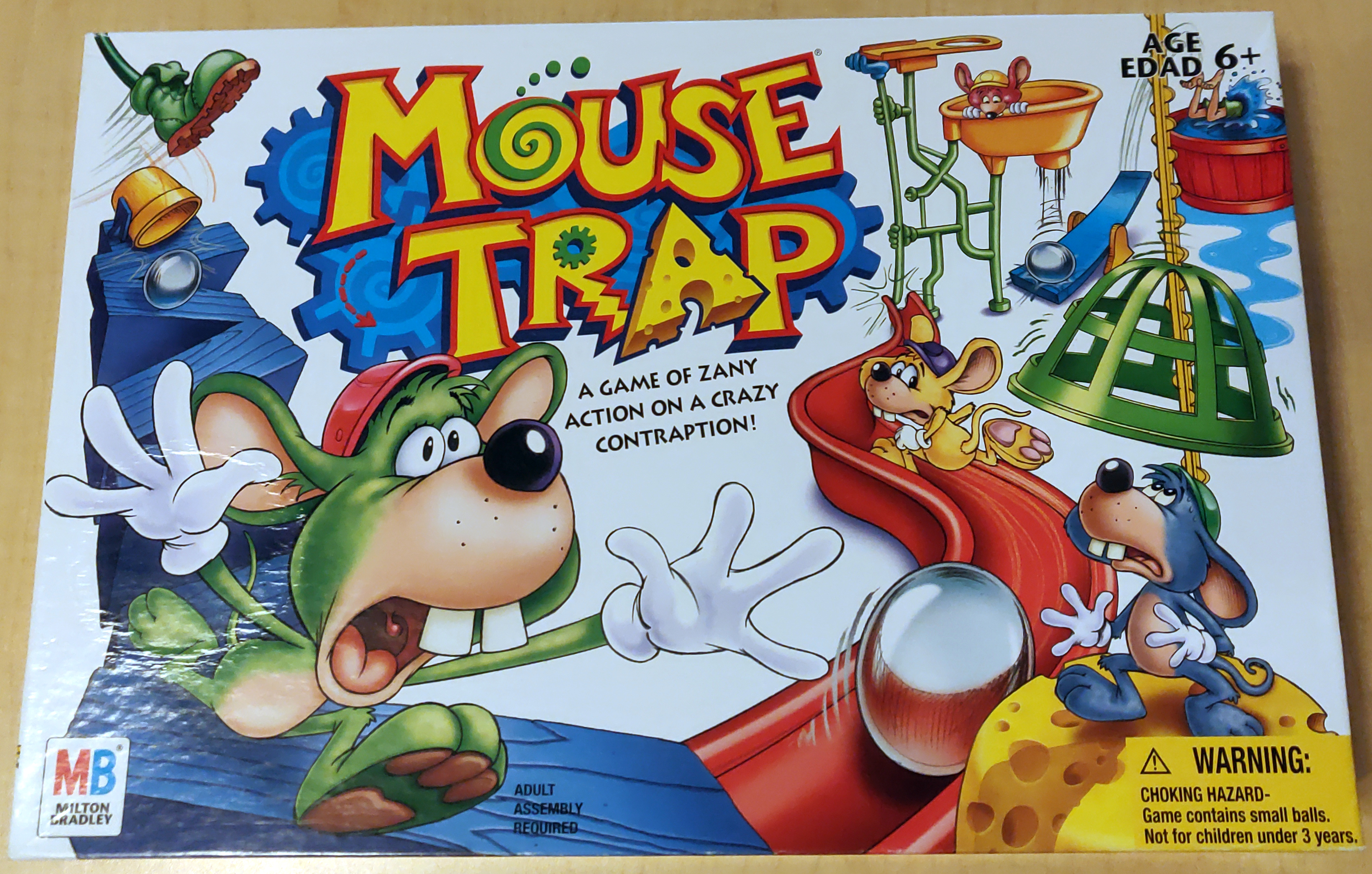

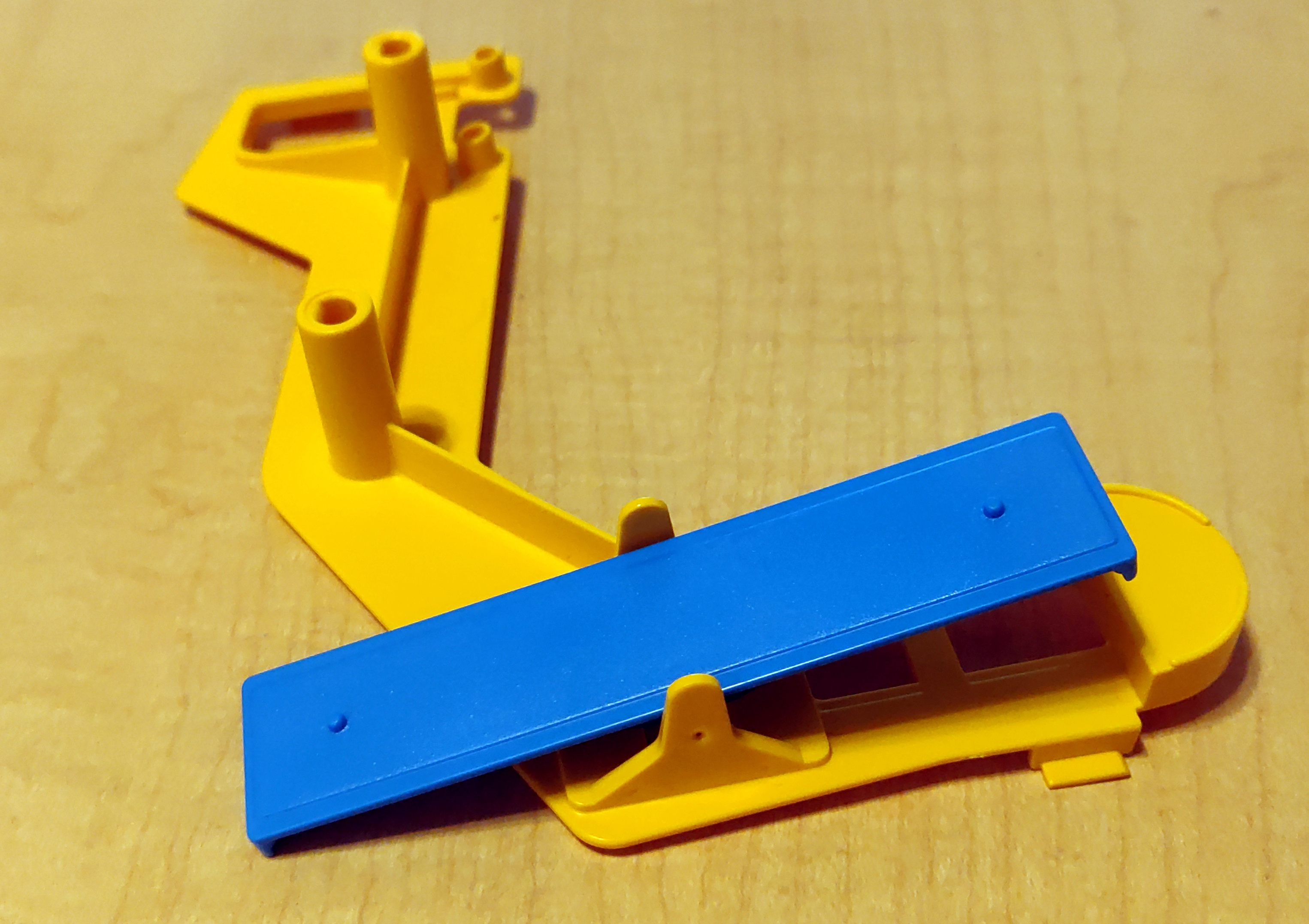

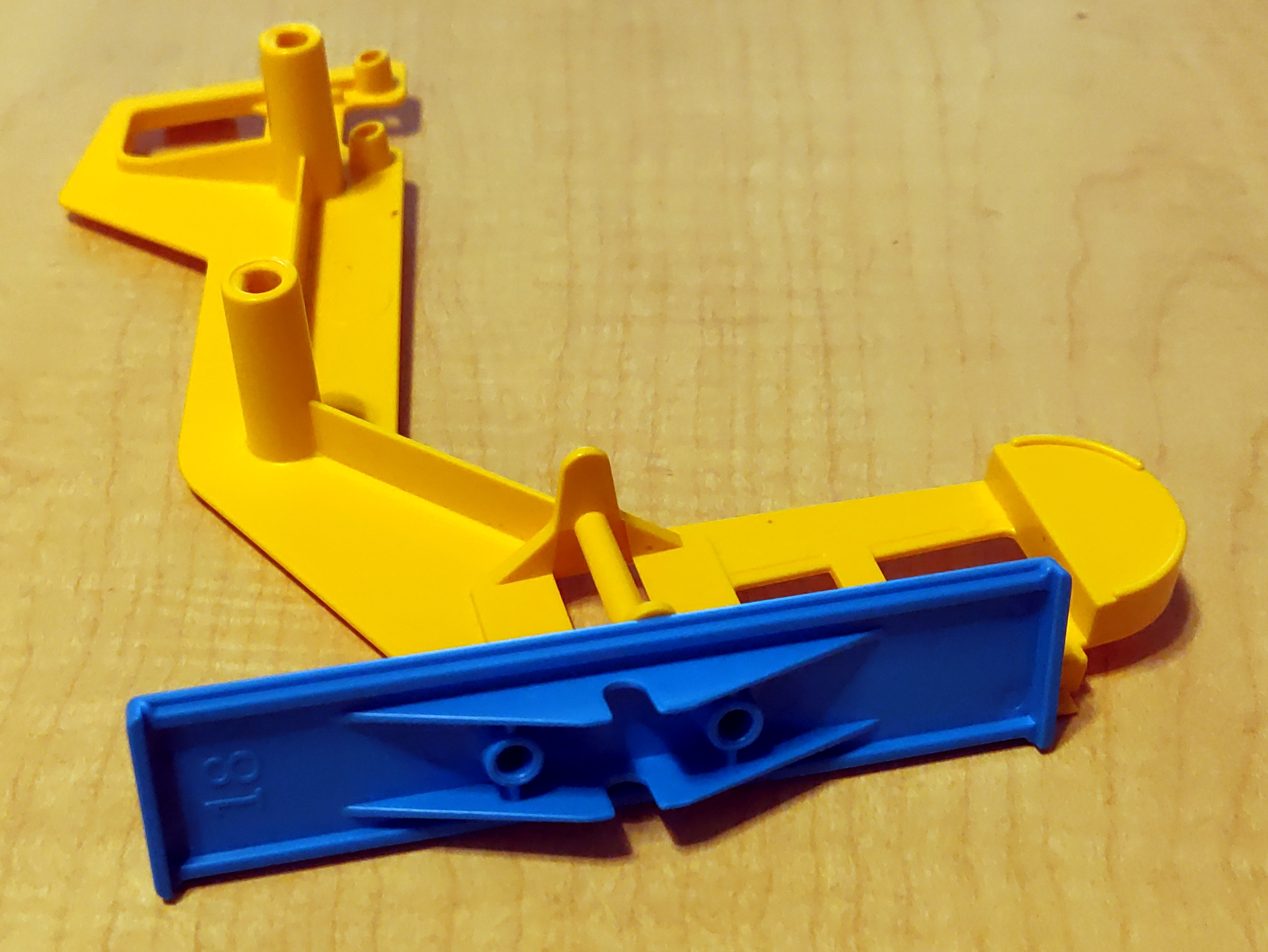

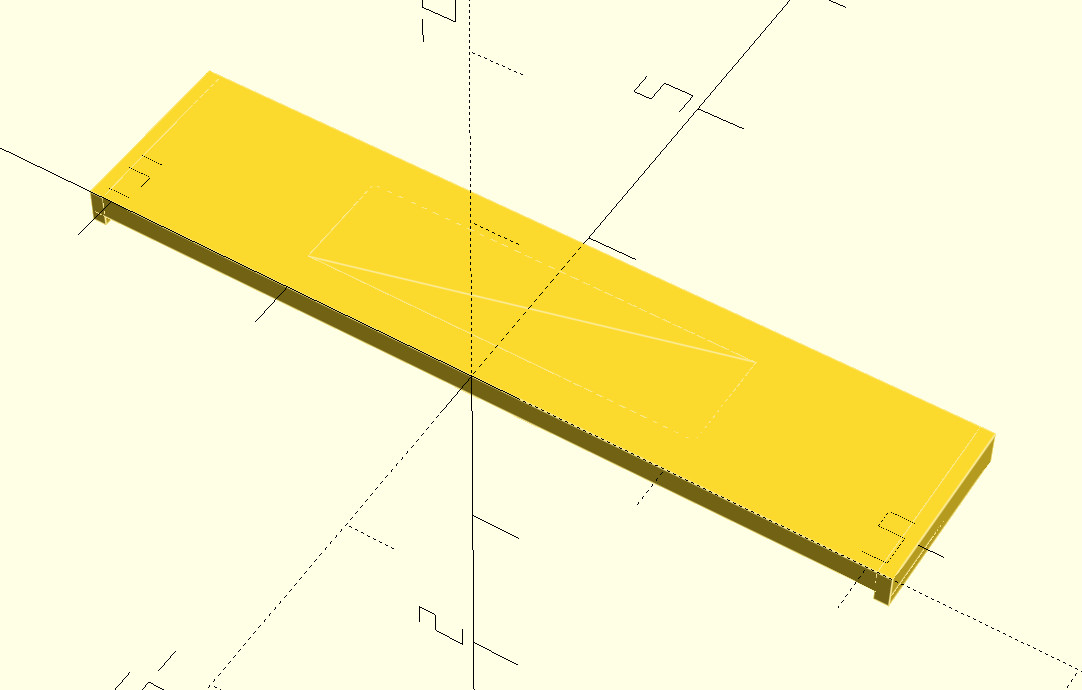

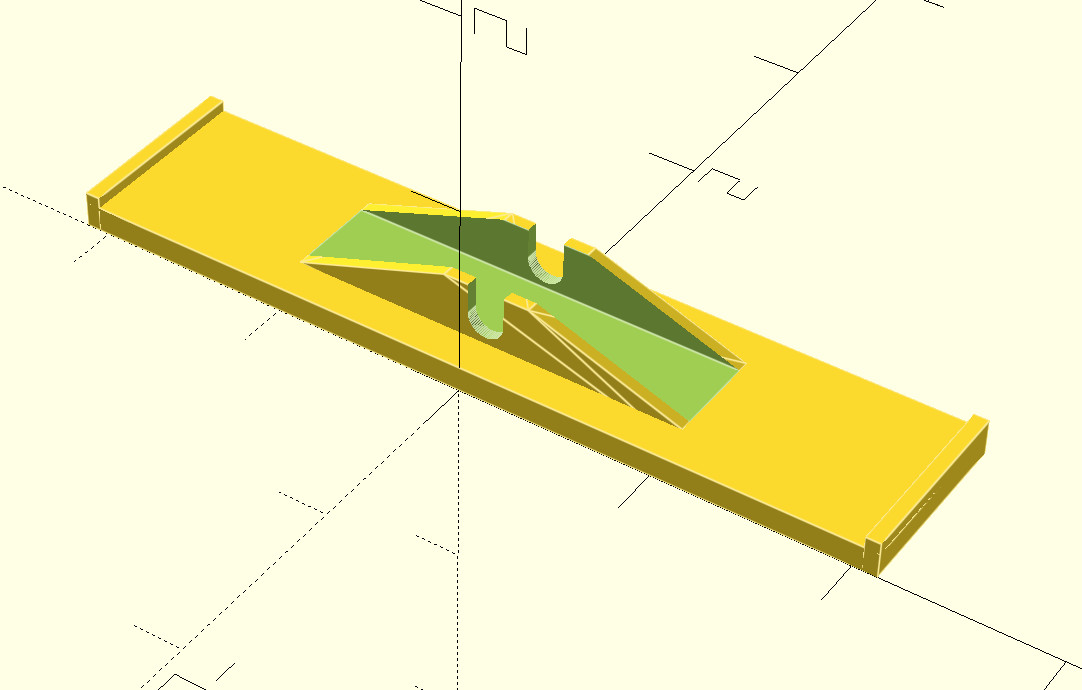

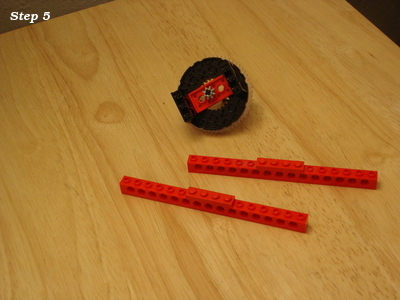

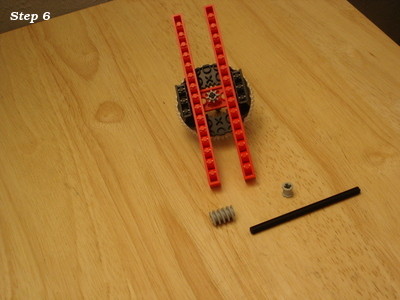

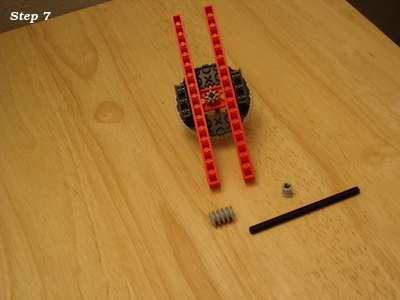

Mouse Trap replacement seesaw

There are some tools you figure will be useful, but it is difficult to really justify investing in one before you have it. And then you get it anyway, and discover how much more useful it is than you envisioned. Getting a 3D printer was that sort of experience for me; I knew I'd find it useful, but I hadn't realized just how useful. Using OpenSCAD and some calipers, I have managed to create replacement parts or useful gizmos that would not have occurred to me prior to owning a 3D printer.

Here is just one example.

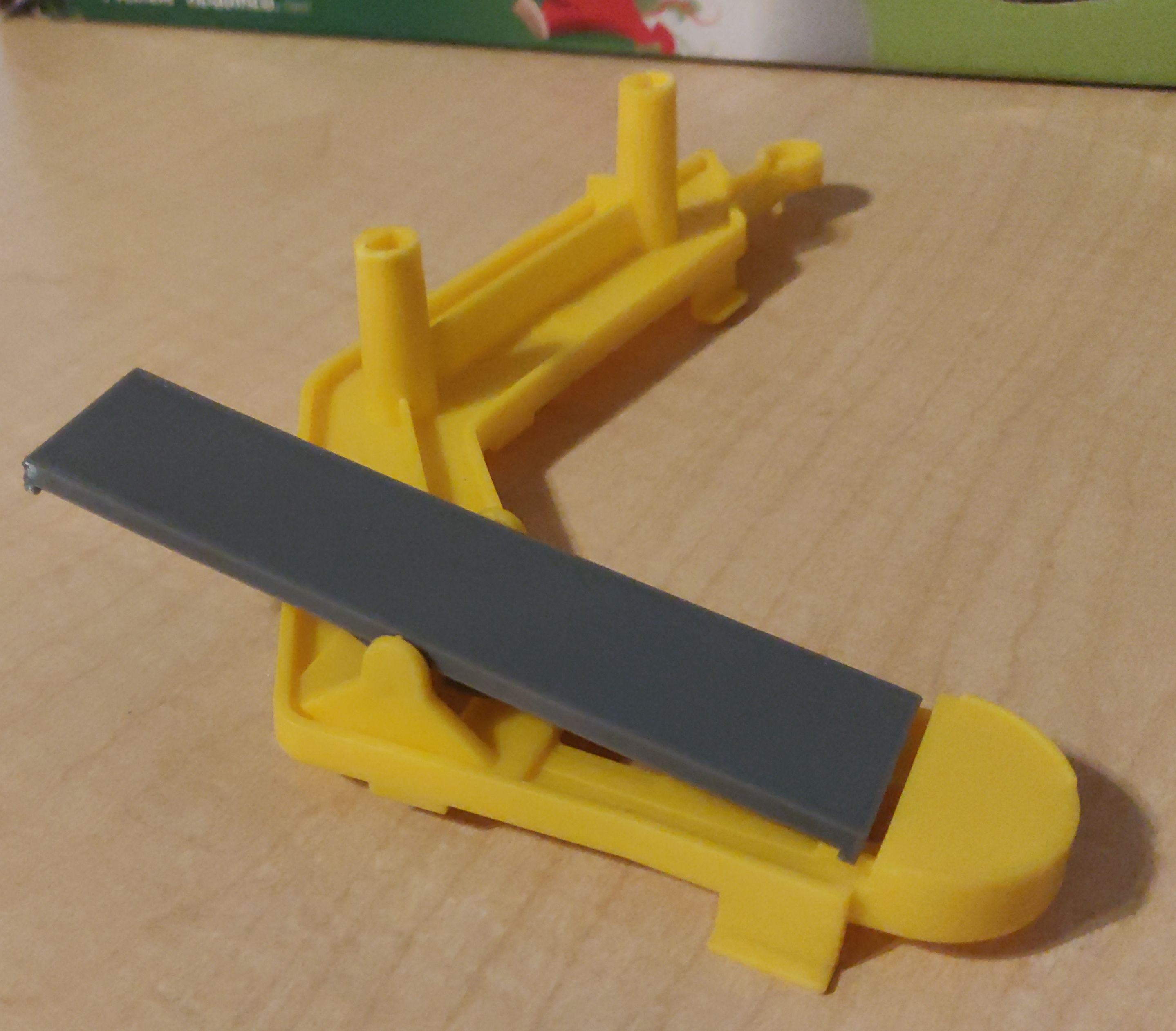

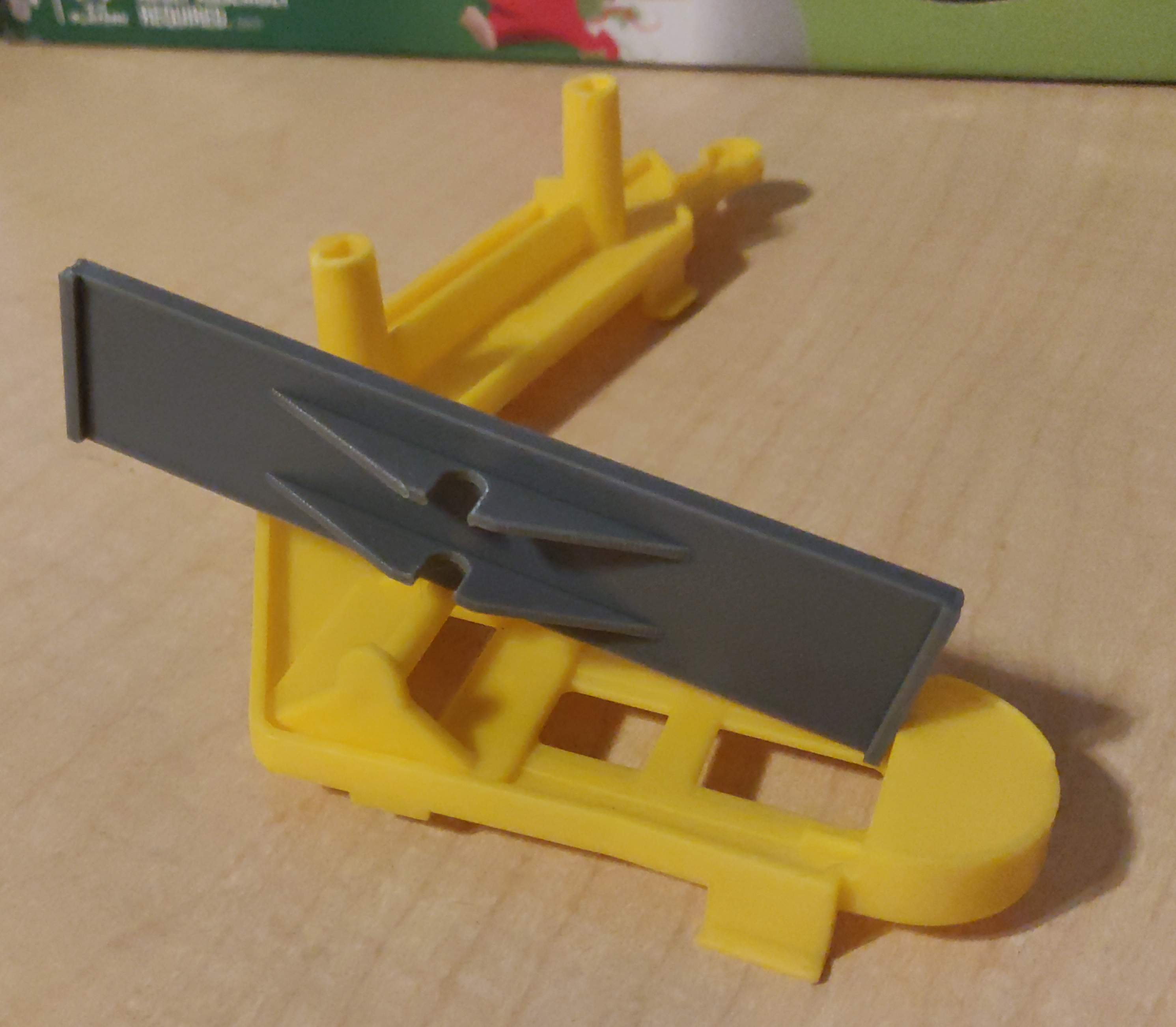

A friend of my wife had Mouse Trap, a kids' board game built around a Rube Goldberg contraption.

Unfortunately, she had lost the seesaw piece, which meant that the contraption could not work.

As it so happened, we also had the game, though it did have some differences.

The seesaw from our game still worked with hers.

So I grabbed my calipers, and threw together a quick approximation of our seesaw in OpenSCAD,

and printed it out.

The resulting seesaw, though not as brightly colored as the rest of the game, did work, and fixed her game.

If you want to print your own, here are the OpenSCAD model and the STL.

Sabaton Index updates; through July 2023

Sabaton released a few new lyric videos and a couple of new history videos. The SabatonIndex is updated with links to that new content.

LDraw Parts Library 2023-03 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2023-03 parts library for Fedora 36 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202303-ec1.fc36.src.rpm

ldraw_parts-202303-ec1.fc36.noarch.rpm

ldraw_parts-creativecommons-202303-ec1.fc36.noarch.rpm

ldraw_parts-models-202303-ec1.fc36.noarch.rpm

See also LDrawPartsLibrary.

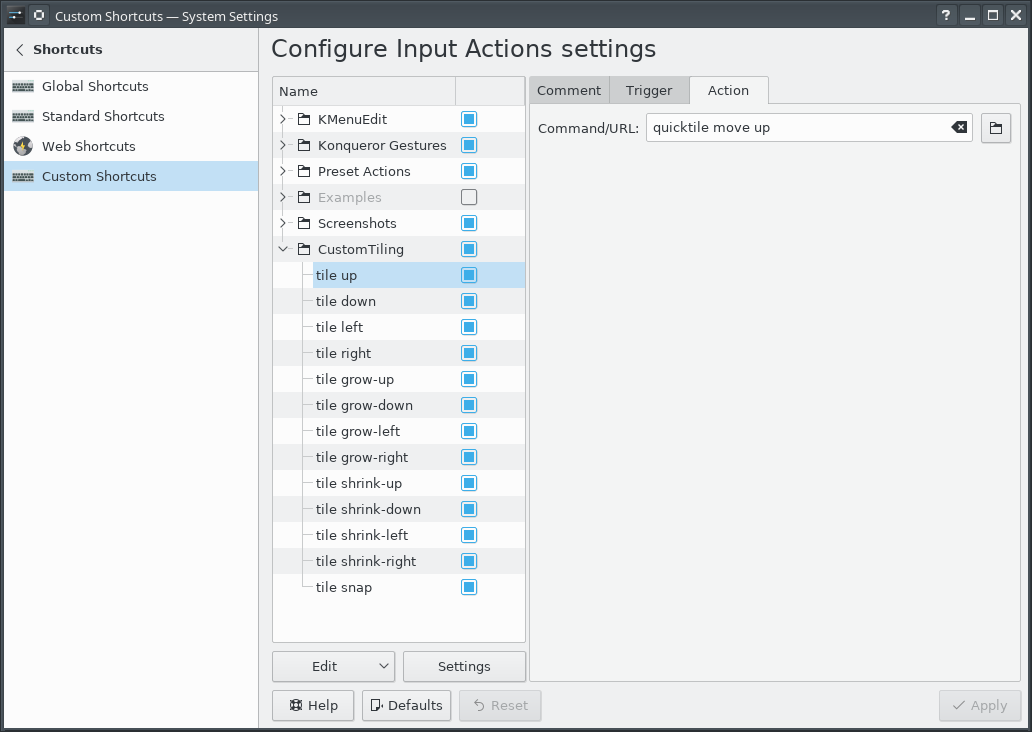

Grid-based Tiling Window Management, Mark III, aka QuickGridZones

With a laptop and a 4K monitor, I wind up with a large number of windows scattered across my screens. The general disarray of scattered and randomly offset windows drives me nuts.

I've done some work to address this problem before (here and here), which I had been referring to as "quicktile". But that's what KDE called its implementation that allowed snapping the window to either half of the screen, or to some quarter. On Windows, there's a PowerToys utility called "FancyZones" that also has a few similarities. In an effort to disambiguate what I've built, I've renamed my approach to "Quick Grid Zones".

Since the last post on this, I've done some cleanup of the logic and also ported it to work on Windows.

This isn't a cross-platform implementation, but rather three implementations with some structural similarities, implemented on top of platform-specific tools.

- Linux KDE - KDE global shortcuts that call a Python script using xdotool, wmctrl, and xprop

- macOS - a Lua script for Hammerspoon

- Windows - an AutoHotkey v2 script

Simple demo of running this on KDE:

Grab the local tarball for this release, or check out the QuickGridZones project page.

LDraw Parts Library 2023-02 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2023-02 parts library for Fedora 36 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202302-ec1.fc36.src.rpm

ldraw_parts-202302-ec1.fc36.noarch.rpm

ldraw_parts-creativecommons-202302-ec1.fc36.noarch.rpm

ldraw_parts-models-202302-ec1.fc36.noarch.rpm

See also LDrawPartsLibrary.

LeoCAD 23.03 - Packaged for Linux

LeoCAD is a CAD application for building digital models with Lego-compatible parts drawn from the LDraw parts library.

I packaged (as an rpm) the 23.03 release of LeoCAD for Fedora 36. This package requires the LDraw parts library package.

Install the binary rpm. The source rpm contains the files to allow you to rebuild the package for another distribution.

Fixing a "blank screen" after a failed flash of an Ender-3 Pro

The Problem:

I have a Creality Ender-3 Pro that came with a 32bit 4.2.2 motherboard. The firmware on these boards can be updated by booting from a correctly prepared uSD card containing compatible firmware. (This is done by "the bootloader", which I will refer to as the "second-stage bootloader" hereafter because there is a first-stage bootloader in ROM which jumps to the second-stage bootloader at the beginning of flash, which in turn handles any firmware upgrade and then jumps to the firmware later in the flash. Being pedantically precise is important when dealing with machines at this low level.)

But if something goes wrong with a firmware upgrade, you can be faced with a device that shows only a blank screen, and very little to go on when trying to diagnose why it won't flash a correct firmware.

If the board does not attempt to flash, and boots into the existing working firmware, you should see the splash screen about 2 seconds after power is applied. If the board successfully flashes the updated firmware, you should see a blank screen for about 15 seconds, and then the splash screen of the new firmware. If the board does not attempt to flash and the existing firmware is broken, you will see a blank screen forever. If the board flashes a broken firmware, you will also see a blank screen forever.
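The four cases above can be collapsed into a small lookup. This is only a sketch of the reasoning: the function name `diagnose` and the 5-second cutoff are my own assumptions, chosen to sit between the roughly 2-second and roughly 15-second delays described above.

```python
def diagnose(splash_delay_s):
    """Map the delay before the splash screen appears to a likely cause.

    splash_delay_s is the number of seconds after power-on at which the
    splash screen showed up, or None if the screen stayed blank.
    The 5-second cutoff is an assumed threshold, not a measured one.
    """
    if splash_delay_s is None:
        # Two cases look identical from the outside.
        return ("blank forever: either no flash was attempted and the existing "
                "firmware is broken, or a broken firmware was flashed")
    if splash_delay_s < 5:
        return "no flash attempted: existing working firmware booted"
    return "flash succeeded: new firmware booted"
```

Note that a blank screen alone cannot tell you whether the flash was attempted, which is a large part of why this problem is hard to debug.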

I'm not the only one who ran into this issue, and I found a lot of advice online regarding this, but the second-stage bootloader on this board seems to be rather finicky, which led to people drawing apparently erroneous conclusions about what they did to fix their machines.

The documentation mentions that you should use a uSD card 8GB or smaller. But I have found that a properly-prepared 512MB uSD card does not work either. So it appears that there is also a lower bound to the uSD card size somewhere between 8GB and 512MB.

It is commonly believed that the firmware file must be named firmware<number>.bin, where <number> is a number different from what was previously flashed. I found that the requirement is that the file be named <something>.bin, where <something> is different from what was previously flashed. For example, I was able to flash the firmware from a file named Ender-3 Pro- Marlin2.0.1 - V1.0.1 - TMC2225 - English.bin.

And to be explicit, it only tracks the most recently flashed filename. If you have two uSD cards, one with firmware1.bin and the other with firmware2.bin, you can boot from them alternately, and successfully re-flash each time.
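To make that behavior concrete, here is a toy model of what I observed. It is not the bootloader's actual code, which I have not seen; the class name `BootloaderModel` and the exact `.bin` suffix check are assumptions for illustration.

```python
class BootloaderModel:
    """Toy model of the observed behavior: only the last filename is remembered."""

    def __init__(self, last_flashed=None):
        self.last_flashed = last_flashed

    def boot(self, bin_name):
        """Return True if this model would flash the given file on boot."""
        if not bin_name.endswith(".bin"):
            return False  # assumed: only *.bin files are considered
        if bin_name == self.last_flashed:
            return False  # same name as last time: skip the flash
        self.last_flashed = bin_name
        return True
```

Alternating between two cards then behaves as described: `firmware1.bin` flashes, `firmware2.bin` flashes, and `firmware1.bin` flashes again, because only the most recent name is compared.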

I have also seen people suggest using an 8.3 filename (limiting oneself to 8 character filename and 3 character bin extension). However, the firmware correctly identified that Ender-3 Pro- Marlin2.0.1 - V1.0.1 - TMC2225 - English2.bin differed from Ender-3 Pro- Marlin2.0.1 - V1.0.1 - TMC2225 - English.bin and flashed the image.

I found people talking about using a freshly formatted or brand new uSD card, and frequently people blamed cheap uSD cards as being faulty, including the uSD card that came with the printer. It appears to me that this is misattributing to the uSD hardware what is really a problem with the second-stage bootloader. Starting with an 8GB uSD card containing a correct firmware file, I would wind up with a blank screen. Taking that same 8GB uSD card, wiping the entirety of it with zeros, creating a vfat filesystem on it, copying that same firmware file to it, and flashing from that would reliably succeed. Writing an image of the previous filesystem to the uSD card would reliably recreate the blank screen problem. So the problem was clearly with the data, not with the hardware.

It appears that the history of the filesystem must play a role in the blank screen problem. The original uSD card, with all files deleted, and a correct firmware file copied to it, was causing the blank screen problem. I must conclude that the second-stage bootloader is looking at deleted file entries, or the data blocks for the firmware file were fragmented in some way that confused it, or... something. My attempts to produce a filesystem from scratch that would exhibit this behavior were not successful.

I've seen instructions about having to create a partition on the uSD card at exactly the right place. However, I found I could reliably flash the board with no partitioning of the device.

Solution

At this point, I can reliably flash the board with this process:

Fully wipe and format the device by running these commands as root:

dd if=/dev/zero bs=1G of=/dev/mmcblk0

mkfs -t vfat /dev/mmcblk0

sync

Caution: Ensure that you use the correct device name for your system (/dev/mmcblk0 was the device on my machine); using the wrong device could wipe and reformat your entire laptop.

When using the SD card slot on my laptop, the sync was superfluous, but when using a USB uSD card adapter, it would aggressively cache the writes and the bulk of the time was spent waiting on the sync. (That process takes about 12 minutes on my machine with the uSD card that shipped with the printer.)

Mount the device, copy the firmware file to it as firmware1.bin, and unmount the device. Insert it into the board, power on, and wait for it to flash and boot into the new firmware. If it does not attempt to flash, rename the firmware file and try again.
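Since the retry step boils down to "pick a name that differs from the last one", a small helper can do the renaming. This is a sketch with a hypothetical function name, `next_firmware_name`; it simply bumps a trailing number, or appends one if there is none.

```python
import re


def next_firmware_name(previous):
    """Return a .bin filename that differs from the previously used one."""
    match = re.fullmatch(r"(.*?)(\d+)\.bin", previous)
    if match:
        stem, number = match.groups()
        # Bump the trailing number: firmware1.bin -> firmware2.bin
        return f"{stem}{int(number) + 1}.bin"
    if previous.endswith(".bin"):
        # No trailing number: English.bin -> English1.bin
        return previous[: -len(".bin")] + "1.bin"
    return previous + "1.bin"
```

Any name that differs would do; incrementing a number just makes it easy to keep track of how many attempts you have made.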

Caveats:

While debugging this, I attempted many scenarios with various parts of the system disconnected, such as all the motors, switches, sensors, and heaters. In order to get a handle on the variables, I did my testing to draw the conclusions in this article with the motherboard connected to the LCD screen, all other components disconnected, and powered by the micro USB port. I did verify that I was able to flash the firmware with the LCD screen disconnected; i.e., with the only connection to the board being the micro USB cable to power it. I did not see any indication that the board behaved differently with everything disconnected vs with everything properly connected and installed in the printer. But with the number of flaky oddities I saw in working through this, such context is important to capture.

I have upgraded my Ender-3 Pro with the 4.2.7 silent motherboard, and ran into the same blank screen problem when trying to flash it to support the CR Touch. I was able to recover it using the same techniques above.

Sabaton Index updates; new song "The First Soldier"

Sabaton released a new song this month about Albert Severin Roche titled The First Soldier along with a few history videos on Sarajevo, The Battles of Doiran, and the Dreadnought. They've also filled in some lyric videos for past songs.

I've updated SabatonIndex with the discography and videos they've released of late.

LDraw Parts Library 2022-06 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2022-06 parts library for Fedora 36 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202206-ec1.fc36.src.rpm

ldraw_parts-202206-ec1.fc36.noarch.rpm

ldraw_parts-creativecommons-202206-ec1.fc36.noarch.rpm

ldraw_parts-models-202206-ec1.fc36.noarch.rpm

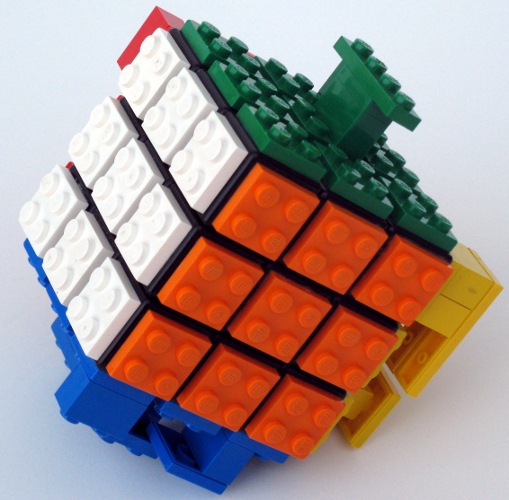

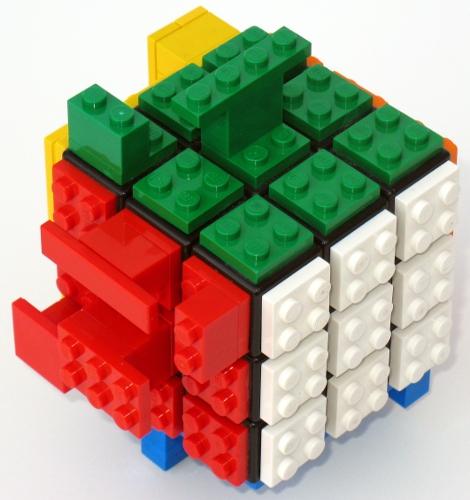

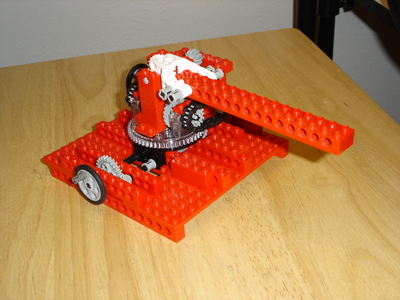

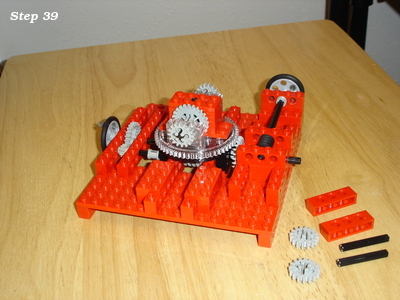

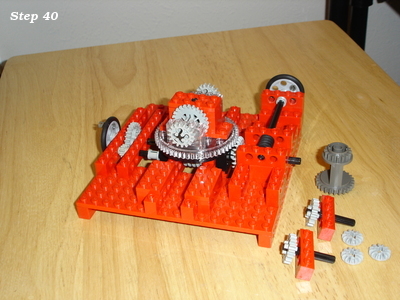

Cooling fan Lego assembly

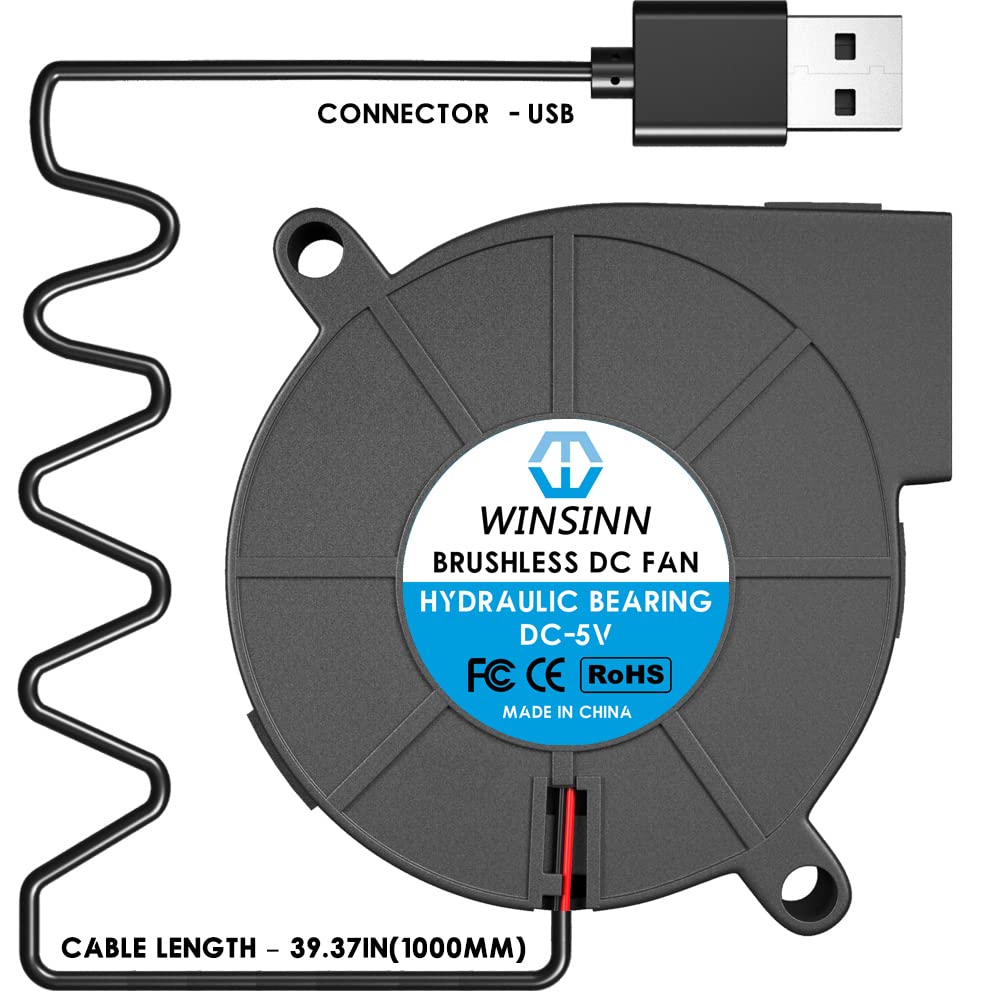

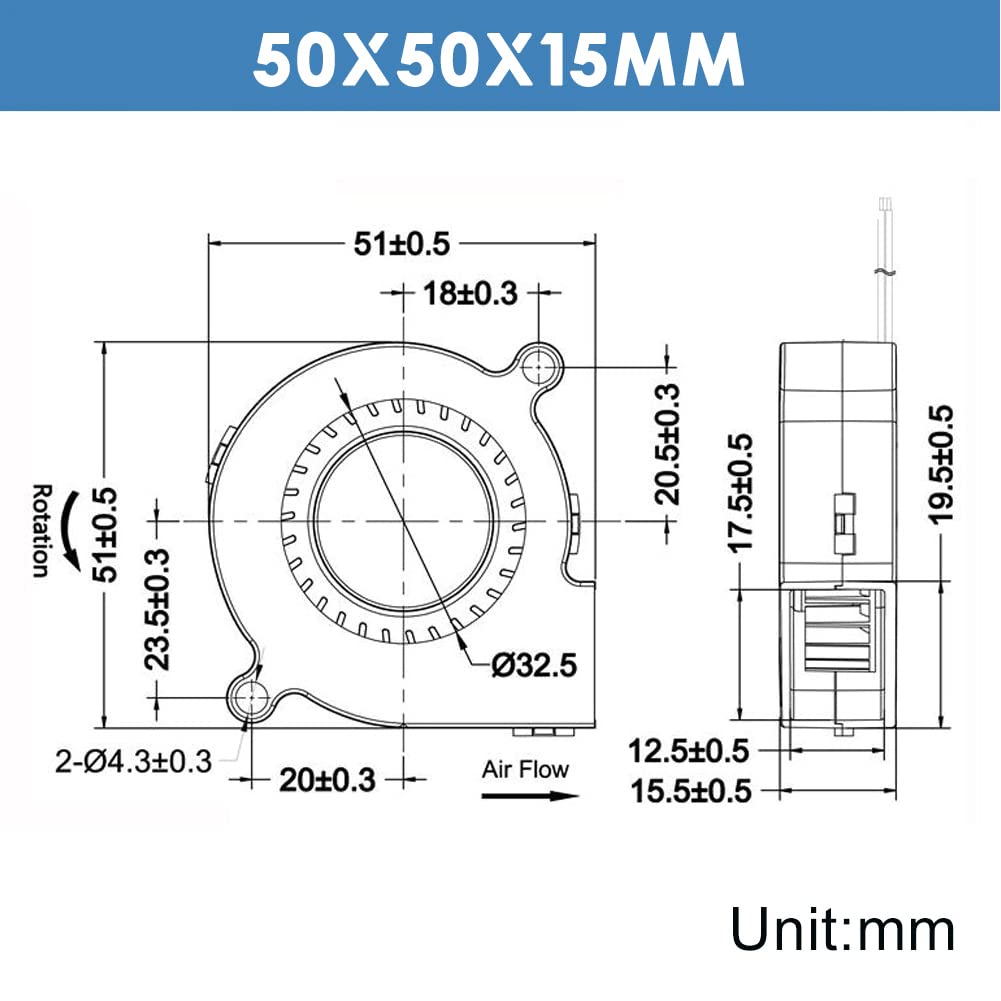

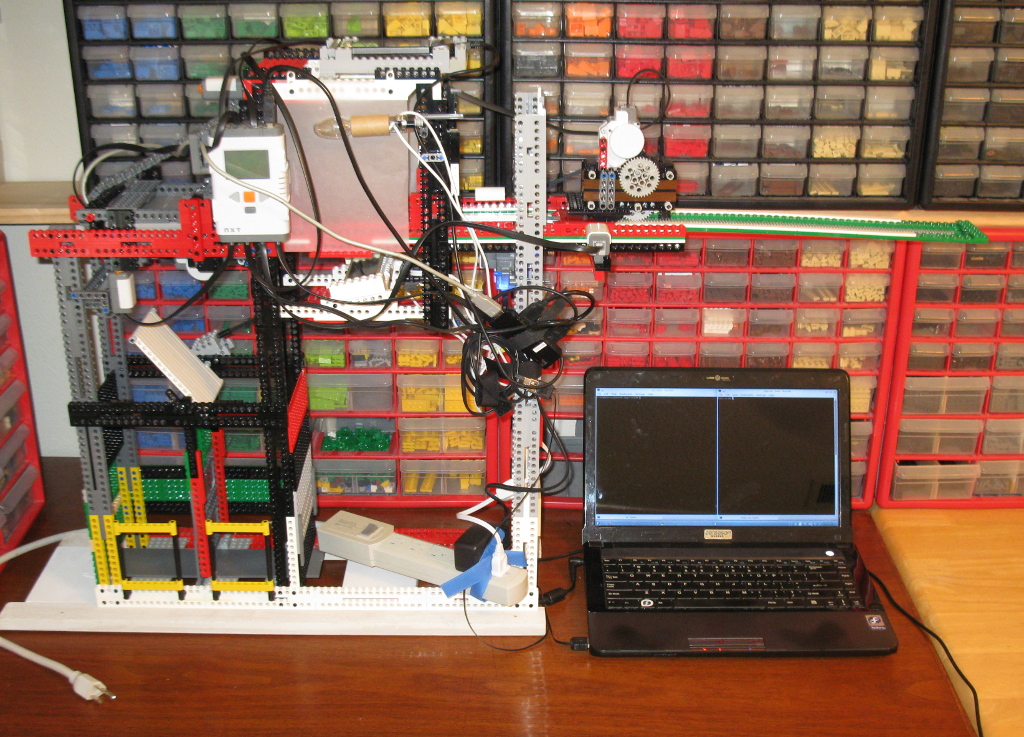

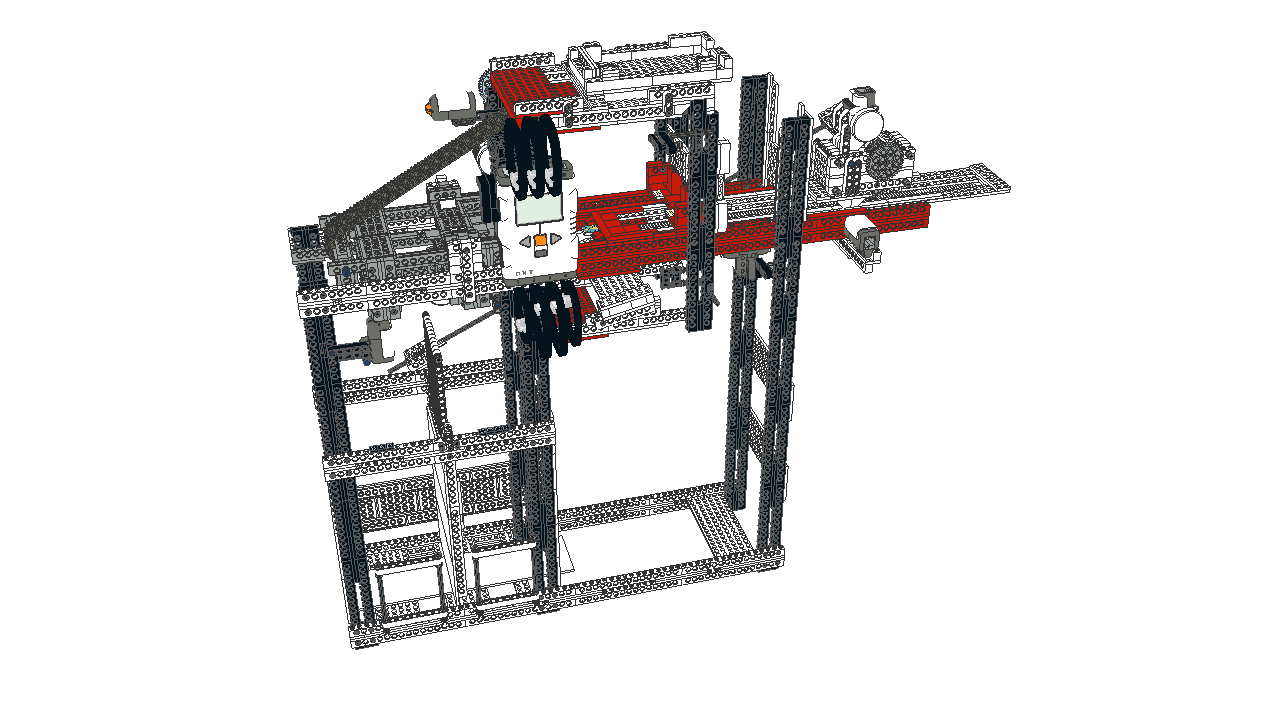

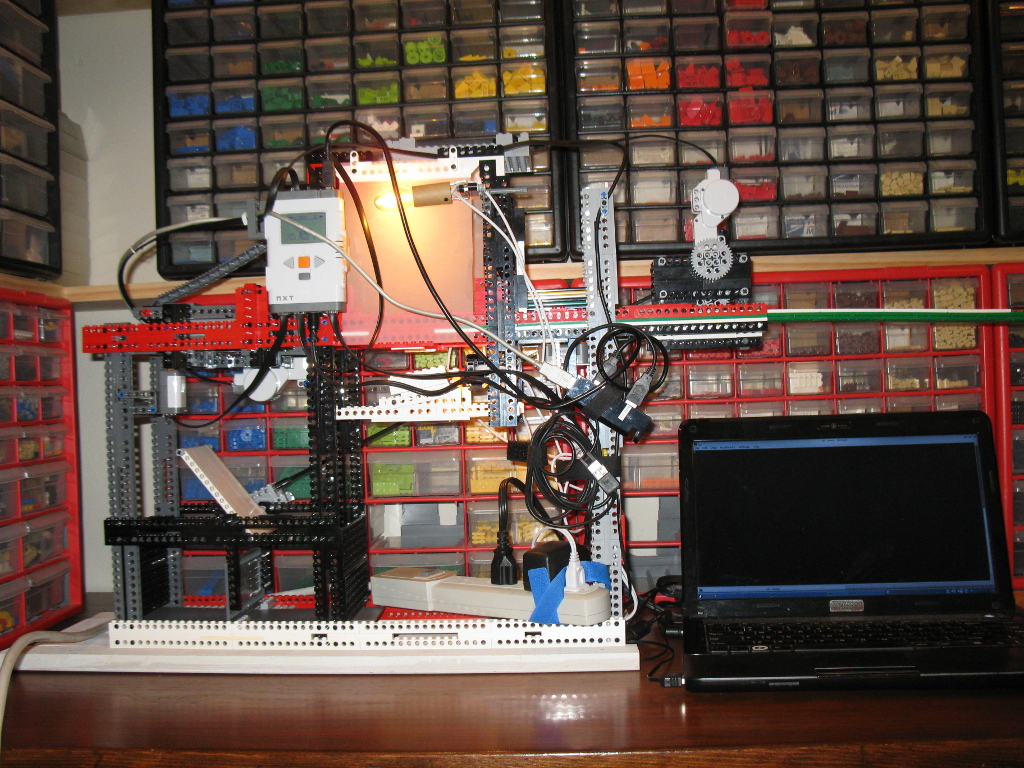

I have a SeaWit 4-port HDMI KVM switch (similar to this one on Amazon) that I suspected might be overheating. I didn't have any fans blowing across it, and it was in a stack of other electronic devices, so I'm not sure blame attaches. Still, I needed some way to get some air across it.

For this type of application, I prefer a blower fan design rather than a propeller fan.

I found a four-pack of small, inexpensive USB-powered 5V 50mm x 50mm x 15mm fans on Amazon.

The KVM switch wasn't designed with fan mounting holes or anything, so I needed some sort of frame assembly to hold them. So of course I reached for my mechanical engineering rapid prototyping kit, better known as my Lego collection.

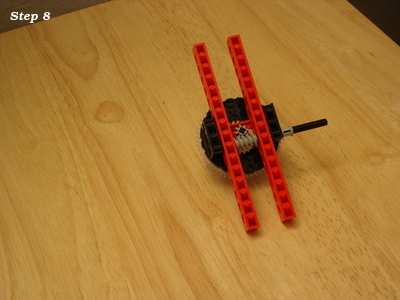

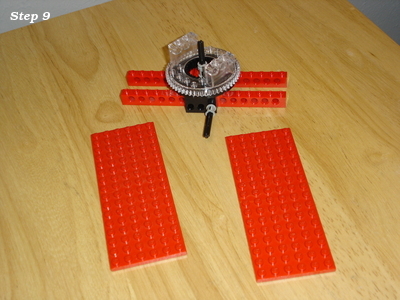

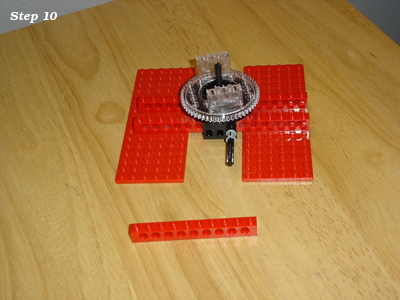

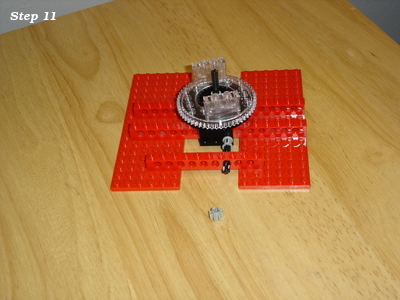

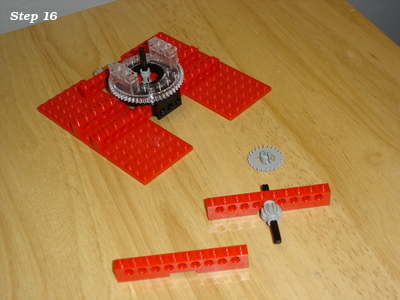

The mounting holes of those are a snug fit for a Technic pin with friction, which can then be used to mount them to a Lego assembly.

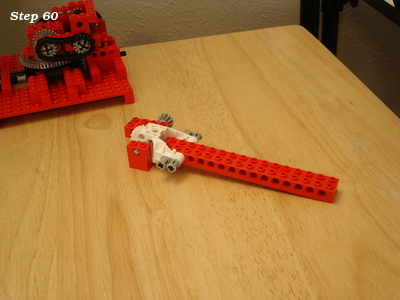

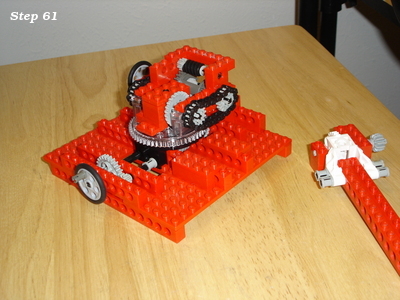

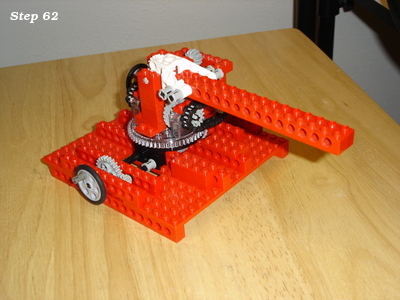

The distance between the mounting holes does not quite align with Lego dimensions, but a Technic beam provides the needed adjustment.

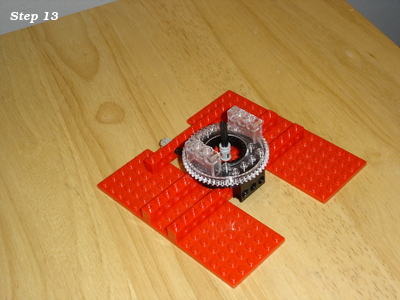

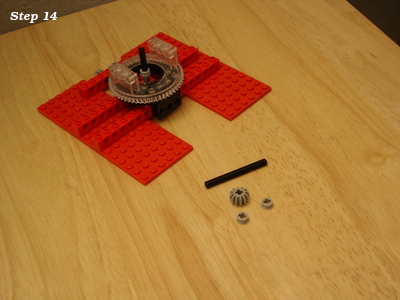

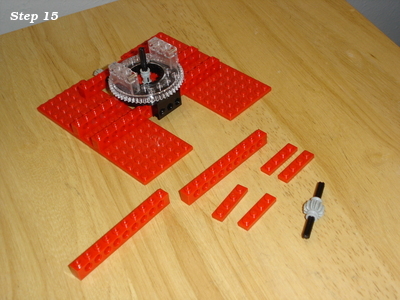

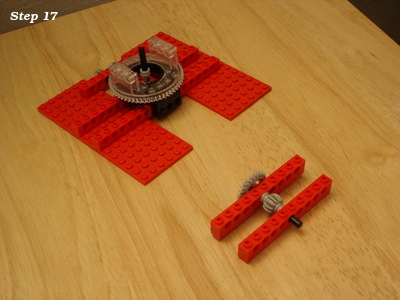

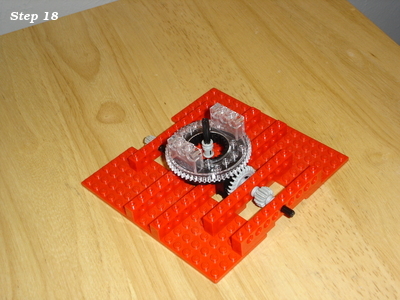

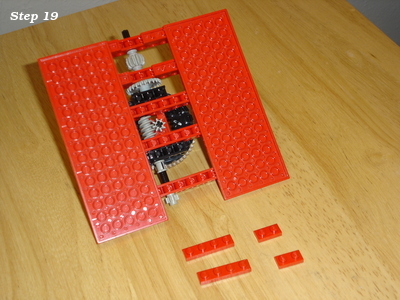

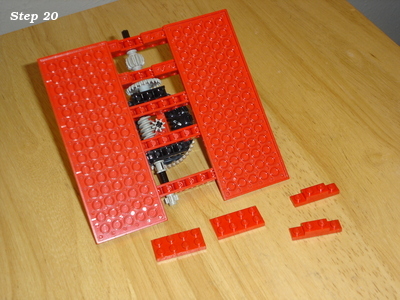

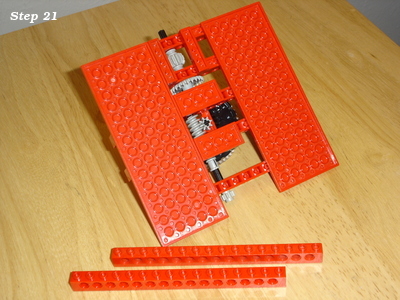

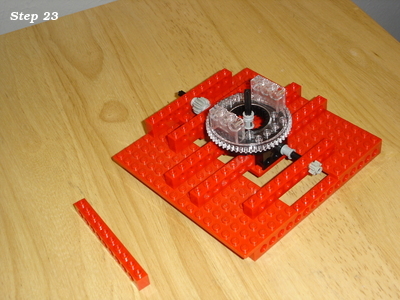

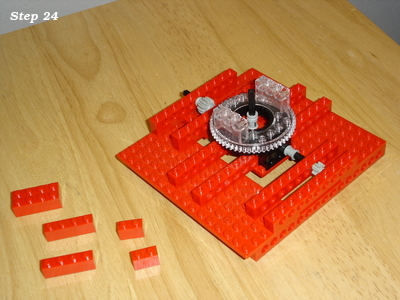

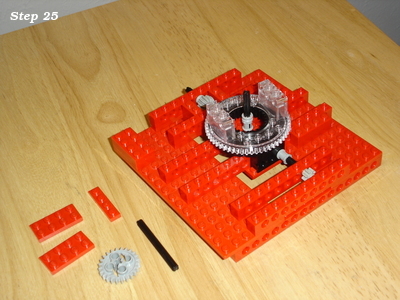

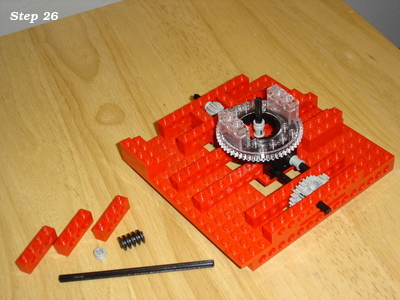

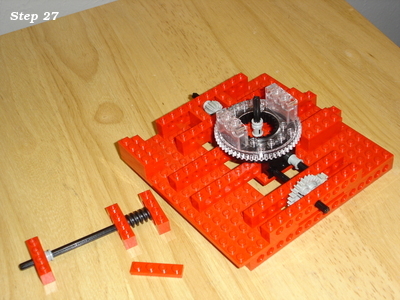

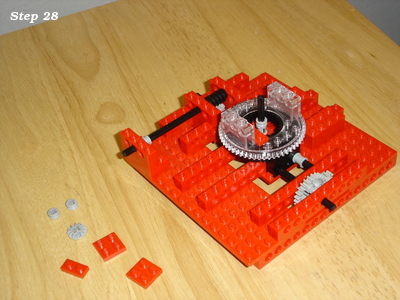

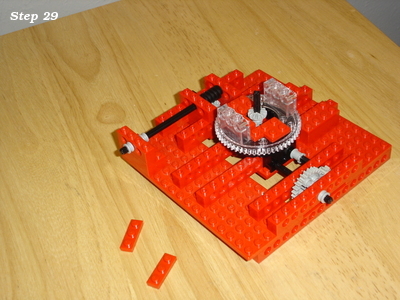

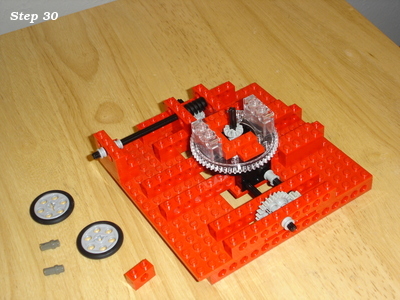

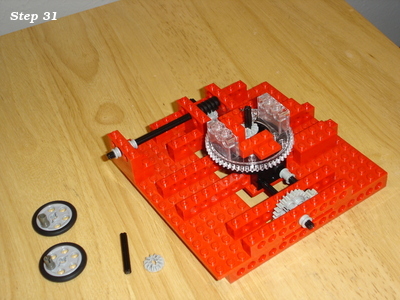

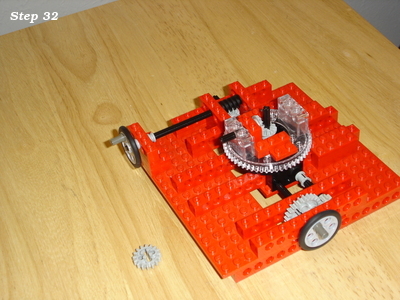

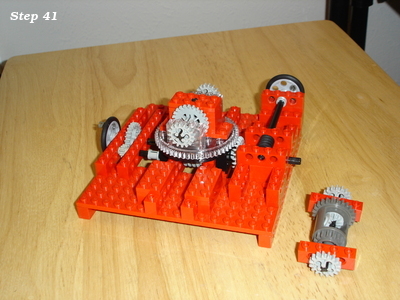

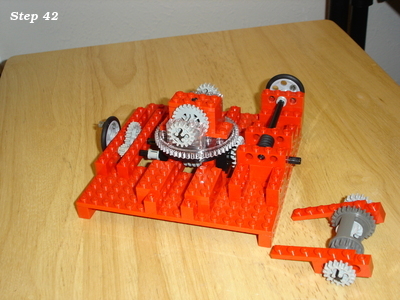

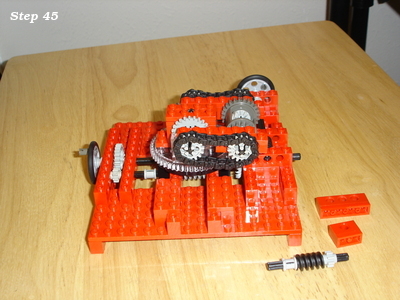

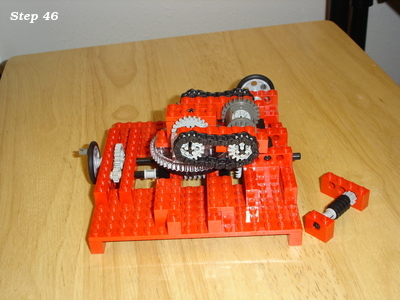

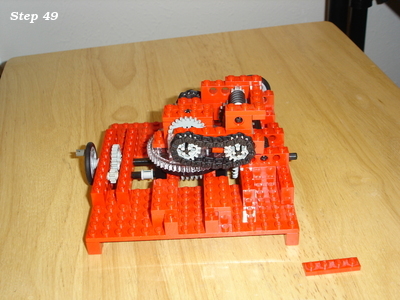

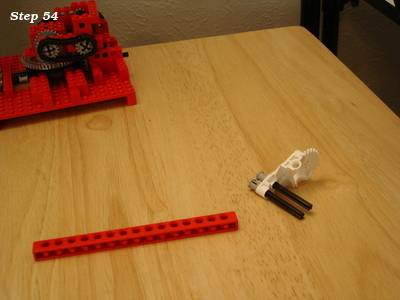

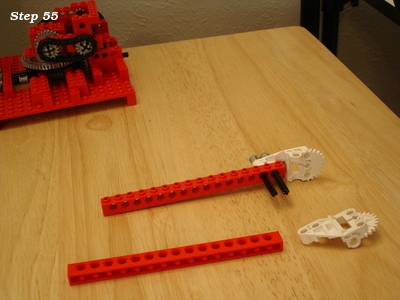

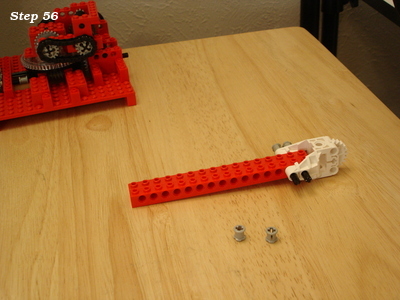

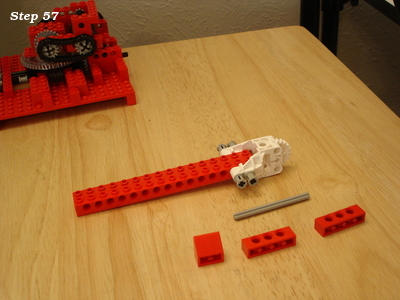

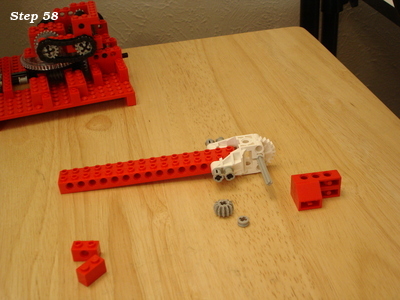

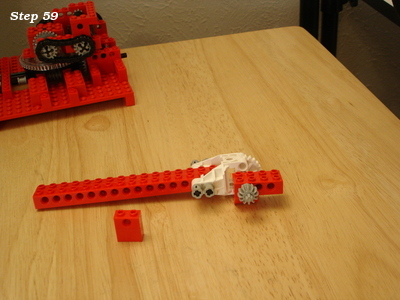

I developed two assembly types. If the fan is positioned to draw air from below (so that small random objects don't fall into the fan blades), then the fan can be above (type 1) or below (type 2) the supporting structure.

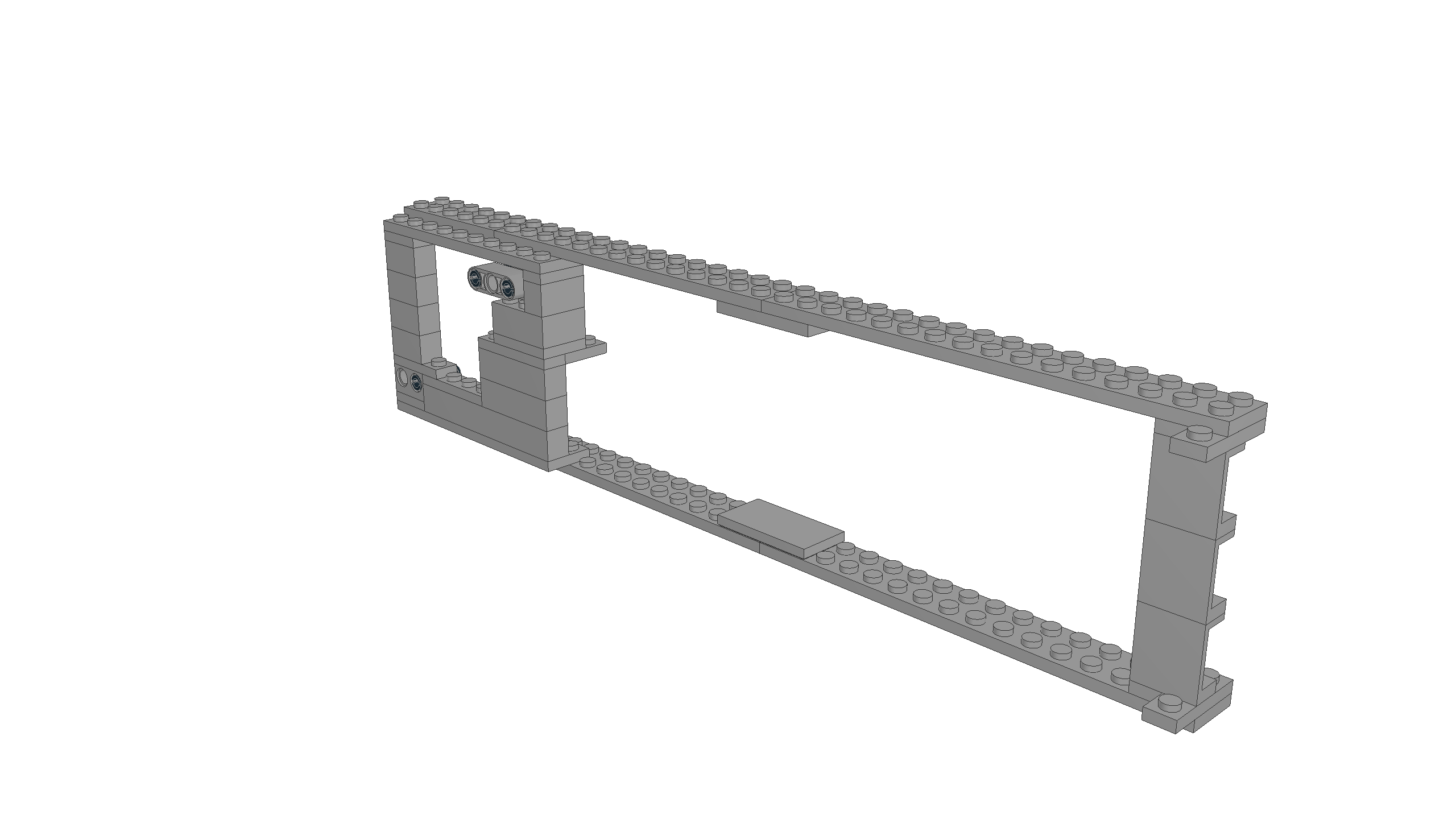

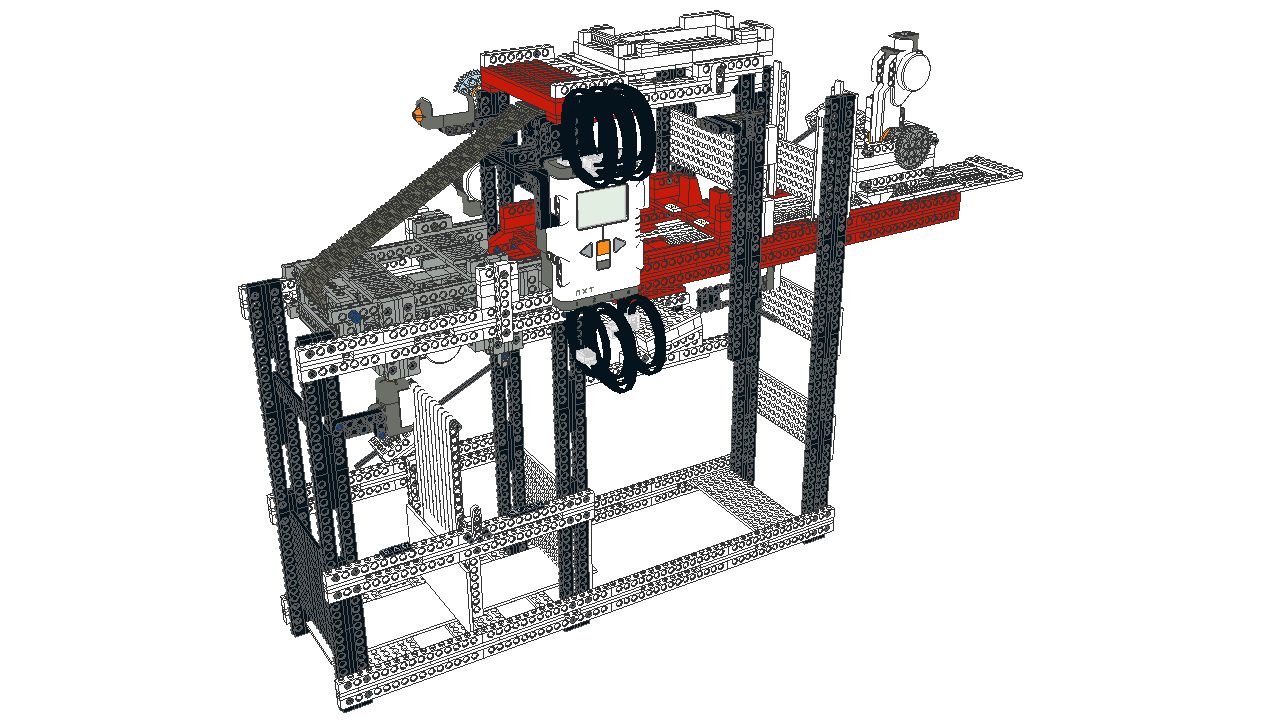

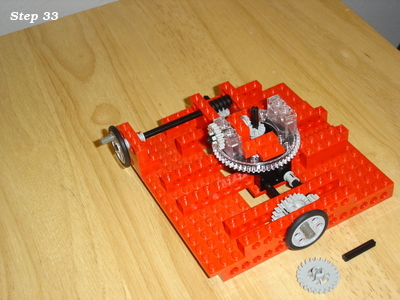

Type 1: Fan above supporting structure

model file and building instructions

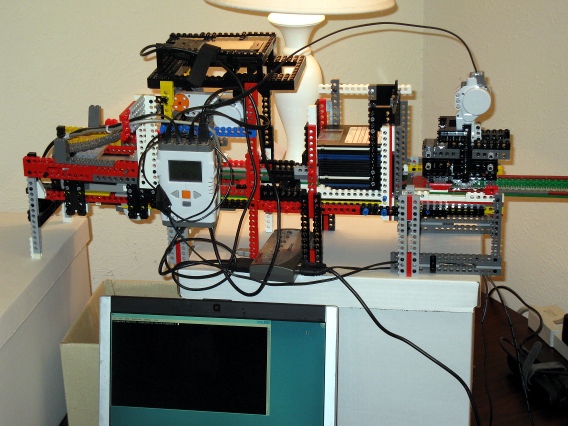

The physical build, with the fan installed:

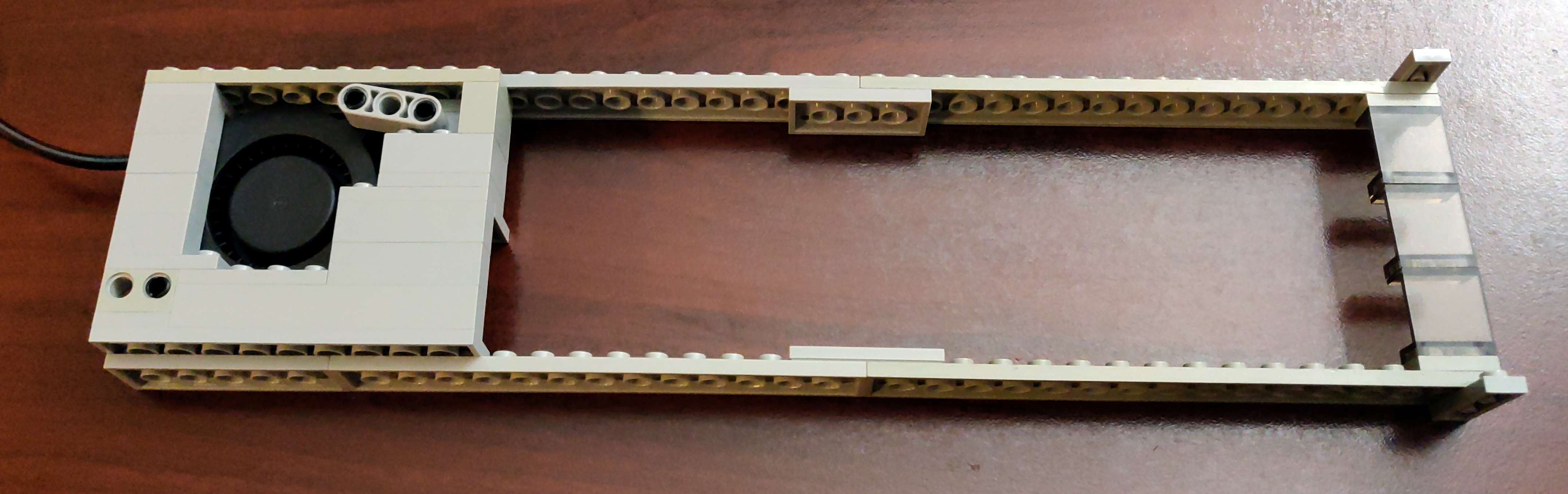

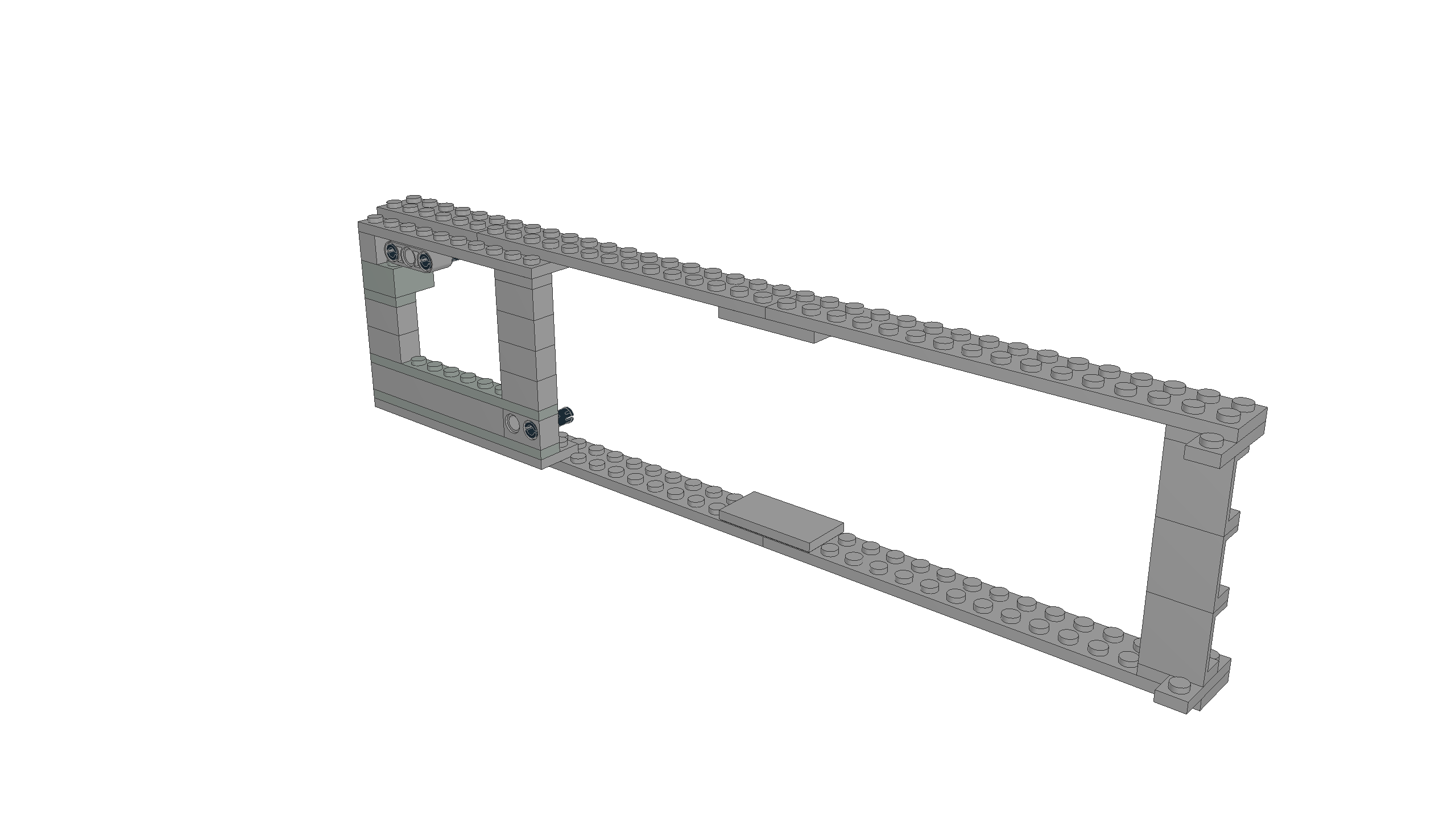

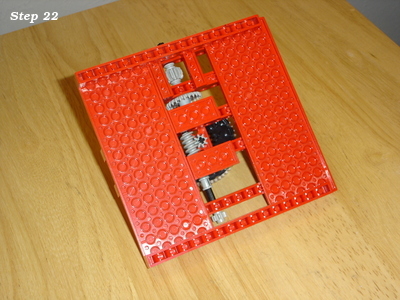

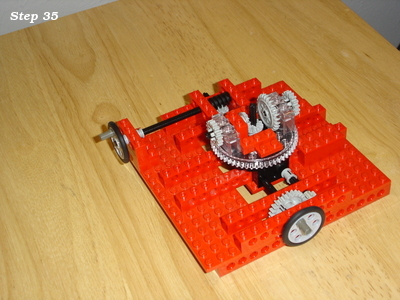

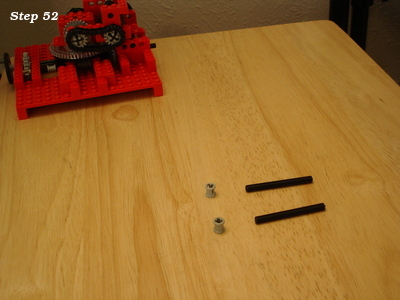

Type 2: Fan below supporting structure

model file and building instructions

The physical build, with the fan installed:

Note that for this build, I adjusted the width of the structure by tacking on a couple extra bricks to the top. It's a trivial change, but made for a better fit around the KVM switch's feet.

This seems to work for cooling the KVM switch, and while two fans is probably overkill, having two assembly types does give me some flexibility.

Sabaton Index updates; new song "Father"

Sabaton released a new song last month related to World War One titled Father and a related history video about Fritz Haber.

I've updated SabatonIndex with the discography and videos they've released of late.

LDraw Parts Library 2022-05 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2022-05 parts library for Fedora 34 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202205-ec1.fc34.src.rpm

ldraw_parts-202205-ec1.fc34.noarch.rpm

ldraw_parts-creativecommons-202205-ec1.fc34.noarch.rpm

ldraw_parts-models-202205-ec1.fc34.noarch.rpm

Grid-based Tiling Window Management, Mark II

A few years ago, I implemented a grid-based tiling window management tool for Linux/KDE that drastically improved my ability to utilize screen real estate on a 4K monitor.

The basic idea is that a 4K screen is divided into 16 cells in a 4x4 grid, and a Full HD screen is divided into 4 cells in a 2x2 grid. Windows can be snapped (Meta-Enter) to the nearest rectangle that aligns with that grid, whether that rectangle is 1 cell by 1 cell, or if it is 2 cells by 3 cells, etc. They can be moved around the grid with the keyboard (Meta-Up, Meta-Down, Meta-Left, Meta-Right). They can be grown by increments of the cell size in the four directions (Ctrl-Meta-Up, Ctrl-Meta-Down, Ctrl-Meta-Left, Ctrl-Meta-Right), and can be shrunk similarly (Shift-Meta-Up, Shift-Meta-Down, Shift-Meta-Left, Shift-Meta-Right).
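The snapping step can be sketched in a few lines. This is not the quicktile script itself, just an illustration of the idea under simplifying assumptions: a single screen with its origin at 0,0, no panels or window frames to account for, and a hypothetical function name `snap_to_grid`.

```python
def snap_to_grid(x, y, w, h, screen_w, screen_h, cols, rows):
    """Snap a window rectangle to the nearest rectangle aligned to the grid.

    Returns (x, y, w, h) in pixels. A window always keeps at least one cell.
    """
    cell_w = screen_w / cols
    cell_h = screen_h / rows

    def snap_axis(start, length, cell, count):
        # Round both edges to the nearest grid line, then clamp to the screen.
        first = round(start / cell)
        last = round((start + length) / cell)
        first = max(0, min(first, count - 1))
        last = max(first + 1, min(last, count))
        return first, last

    c0, c1 = snap_axis(x, w, cell_w, cols)
    r0, r1 = snap_axis(y, h, cell_h, rows)
    return (round(c0 * cell_w), round(r0 * cell_h),
            round((c1 - c0) * cell_w), round((r1 - r0) * cell_h))
```

On a 3840x2160 screen with a 4x4 grid, each cell is 960x540, so a window at 900,500 with size 2000x1000 snaps to 960,540 with size 1920x1080, a 2 cell by 2 cell rectangle. Moving, growing, and shrinking are then just a matter of adding or subtracting one cell from the appropriate edge.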

While simple in concept, it dramatically improves the manageability of a large number of windows on multiple screens.

Since that first implementation, KDE or X11 introduced a change that broke some of the logic in the quicktile code for dealing with differences in behavior between different windows. All windows report location and size information for the part of the window inside the frame. When moving a window, some windows move the window inside the frame to the given coordinates (meaning that you set the window position to 100,100, and then query the location and it reports as 100,100). But other windows move the window _frame_ to the given coordinates (meaning that you set the window position to 100,100, and then query the location and it reports as 104,135). It used to be that we could differentiate those two types of windows because one type would show a client of N/A, and the other type would show a client of the hostname. But now, all windows show a client of the hostname, so I don't have a way to differentiate them.

Fortunately, all windows report their coordinates in the same way, so we can set the window's coordinates to the desired value, get the new coordinates, and if they aren't what were expected, adjust the coordinates we request by the error amount, and try again. That gets the window to the desired location reliably.
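That move-check-adjust loop looks roughly like this. It is a sketch rather than the code in the script: `move` and `query` are hypothetical callables standing in for whatever commands actually move the window and read back its position, and the retry limit is an assumption.

```python
def move_with_correction(move, query, target, max_attempts=3):
    """Move a window to target (x, y), compensating for frame offsets.

    move(x, y) requests a new position; query() returns the (x, y) the
    window reports afterwards. Both are hypothetical stand-ins.
    Returns True once the reported position matches the target.
    """
    request_x, request_y = target
    for _ in range(max_attempts):
        move(request_x, request_y)
        actual_x, actual_y = query()
        error_x = actual_x - target[0]
        error_y = actual_y - target[1]
        if error_x == 0 and error_y == 0:
            return True
        # Shift the next request by the observed error and try again.
        request_x -= error_x
        request_y -= error_y
    return False
```

For a window that reports 104,135 after being asked to move to 100,100, the second request becomes 96,65, which lands it at 100,100. A window that already honors the coordinates exits after the first pass.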

The downside is that you do see the window move to the wrong place and then shift to the right place. Fixing that would require finding some characteristic that can differentiate between the two types of windows. It does seem to be consistent in terms of what program the window is for, and might be a GTK vs Qt difference or something. Alternatively, tracking the error correction required for each window could improve behavior by making a proactive adjustment after the first move of a window. But that requires maintaining state from one call of quicktile to the next, which would entail saving information to disk (and then managing the life-cycle of that data), or keeping it in memory using a daemon (and managing said daemon). For the moment, I don't see the benefit being worth that level of effort.

Here is the updated quicktile script.

To use the tool, you need to set up global keyboard shortcuts for the various quicktile subcommands. To make that easier, I created an importable quicktile shortcuts config file for KDE.

Of late I have also noticed that some windows may get rearranged when my laptop has the external monitor connected or disconnected. When that happens, I frequently wind up with a large number of windows with odd shapes and in odd locations. Clicking on each window, hitting Meta-Enter to snap it to the grid, and then moving it out of the way of the next window gets old very quickly. To more easily get back to some sane starting point, I added a quicktile snap all subcommand which will snap all windows on the current desktop to the grid. The shortcuts config file provided above ties that action to Ctrl-Meta-Enter.
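Finding the windows to snap is mostly a matter of filtering a window list by desktop. As a sketch, assuming the list comes from `wmctrl -l` (whose columns are window id, desktop number, client machine, and title), a helper like the hypothetical `windows_on_desktop` below would pick out the ids to process; the actual script may gather them differently.

```python
def windows_on_desktop(wmctrl_listing, desktop):
    """Return the window ids on one desktop from `wmctrl -l` style output."""
    ids = []
    for line in wmctrl_listing.splitlines():
        # Columns: window id, desktop number, client machine, title
        fields = line.split(None, 3)
        if len(fields) < 3:
            continue
        try:
            number = int(fields[1])
        except ValueError:
            continue
        if number == desktop:
            ids.append(fields[0])
    return ids
```

Each returned id can then be snapped in turn, exactly as Meta-Enter would do for the focused window.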

This version works on Fedora 34; I have not tested on other distributions.

Sabaton Index updates

Sabaton released a few new lyric videos and a couple of new history videos. The SabatonIndex is updated with links to that new content.

LDraw Parts Library 2022-03 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2022-03 parts library for Fedora 34 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202203-ec1.fc34.src.rpm

ldraw_parts-202203-ec1.fc34.noarch.rpm

ldraw_parts-creativecommons-202203-ec1.fc34.noarch.rpm

ldraw_parts-models-202203-ec1.fc34.noarch.rpm

Sabaton "The War To End All Wars" album released

Sabaton released a new album this week with the theme of World War One titled The War To End All Wars. A previous album The Great War was also about WW1; the new album looks at another set of stories from that era, including "Christmas Truce", the story of a truce made by the ground forces on Christmas Eve. I've updated SabatonIndex with the discography and videos they've released for the new album.

Random Username Generator

Sometimes you need a username for a service, and you may not want it to be tied to your name. You could try to come up with something off the top of your head, but while that might seem random, it's still a name you would think of, and the temptation will always be to choose something meaningful to you. So you want to create a random username. On the other hand, "gsfVauIZLuE1s4gO" is extremely awkward as a username. It'd be better to have something more memorable; something that might seem kinda normal at a casual glance.

You could grab a random pair of lines from /usr/share/dict/words, but that includes prefixes, acronyms, proper nouns, and scientific terms. Even without those, "stampedingly-isicle" is a bit "problematical". So I'd rather use "adjective"-"noun". I went looking for word lists that included parts of speech information, and found a collection of English words categorized by various themes, which are grouped by parts of speech. The list is much smaller, but the words are also going to be more common, and therefore more familiar.

Using this word list, and choosing one adjective and one noun yields names like "gray-parent", "boxy-median", and "religious-tree". The smaller list means that such a name only has about 20 bits of randomness, but for a username, that's probably sufficient.

On the other hand, we could use something like this for passwords like "correct horse battery staple", but in the form of adjective-adjective-adjective-noun. Given the part-of-speech constraint, 3 adjectives + 1 noun is about 40 bits of entropy. Increasing that to 4 adjectives and 1 noun gets about 49 bits of entropy.
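The arithmetic behind those figures is just a sum of logarithms: each word contributes log2 of the size of the list it was drawn from. Here is a minimal sketch, assuming each word is picked uniformly and independently (so adjectives may repeat). The function name `entropy_bits` is my own, and the list sizes in the usage note are made-up round numbers, not the sizes of the actual word list.

```python
import math


def entropy_bits(num_adjectives, adjective_count, noun_count):
    """Bits of entropy for num_adjectives adjectives followed by one noun.

    adjective_count and noun_count are the sizes of the word lists.
    Assumes every word is chosen uniformly and independently.
    """
    return num_adjectives * math.log2(adjective_count) + math.log2(noun_count)
```

With hypothetical lists of 1024 adjectives and 1024 nouns, every word is worth exactly 10 bits, so one adjective plus a noun gives 20 bits and three adjectives plus a noun gives 40. Smaller lists give proportionally less per word.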

So of course, I implemented such a utility in Python:

usage: generate-username [-h] [--adjectives ADJECTIVES] [--divider DIVIDER] [--bits BITS] [--verbose]

Generate a random-but-memorable username.

optional arguments:

-h, --help show this help message and exit

--adjectives ADJECTIVES, -a ADJECTIVES

number of adjectives (default: 1)

--divider DIVIDER character or string between words (default: )

--bits BITS minimum bits of entropy (default: 0)

--verbose be chatty (default: False)

As the word list grows, the number of bits of entropy per word will increase, so the code calculates that from the data it actually has. It allows you to specify the number of adjectives and the amount of entropy desired so you can choose something appropriate for the use case.
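The core of that logic can be sketched as follows. This is an illustration rather than the utility's actual source: the function names, the "-" default divider, and the use of the `secrets` module for the random choice are all assumptions on my part.

```python
import math
import secrets


def adjectives_needed(min_bits, adjective_count, noun_count):
    """Smallest number of adjectives so adjectives + one noun reach min_bits."""
    if adjective_count < 2 or noun_count < 1:
        raise ValueError("word lists are too small to add entropy")
    remaining = min_bits - math.log2(noun_count)
    if remaining <= 0:
        return 0
    return math.ceil(remaining / math.log2(adjective_count))


def generate_name(adjectives, nouns, num_adjectives=1, divider="-", min_bits=0):
    """Build adjective(s) + noun, using enough adjectives to reach min_bits."""
    count = max(num_adjectives,
                adjectives_needed(min_bits, len(adjectives), len(nouns)))
    # secrets.choice draws from the OS's cryptographic random source.
    words = [secrets.choice(adjectives) for _ in range(count)]
    words.append(secrets.choice(nouns))
    return divider.join(words)
```

Because the entropy per word is computed from `len()` of the lists actually loaded, swapping in a larger word list automatically reduces the number of adjectives needed for a given number of bits.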

That said, 128 bits of entropy does start to get a little unwieldy with 13 adjectives and one noun: "concrete-inverse-sour-symmetric-saucy-stone-kind-flavorful-roaring-vertical-human-balanced-ebony-gofer". Whoo-boy; that's one weird gofer. That could double as a crazy writing prompt.

If the word list had adverbs, we might be able to make this even more interesting. For that matter, it might be fun to create a set of "Mad Libs"-like patterns "The [adjective] [noun] [adverb] [verb] a [adjective] [noun]." Verb tenses and conjugations would make that more difficult to generate, but could yield quite memorable passphrases. Something to explore some other time.

Hopefully this will be useful to others.

LDraw Parts Library 2022-01 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2022-01 parts library for Fedora 34 to install to /usr/share/ldraw; it should be straight-forward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202201-ec1.fc34.src.rpm

ldraw_parts-202201-ec1.fc34.noarch.rpm

ldraw_parts-creativecommons-202201-ec1.fc34.noarch.rpm

ldraw_parts-models-202201-ec1.fc34.noarch.rpm

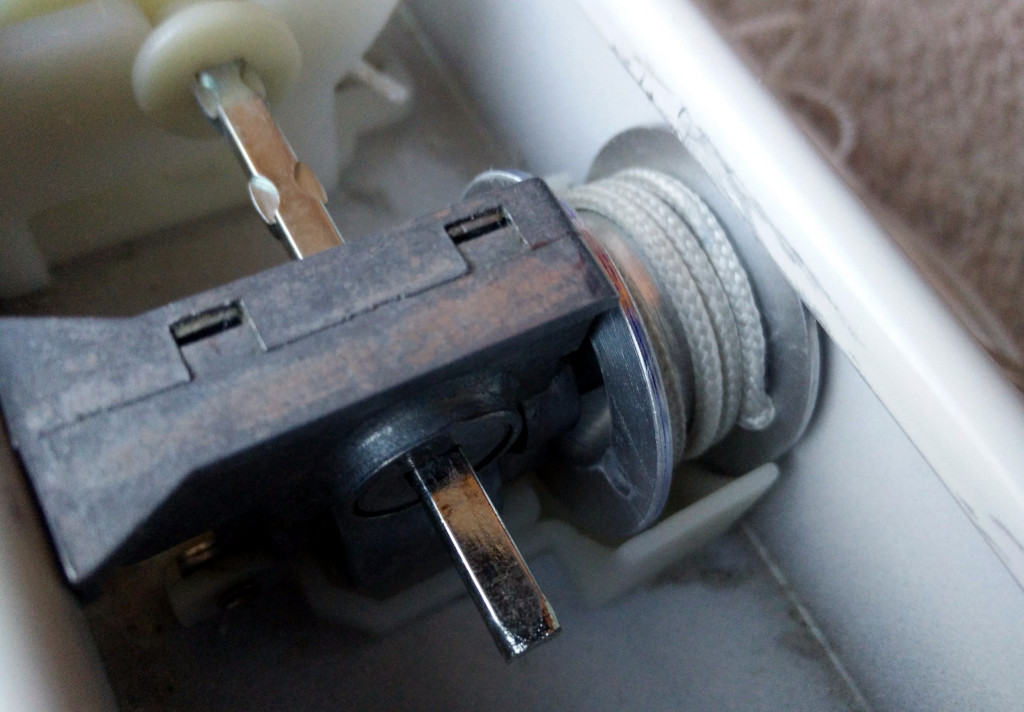

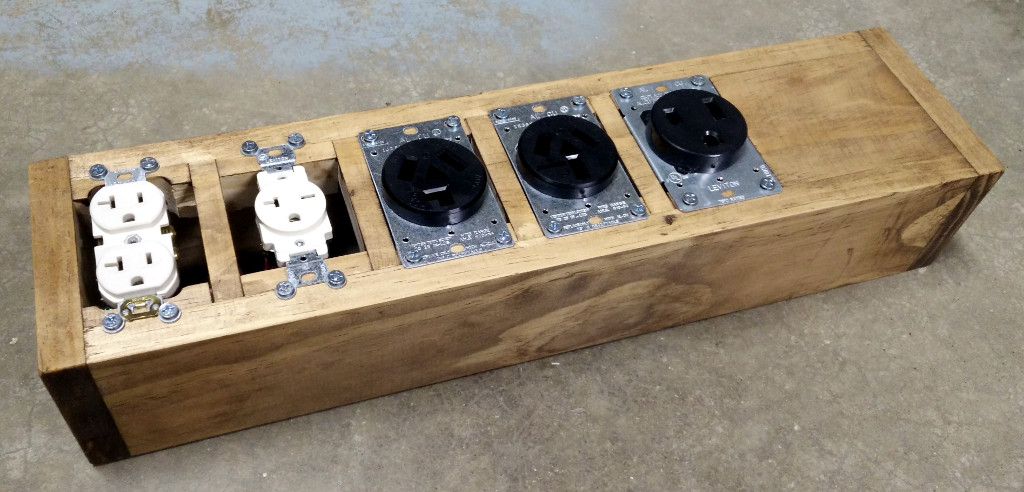

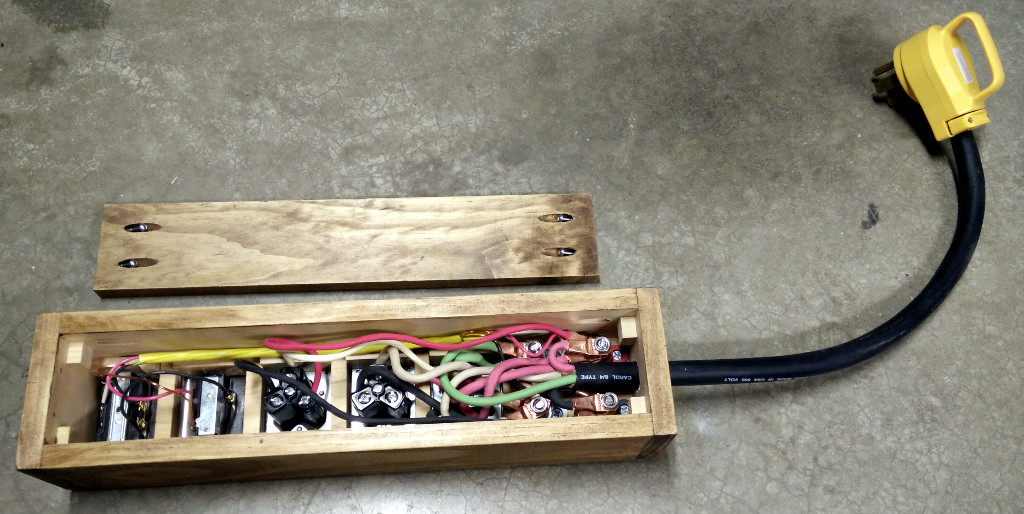

Remote Power Switches

Being able to turn a vacuum cleaner or fan on and off from across a room can make life much easier while working on a project. I had previously made an extension cord which incorporated a double lightswitch and had found that I was dragging that box around with the switch and the vacuum cleaner power cord plugged in, and that was getting awkward. So I created a dedicated "remote" switch power cable to make it easier to use.

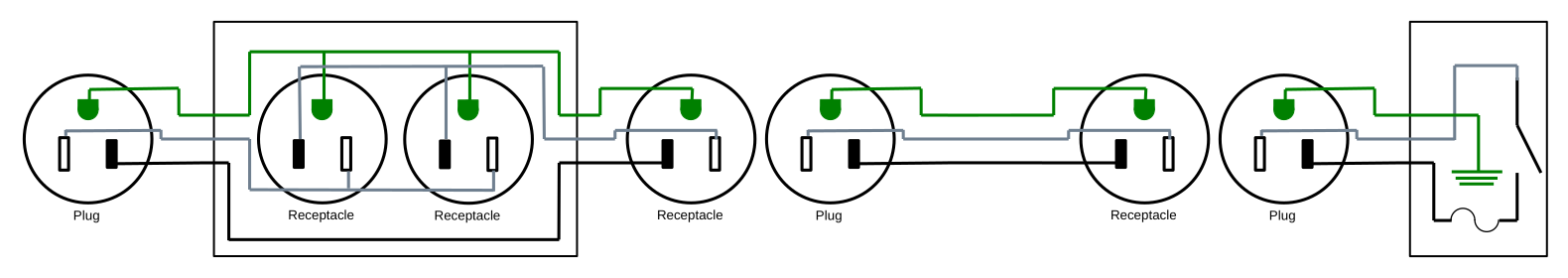

I'm going to show four designs for such a remote switch. One of them is a reasonable design. Three of them add functionality, but also introduce hazards, and therefore I must recommend against them. But I find those interesting enough to explore.

All components use NEMA5-15 receptacles and plugs.

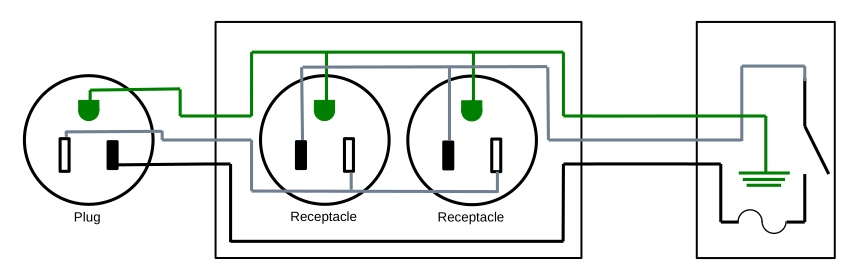

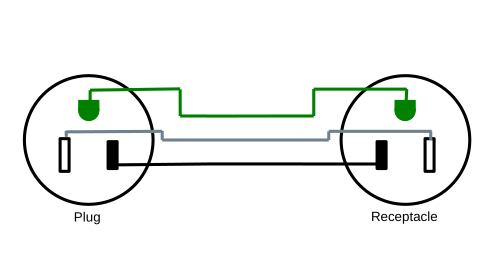

Basic Remote Switch

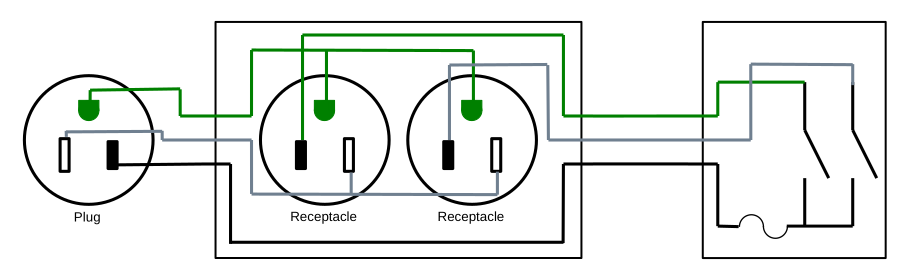

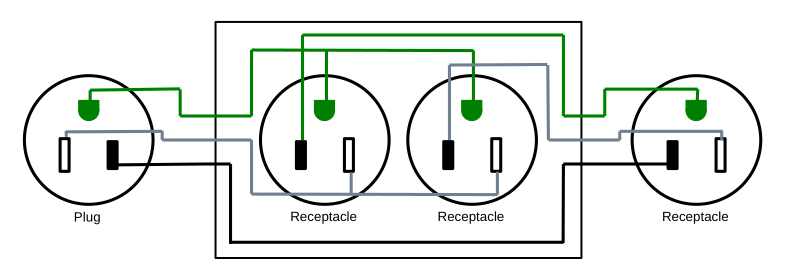

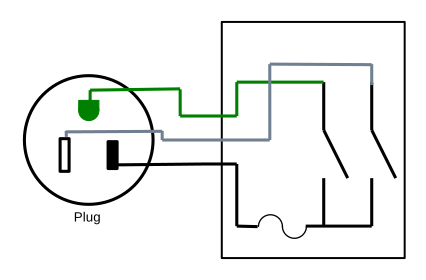

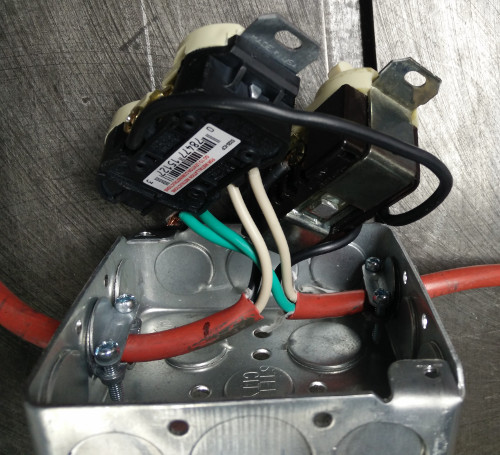

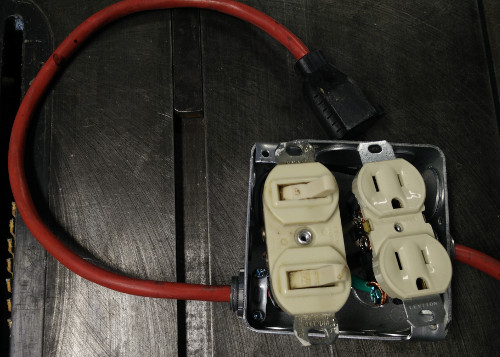

Beginning with the simplest design, we have a switch that controls a receptacle:

Complete parts list:

| Part | Qty |

|---|---|

| single-width deep electrical box | 2 |

| electrical box outlet cover plate | 1 |

| electrical box switch cover plate | 1 |

| conduit clamp grommet | 3 |

| heavy-duty extension cord | 1 |

| NEMA5-15 power outlet | 1 |

| light switch | 1 |

| fuse holder | 1 |

| fuse | 1 |

| wire nut | 1 |

| 10-12 AWG insulated female spade crimp terminal | 2 |

| 4-5" of stranded wire | 1 |

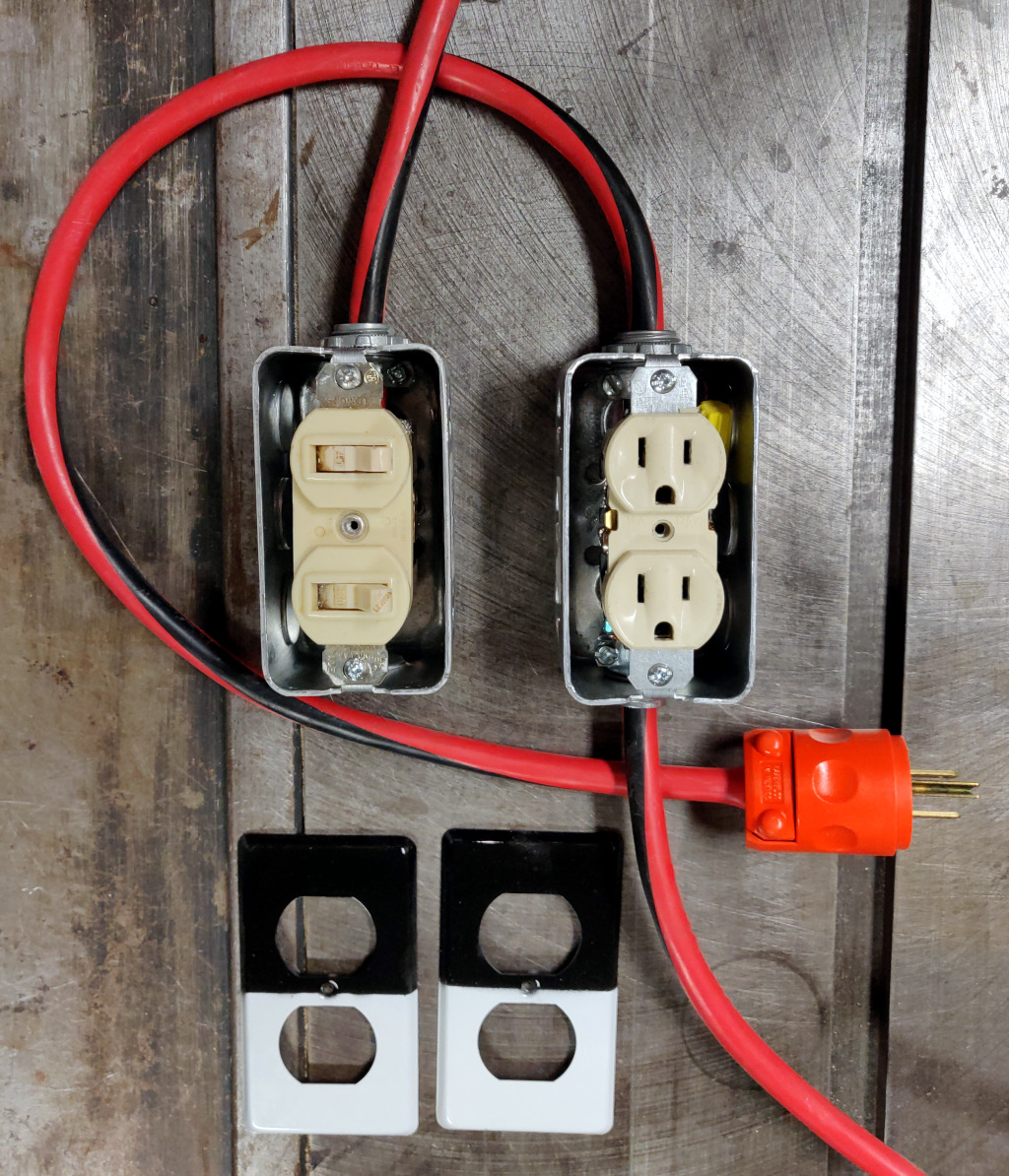

Cut the extension cord so you have the plug end connected to about 2 feet of wire, then cut the receptacle off the other part of the extension cord. This will give you a pigtail that can plug into a receptacle, and a length of power cable.

To create a hole in which to mount the fuse holder in the switch module, drill/dremel a hole of matching size in the electrical box knock-out plate opposite the knock-out plate removed for the power cable.

Wire the contraption up following the diagram above. The pigtail with the plug is wired to the receptacle in the box. The wire nut is used inside the receptacle box to connect the hot wire from the pigtail to the hot wire in the power cable. The power cable runs from the receptacle box to the switch box. The power cable hot wire connects to the fuse holder in the switch box with a crimp terminal, and then the other lead on the fuse holder connects to the switch using a crimp terminal and the short length of stranded wire. The ground wire connects to the ground terminal on the switch.

Be sure to use a multimeter to check that your wiring is correct and you have not introduced a short before you plug it into power.

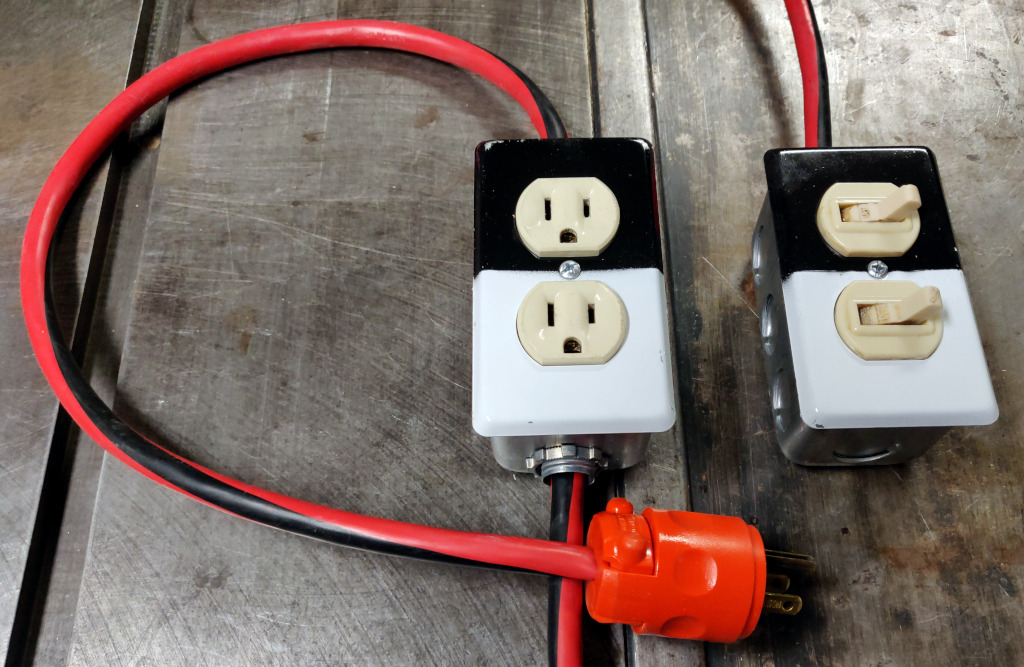

This is the reasonable design; it gives you a switch that controls a power outlet up to some number of feet away, depending on the size of the extension cord you started with. It solves the problem at hand. If you're here looking to solve that problem, this is the design to use, and you can skip the rest of the blog post. The remaining designs below, while more functional, introduce hazards that I cannot recommend.

But if you have an engineering mindset, come with me as I explore them anyway.

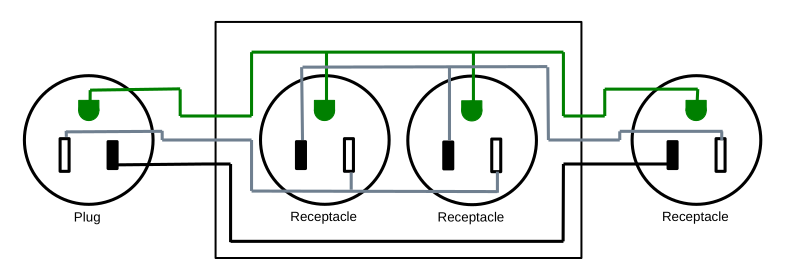

Extensible Remote Switch

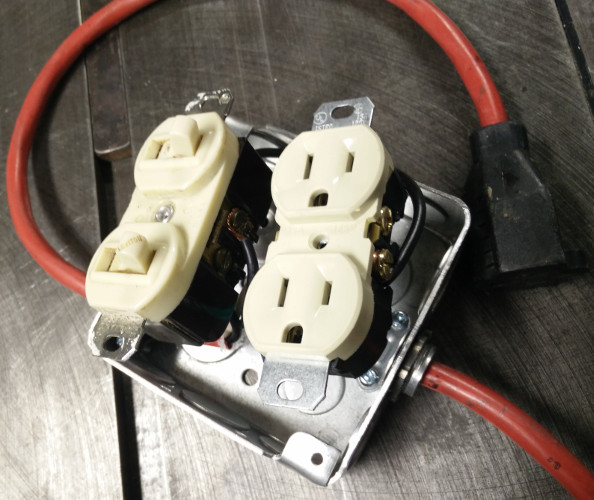

The above design is quite functional, but you have to commit to the distance between the receptacle and the switch. We can add a receptacle and plug into the design between the two like this:

And now you can put a standard extension cord of almost arbitrary length between the receptacle box and the switch.

But this also introduces a hazard. Now the switch is its own module, and could accidentally be plugged into a regular power outlet and flipped "on", directly creating a short circuit. While doing so should throw a breaker or blow the fuse in the switch module, there is risk of problems, so I recommend against building this.

Complete parts list:

| Part | Qty |

|---|---|

| single-width deep electrical box | 2 |

| electrical box outlet cover plate | 1 |

| electrical box switch cover plate | 1 |

| conduit clamp grommet | 3 |

| heavy-duty extension cord | 1 |

| NEMA5-15 power outlet | 1 |

| light switch | 1 |

| fuse holder | 1 |

| fuse | 1 |

| wire nut | 1 |

| NEMA5-15 receptacle | 1 |

| NEMA5-15 plug | 2 |

| 10-12 AWG insulated female spade crimp terminal | 2 |

| 4-5" of stranded wire | 1 |

For this design, you make a different set of cuts on the power cord. Cut the extension cord so you have the plug end connected to about 2 feet of wire. From the other portion of the extension cord, cut two 2-foot lengths from the cut end to give you two 2-foot sections of power cable, with the remainder of the power cable still connected to the original receptacle.

Build a (shorter) extension cord:

Sub-assembly parts list:

| Part | Qty |

|---|---|

| heavy-duty extension cord receptacle + wire | 1 |

| NEMA5-15 plug | 1 |

Wire one of the plugs to the remaining part of the extension cord (which has the extension cord's original receptacle on it) to give you a (shortened) extension cord.

Build the receptacle module:

Sub-assembly parts list:

| Part | Qty |

|---|---|

| single-width deep electrical box | 1 |

| electrical box outlet cover plate | 1 |

| conduit clamp grommet | 2 |

| heavy-duty extension cord plug + 2' wire | 1 |

| heavy-duty extension cord 2' wire | 1 |

| NEMA5-15 power outlet | 1 |

| wire nut | 1 |

| NEMA5-15 receptacle | 1 |

The wire nut is used inside the receptacle box to connect the hot wire from the short plug pigtail to the hot wire going to the receptacle.

Build the switch box module:

Sub-assembly parts list:

| Part | Qty |

|---|---|

| single-width deep electrical box | 1 |

| electrical box switch cover plate | 1 |

| conduit clamp grommet | 1 |

| heavy-duty extension cord 2' wire | 1 |

| light switch | 1 |

| fuse holder | 1 |

| fuse | 1 |

| NEMA5-15 plug | 1 |

| 10-12 AWG insulated female spade crimp terminal | 2 |

| 4-5" of stranded wire | 1 |

Be sure to use a multimeter to check that your wiring is correct and you have not introduced a short before you plug any of this into power.

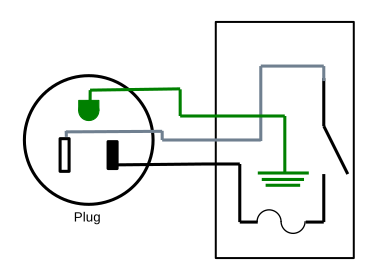

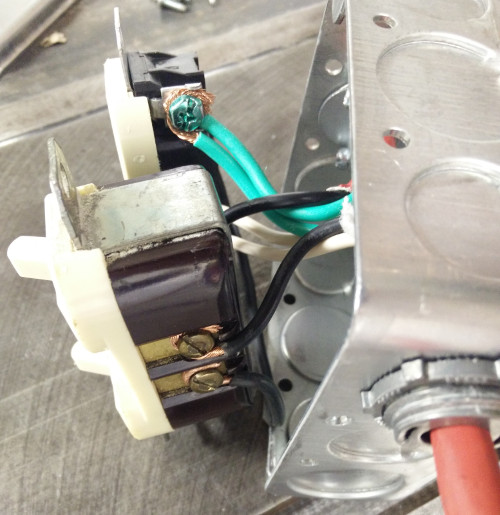

Double Remote Switch

A different functionality enhancement is to make the two receptacles in the receptacle box independent of each other and put two switches in the control box, giving two controls in one device.

This also introduces a hazard, though a more subtle one; in this case we use the ground wire between the receptacle box and the control box to carry load current. The ground wire may be a smaller gauge wire than the neutral and hot conductors, and thus risk overheating. Additionally, the control box which you will be handling is no longer grounded; if something goes wrong electrically, you risk electrocution.

| Part | Qty |

|---|---|

| single-width deep electrical box | 2 |

| electrical box cover plate | 2 |

| conduit clamp grommet | 3 |

| heavy-duty extension cord | 1 |

| NEMA5-15 power outlet | 1 |

| double light switch | 1 |

| fuse holder | 1 |

| fuse | 1 |

| spray paint, white | 1 |

| spray paint, black | 1 |

| wire nut | 1 |

| 10-12 AWG insulated female spade crimp terminal | 2 |

| 4-5" of stranded wire | 1 |

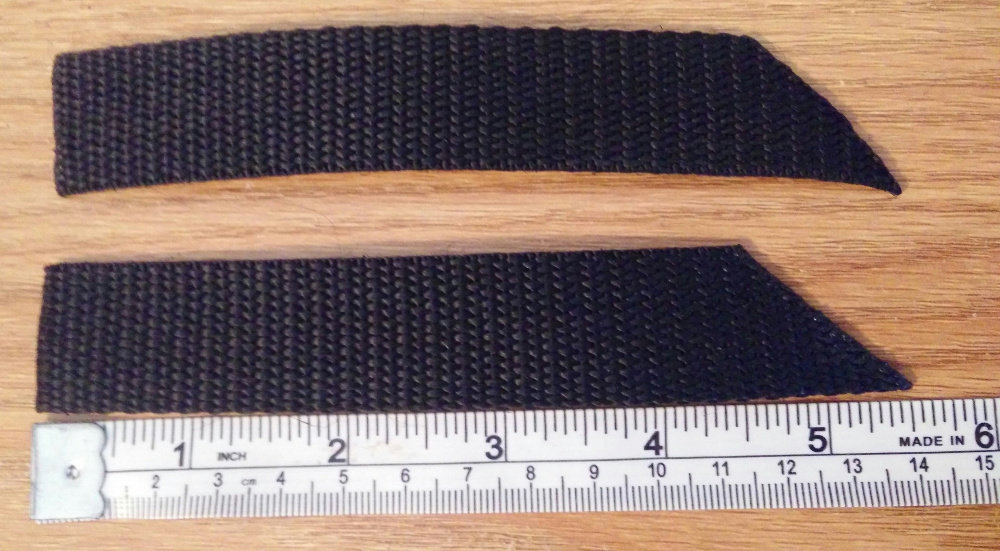

Lightly sand the faceplates,

and paint one half white and one half black.

For this design, you make the same cuts in the extension cord as for the single remote switch design. Cut the extension cord so you have the plug end connected to about 2 feet of wire, then cut the receptacle off the other part of the extension cord. This will give you a pigtail that can plug into a receptacle, and a length of power cable.

Wire the contraption up following the diagram above. The wire nut is used to connect the hot wire inside the receptacle box.

Note that you will likely need to snap off a metal tab connecting the hot terminals of the receptacles to make them independently controllable.

Be sure to use a multimeter to check that your wiring is correct and you have not introduced a short before you plug it into power.

Once the painted faceplates have dried, install them so that the white switch controls the white receptacle, and the black switch controls the black receptacle.

Double Extensible Remote Switch

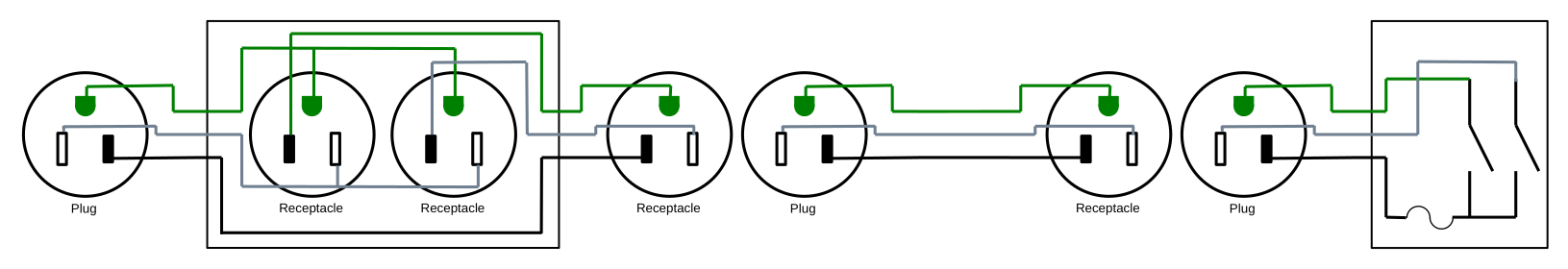

The two previous enhancements can be combined to yield the most functional, but also the most hazardous version of this remote power switch:

| Part | Qty |

|---|---|

| single-width deep electrical box | 2 |

| electrical box cover plate | 2 |

| conduit clamp grommet | 3 |

| heavy-duty extension cord | 1 |

| NEMA5-15 power outlet | 1 |

| double light switch | 1 |

| fuse holder | 1 |

| fuse | 1 |

| spray paint, white | 1 |

| spray paint, black | 1 |

| wire nut | 1 |

| NEMA5-15 receptacle | 1 |

| NEMA5-15 plug | 2 |

| 10-12 AWG insulated female spade crimp terminal | 2 |

| 4-5" of stranded wire | 1 |

Paint the faceplates as for the previous build.

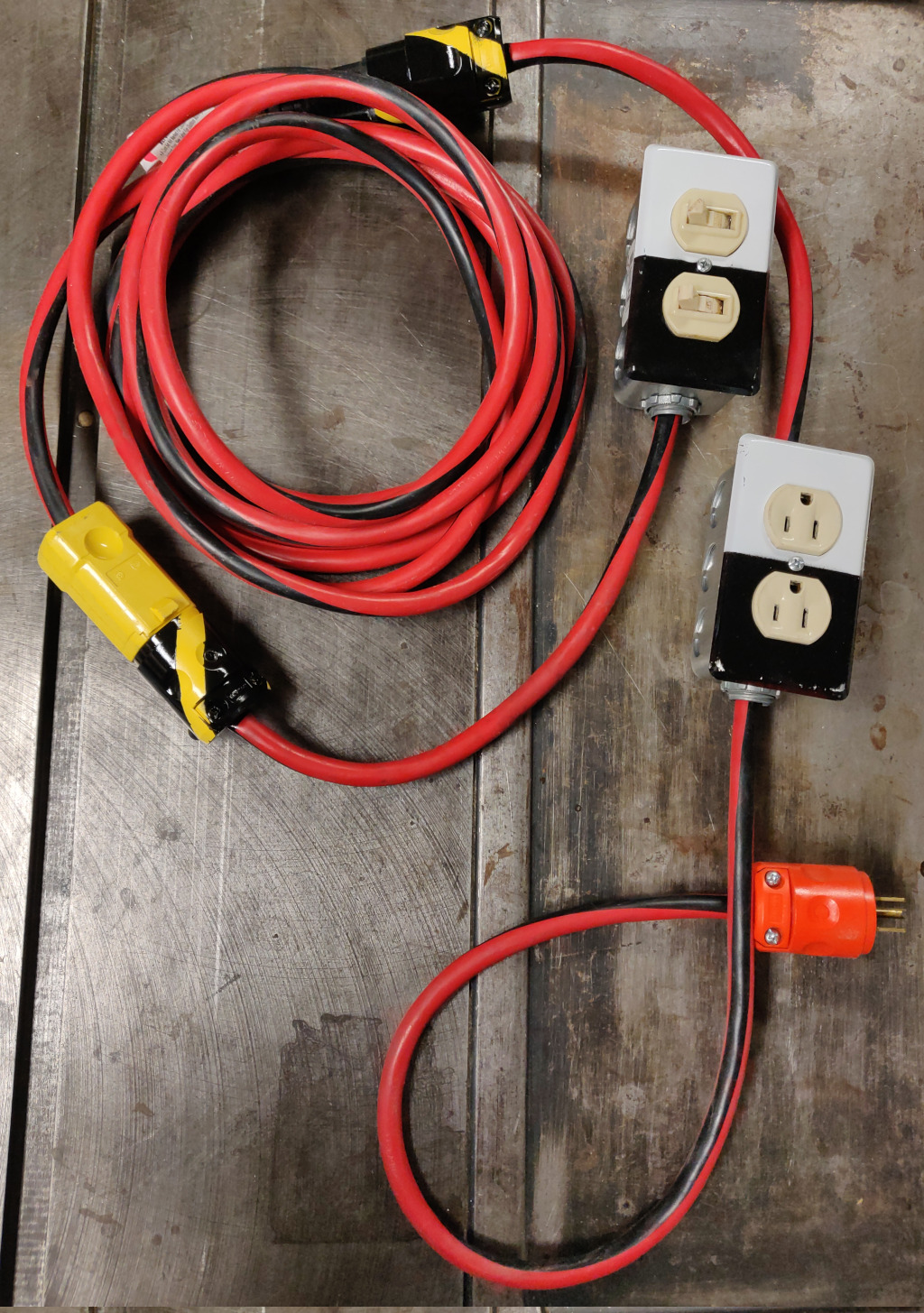

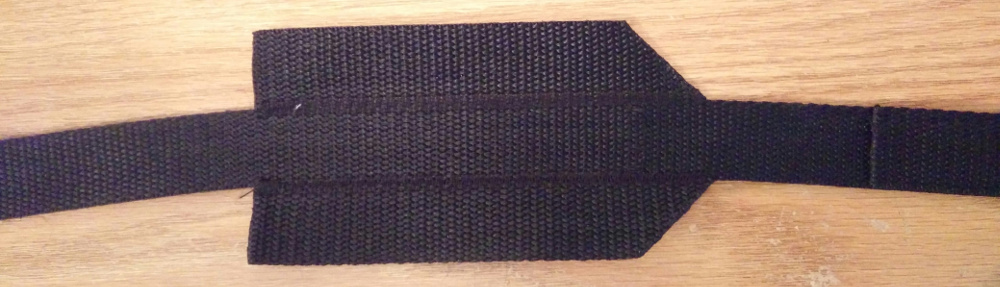

In an effort to mitigate one of the hazards of this design, I took the plug and receptacle in the middle of this system and painted them to make them stand out as somehow different from normal plugs and receptacles. Before building everything, I plugged them into each other and wrapped two pieces of electrical tape around them in a spiral to create something resembling a warning stripe. Since they were yellow plastic, I sprayed them with black paint, then removed the electrical tape. That gave a visually striking touch to those connectors:

For this design, you make the same cuts in the extension cord as for the single extensible remote switch design. Cut the extension cord so you have the plug end connected to about 2 feet of wire. From the other portion of the extension cord, cut two 2-foot lengths from the cut end to give you two 2-foot sections of power cable, with the remainder of the power cable still connected to the original receptacle.

Build a (shorter) extension cord:

Sub-assembly parts list:

| Part | Qty |

|---|---|

| heavy-duty extension cord receptacle + wire | 1 |

| NEMA5-15 plug | 1 |

Wire one of the plugs to the remaining part of the extension cord (which has the extension cord's original receptacle on it) to give you a (shortened) extension cord.

Build the receptacle module:

Sub-assembly parts list:

| Part | Qty |

|---|---|

| single-width deep electrical box | 1 |

| electrical box outlet cover plate | 1 |

| conduit clamp grommet | 2 |

| heavy-duty extension cord plug + 2' wire | 1 |

| heavy-duty extension cord 2' wire | 1 |

| NEMA5-15 power outlet | 1 |

| wire nut | 1 |

| NEMA5-15 receptacle | 1 |

The wire nut is used inside the receptacle box to connect the hot wire from the short plug pigtail to the hot wire going to the receptacle.

Note that you will likely need to snap off a metal tab connecting the hot terminals of the receptacles to make them independently controllable.

Build the switch box module:

Sub-assembly parts list:

| Part | Qty |

|---|---|

| single-width deep electrical box | 1 |

| electrical box outlet cover plate | 1 |

| conduit clamp grommet | 1 |

| heavy-duty extension cord 2' wire | 1 |

| double light switch | 1 |

| fuse holder | 1 |

| fuse | 1 |

| NEMA5-15 plug | 1 |

| 10-12 AWG insulated female spade crimp terminal | 2 |

| 4-5" of stranded wire | 1 |

Be sure to use a multimeter to check that your wiring is correct and you have not introduced a short before you plug any of this into power.

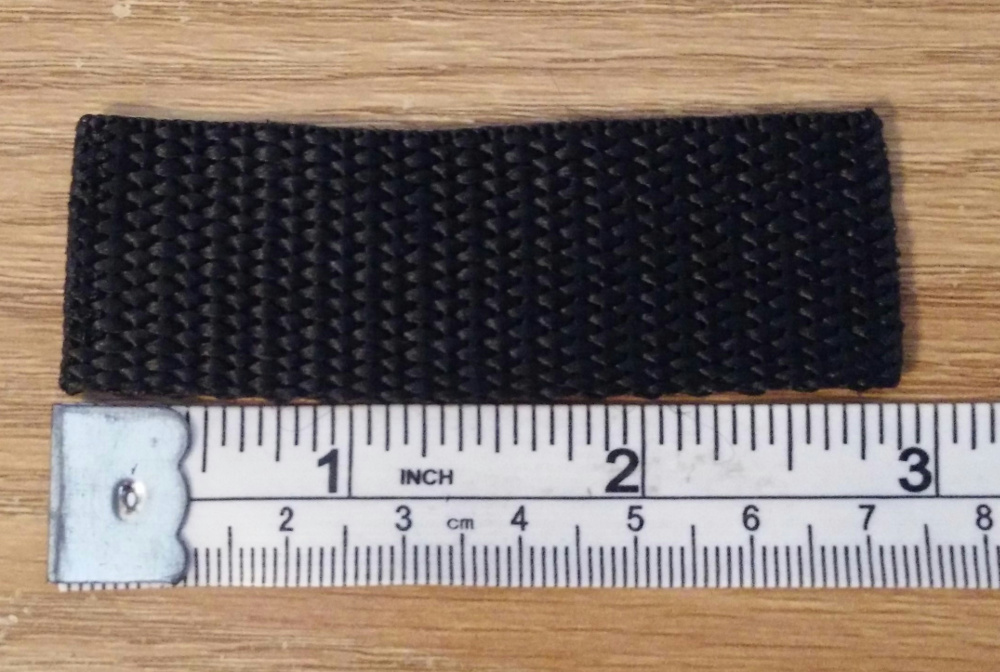

And here is the completed contraption in all its hazardous glory:

(A couple of notes regarding the photo: I started with an extension cord on which I had previously replaced the original receptacle. Further, I made my cuts "backward" from what is described here; I used the plug end of the original extension cord for the build of the shortened extension cord. I've attempted to refine the designs given here based on my trial-and-error build experience.)

Conclusion

As always, the above should be used at your own risk, but hopefully you can see that there are risks and weigh them appropriately. And once again, the simplest answer is frequently the best answer.

Filtering embedded timestamps from PNGs

PNG files created by ImageMagick include an embedded timestamp. When programmatically generating images, sometimes an embedded timestamp is undesirable. If you want to ensure that your input data always generates the exact same output data, bit-for-bit, embedded timestamps break what you're doing. Cryptographic signature schemes and content-addressable storage mechanisms do not allow for even small changes to file content without changing the signature or address. Or if you are regenerating files from some source and tracking changes in the generated files, updated timestamps just add noise.

The PNG format has an 8-byte header followed by chunks with a length, type, content, and checksum. The embedded timestamp chunk has a type of tIME. For images created directly by ImageMagick, there are also creation and modification timestamps in tEXt chunks.
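As a rough illustration of that layout, here is a minimal Python 3 sketch that walks the chunks of a PNG held in memory. It is not taken from png_chunk_filter.py; the names `iter_chunks` and `make_chunk` are made up for this example, and the tiny test image is assembled by hand rather than generated by ImageMagick.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # the fixed 8-byte header


def iter_chunks(data):
    """Yield (type, content) for each chunk in PNG bytes (hypothetical helper)."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    pos = 8
    while pos < len(data):
        # 4-byte big-endian length, then the 4-byte chunk type
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        content = data[pos + 8:pos + 8 + length]
        (crc,) = struct.unpack(">I", data[pos + 8 + length:pos + 12 + length])
        # the checksum is a CRC-32 over the type and content, not the length
        if zlib.crc32(ctype + content) != crc:
            raise ValueError("bad checksum in %r chunk" % ctype)
        yield ctype.decode("ascii"), content
        pos += 12 + length


def make_chunk(ctype, content):
    """Build one chunk: length, type, content, checksum (hypothetical helper)."""
    crc = zlib.crc32(ctype + content)
    return struct.pack(">I", len(content)) + ctype + content + struct.pack(">I", crc)


# A hand-built 1x1 grayscale image with a tIME chunk, for demonstration only.
ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
time_data = struct.pack(">HBBBBB", 2021, 9, 1, 12, 0, 0)
idat = zlib.compress(b"\x00\xff")  # filter byte 0, then one white pixel
sample = (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr) + make_chunk(b"tIME", time_data)
          + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))

for ctype, content in iter_chunks(sample):
    print(ctype, len(content))
```

Running this lists the chunk types in file order along with the size of each chunk's content.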

To see this for yourself:

convert -size 1x1 xc:white white-pixel-1.png

sleep 1

convert -size 1x1 xc:white white-pixel-2.png

cmp -l white-pixel-{1,2}.png

That will generate two 258-byte PNG files, and show the differences between the binaries. You should see output something like this:

122 3 4

123 374 142

124 52 116

125 112 337

126 123 360

187 63 64

194 156 253

195 261 26

196 32 44

197 30 226

236 63 64

243 37 332

244 354 113

245 242 234

246 244 52

I have a project where I want to avoid these types of changes in PNG files generated from processed inputs. We can remove these differences from the binaries by iterating over the chunks and dropping those with a type of either tIME or tEXt. So I wrote a bit of (Python 3) code (png_chunk_filter.py) that allows filtering specific chunk types from PNG files without making any other modifications to them.

./png_chunk_filter.py --verbose --exclude tIME --exclude tEXt \
white-pixel-1.png white-pixel-1-cleaned.png

./png_chunk_filter.py --verbose --exclude tIME --exclude tEXt \
white-pixel-2.png white-pixel-2-cleaned.png

cmp -l white-pixel-{1,2}-cleaned.png

Because of the --verbose option, you should see this output:

Excluding tEXt, tIME chunks

Found IHDR chunk

Found gAMA chunk

Found cHRM chunk

Found bKGD chunk

Found tIME chunk

Excluding tIME chunk

Found IDAT chunk

Found tEXt chunk

Excluding tEXt chunk

Found tEXt chunk

Excluding tEXt chunk

Found IEND chunk

Excluding tEXt, tIME chunks

Found IHDR chunk

Found gAMA chunk

Found cHRM chunk

Found bKGD chunk

Found tIME chunk

Excluding tIME chunk

Found IDAT chunk

Found tEXt chunk

Excluding tEXt chunk

Found tEXt chunk

Excluding tEXt chunk

Found IEND chunk

The cleaned PNG files are each 141 bytes, and both are identical.

usage: png_chunk_filter.py [-h] [--exclude EXCLUDE] [--verbose] filename target

Filter chunks from a PNG file.

positional arguments:

filename

target

optional arguments:

-h, --help show this help message and exit

--exclude EXCLUDE chunk types to remove from the PNG image.

--verbose list chunks encountered and exclusions

The code also accepts - in place of the filenames to read from stdin and/or write to stdout so that it can be used in a shell pipeline.
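The chunk-dropping idea itself fits in a few lines of Python 3. The sketch below is not the png_chunk_filter.py script, only an illustration under assumptions: `filter_png`, `chunk`, and `build` are hypothetical names, and the two test images are built by hand so that they differ only in their tIME chunk.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def filter_png(data, exclude):
    """Return PNG bytes with chunks whose type is in `exclude` removed."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    out = [PNG_SIGNATURE]
    pos = 8
    while pos < len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        ctype = data[pos + 4:pos + 8].decode("ascii")
        end = pos + 12 + length  # length + type + content + checksum
        if ctype not in exclude:
            # copy the chunk untouched so its checksum stays valid
            out.append(data[pos:end])
        pos = end
    return b"".join(out)


def chunk(ctype, content):
    """Assemble one chunk for the demo images (hypothetical helper)."""
    crc = zlib.crc32(ctype + content)
    return struct.pack(">I", len(content)) + ctype + content + struct.pack(">I", crc)


def build(second):
    """Hand-built 1x1 grayscale PNG whose tIME chunk records `second`."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    tstamp = struct.pack(">HBBBBB", 2021, 9, 1, 12, 0, second)
    return (PNG_SIGNATURE + chunk(b"IHDR", ihdr) + chunk(b"tIME", tstamp)
            + chunk(b"IDAT", zlib.compress(b"\x00\xff")) + chunk(b"IEND", b""))


first, second = build(0), build(1)
cleaned_a = filter_png(first, {"tIME", "tEXt"})
cleaned_b = filter_png(second, {"tIME", "tEXt"})
print(first == second)          # False: the timestamps differ
print(cleaned_a == cleaned_b)   # True: identical once tIME is dropped
```

For pipeline use, a function like this could be fed from `sys.stdin.buffer.read()` and its result written with `sys.stdout.buffer.write()`.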

Another use for this code is stripping every unnecessary byte from a PNG to achieve a minimum size.

./png_chunk_filter.py --verbose \
--exclude gAMA \
--exclude cHRM \
--exclude bKGD \
--exclude tIME \
--exclude tEXt \
white-pixel-1.png minimal.png

That strips our 258-byte PNG down to a still-valid 67-byte PNG file.

Filtering of PNG files solved a problem I faced; perhaps it will help you at some point as well.

Pool noodle PVC swords

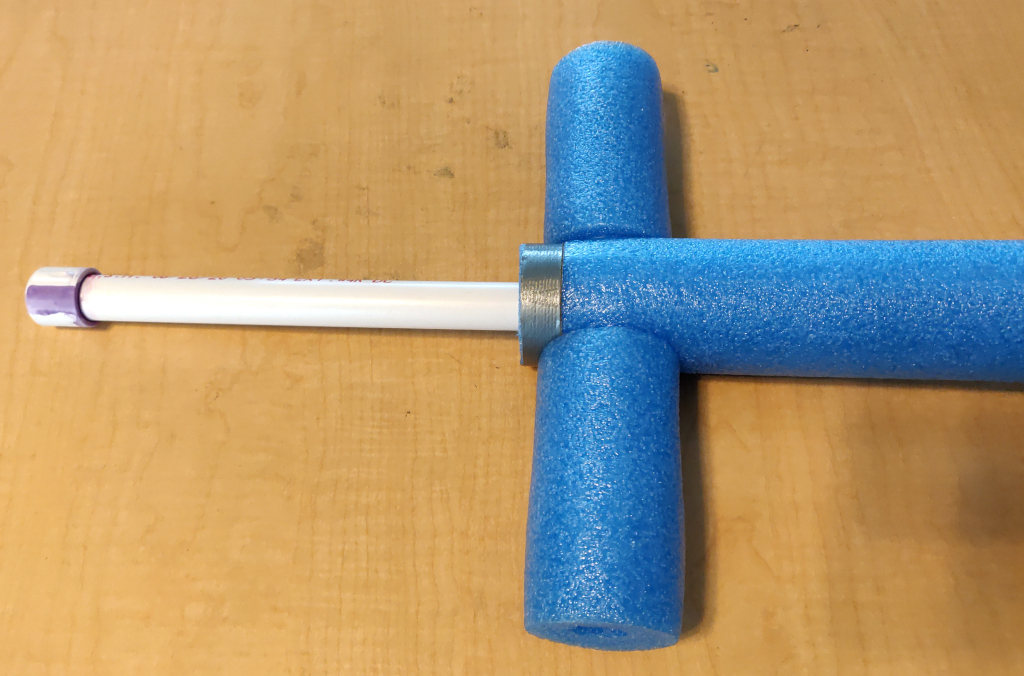

Swords are cool. Sword fights, doubly so. But sword fights are dangerous, so we don't let kids do that for fun. Unless we make the swords out of pool noodles. One approach is to wrap one end of the pool noodle in duct tape to create a "light saber" style sword; but that design is hard to swing well and devolves towards treating it like a cross between a sword and a whip.

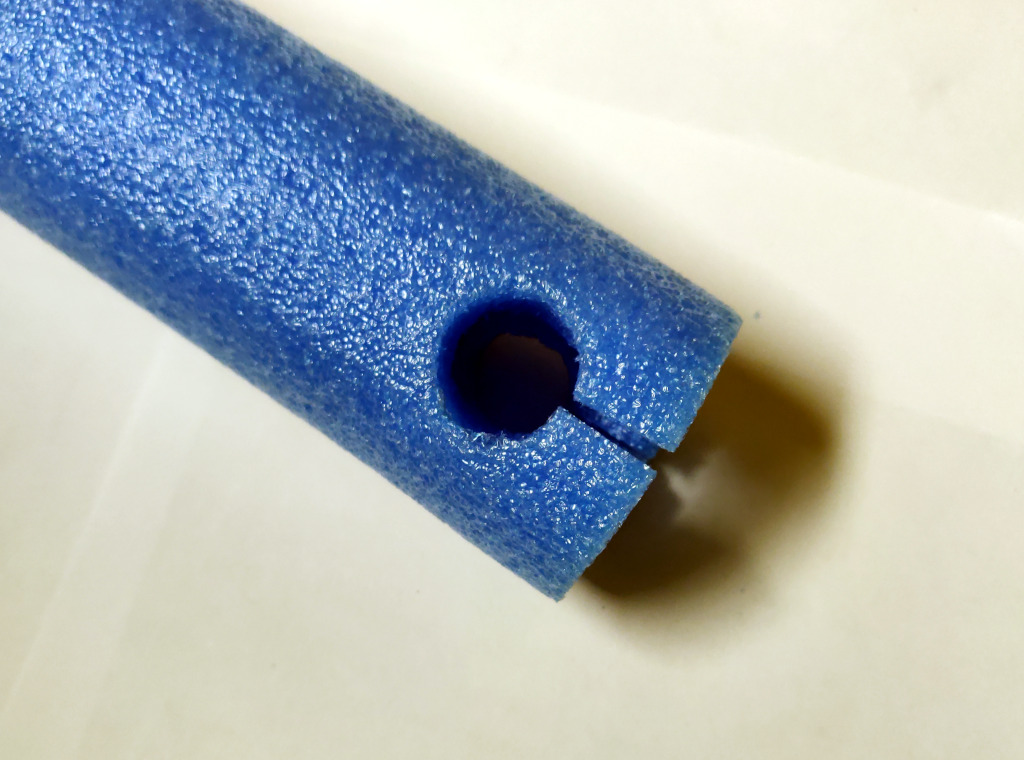

The main complaint with the pool-noodle-as-sword approach is that swinging a limp noodle does not work well. So let's reinforce the noodle with a section of PVC pipe. But if we do that, we can add a sword hilt while we're at it. We also want to make sure that the PVC pipe end won't injure a playmate from an enthusiastic thrust.

Materials

Parts to make 5 swords:

| Item | Price | Qty | Total |

|---|---|---|---|

| 10' 1/2" PVC pipe (pressure pipe, thinner wall than schedule 40) | 2.98 | x 2 | 5.96 |

| 1/2" PVC cross fitting | 1.51 | x 5 | 7.55 |

| 1/2" PVC end cap | 0.51 | x 20 | 10.20 |

| pool noodle | 0.97 | x 5 | 4.85 |

| duct tape, 45 yards | 5.28 | x 1 | 5.28 |

| 4oz PVC primer+cement pack | 7.70 | x 1 | 7.70 |

| Grand total | | | $41.54 |

That works out to $8.31 per sword. (Prices circa 2021-Q3.) This should not consume the entire roll of tape, nor all of the PVC adhesives, so if you have those on hand or have additional uses for them you can reduce your costs accordingly.
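The arithmetic behind those figures can be checked with a short Python sketch. Prices are copied from the table and held as integer cents to avoid rounding surprises; the second figure, which leaves out the tape and cement, is only an illustration of the "already on hand" case.

```python
# (item, unit price in cents, quantity), copied from the parts table
parts = [
    ("10' 1/2\" PVC pipe", 298, 2),
    ("1/2\" PVC cross fitting", 151, 5),
    ("1/2\" PVC end cap", 51, 20),
    ("pool noodle", 97, 5),
    ("duct tape", 528, 1),
    ("PVC primer+cement pack", 770, 1),
]
swords = 5

total_cents = sum(price * qty for _, price, qty in parts)
per_sword = total_cents / swords / 100

# same sum, skipping the consumables you might already own
on_hand = {"duct tape", "PVC primer+cement pack"}
reduced_cents = sum(price * qty for name, price, qty in parts if name not in on_hand)

print("total: $%.2f" % (total_cents / 100))                         # total: $41.54
print("per sword: $%.2f" % per_sword)                               # per sword: $8.31
print("per sword, consumables on hand: $%.2f" % (reduced_cents / swords / 100))  # $5.71
```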

If I were to do this over again, I would also buy a single 1/2" PVC coupling to turn into a cutting tool instead of using a short bit of PVC pipe.

Assembly

Cut one of the 10' PVC pipes into four 30" sections. Cut a 30" section off the other pipe. That gives you 5 blades.

Then cut ten 3-3/4" sections to use as the hilt cross-guards, two per sword.

Then cut five 9-1/2" sections to use as handles.
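As a quick sanity check on the cut list, this Python sketch adds up the cuts per pipe. It assumes the cross-guard and handle sections all come from the second pipe and ignores the material lost to the saw blade, so treat the leftover figure as approximate.

```python
PIPE_LENGTH = 120.0  # one 10' pipe, in inches

# cuts per pipe, in inches; saw kerf is ignored (assumption)
pipe_1 = [30.0] * 4                           # four blades
pipe_2 = [30.0] + [3.75] * 10 + [9.5] * 5     # one blade, ten cross-guards, five handles

for name, cuts in (("pipe 1", pipe_1), ("pipe 2", pipe_2)):
    used = sum(cuts)
    print("%s: %.2f in used, %.2f in left over" % (name, used, PIPE_LENGTH - used))
```

Under those assumptions the first pipe is fully used and the second has about 5" to spare.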

Cement an end cap to one end of each PVC section.

Cement two cross-guard assemblies opposite each other in each PVC cross fitting.

Cement one handle assembly in each PVC cross fitting.

Cement one blade assembly in each PVC cross fitting.

Measure the shoulder of the cross fitting. Cut a circle near one end of the pool noodle, so that the edge of the hole is that distance from the end of the noodle. There are a few options here. You can just hack a hole into it with a sharp knife, or you can get a 1/2" PVC straight coupler fitting, and sharpen one end of it, and use that to cut the circle. Or you can use the small leftover chunk of 1/2" pipe to cut the circle, though that yields a hole that is slightly under-sized.

Slit the pool noodle from the end to the hole so that it can be pushed over the cross-guard.

Slide the noodle down over the PVC blade.

Measure 40-1/2" from the base of the noodle, and cut the noodle to that length.

Tape the noodle around the base of the cross guard with a piece of duct tape, cut to about 1/3rd the typical width of duct tape.

Optionally, you can take the excess foam cut from the blade, cut it in half, and use it for a foam-ensconced hilt.

Optionally cover the entire noodle with duct tape to improve its durability. Without this, energetic sword fights can tear small bits of foam out of the blades.

This could be taken up a level with some tennis racket handle wrap on the handle to make it more grippy and less obviously made from PVC. In my hands, the 1/2" PVC pipe is a bit too small; were I to build one for myself, I would consider a larger diameter for the handle, which would imply a reducing coupling, and the junction with the cross piece might be awkward or look odd. The coupling and a second size of pipe would also increase the cost.

As for concerns over durability, five kids playing with these for a couple months has yielded no broken swords or requests for repairs. I'd rank that as pretty good durability.

Ready. Set. Fight!

The Sabaton Index

I enjoy music that tells a story. True stories are even better. For what has probably been a couple of years, I have stumbled across references to Sabaton and their songs about historical events (particularly battles, wars, and related topics). But I only recently investigated and listened to their work.

They post videos on YouTube, where they also have a Sabaton History channel that covers the history behind the songs. They also publish their discography with lyrics and some background.

Who would sing a song about the Holocaust? Sabaton did. Listen carefully to the lyrics, soak in the thunder of the music. Reflect on the history.

For me, a song is more meaningful when I know the story behind it and the words from which it is crafted. So I want to learn the history, read the lyrics, and then listen to the song. But Sabaton has created so much material, from lyrics to lyric videos to music videos to history videos, that it is hard to really wrap your head around it. It really needs some organization to navigate it.

So I made an attempt at it.

Generally, each row of the table lists the title of the song, a link to the official lyrics, links to the Sabaton History videos for that song, various videos of the song itself, and a brief comment noting the topic of the song. They publish multiple video variants for their songs, such as "lyric videos" which show the words as they sing, or "music videos" which sometimes take a more reenactment approach. Or a "story video", as with No Bullets Fly, or even a stop motion animation "block video" as with The Future Of Warfare. I've attempted to be fairly complete in terms of listing all songs for which I found information, even if I didn't find enough to fill out all the columns. I have listened to but a small fraction of the material indexed here, but I've found this structure to be useful in exploring Sabaton's work.

Find the full table at SabatonIndex.

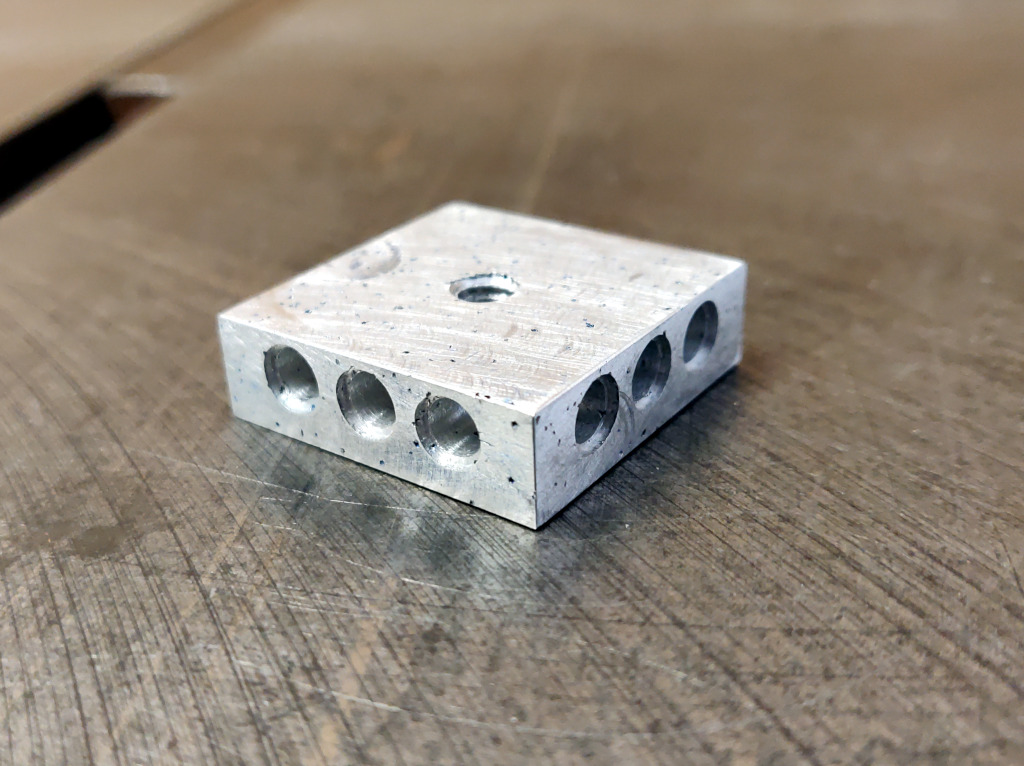

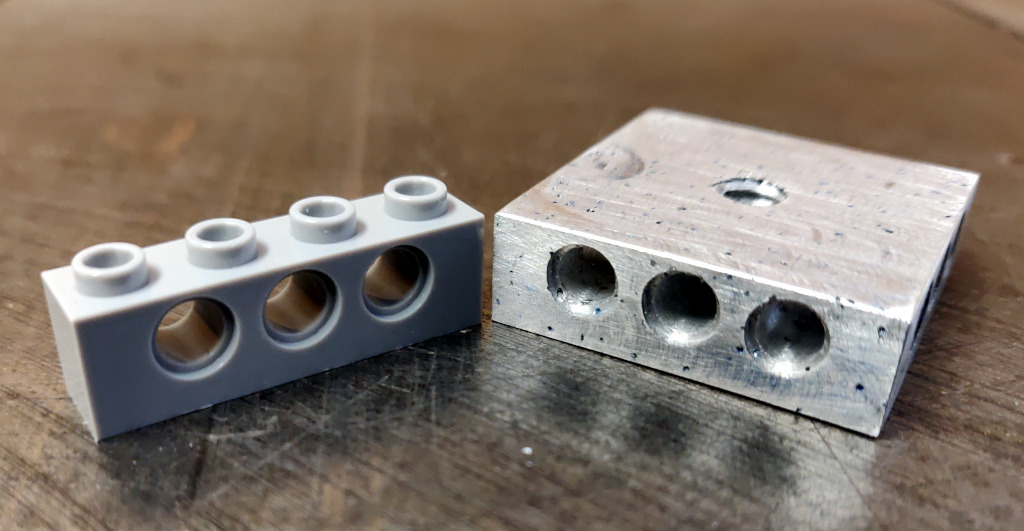

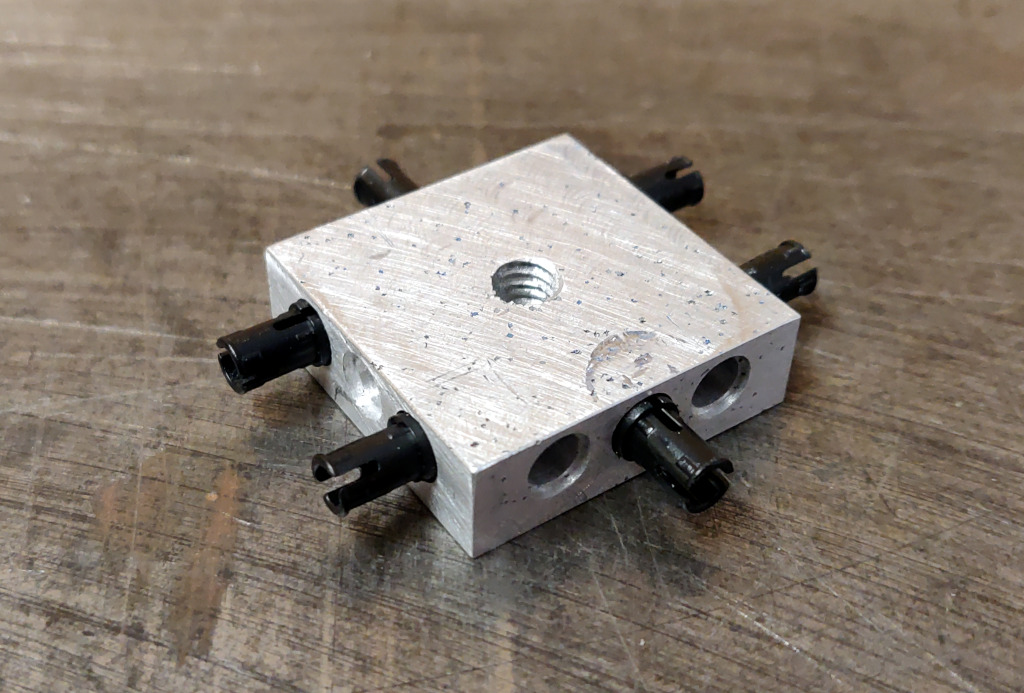

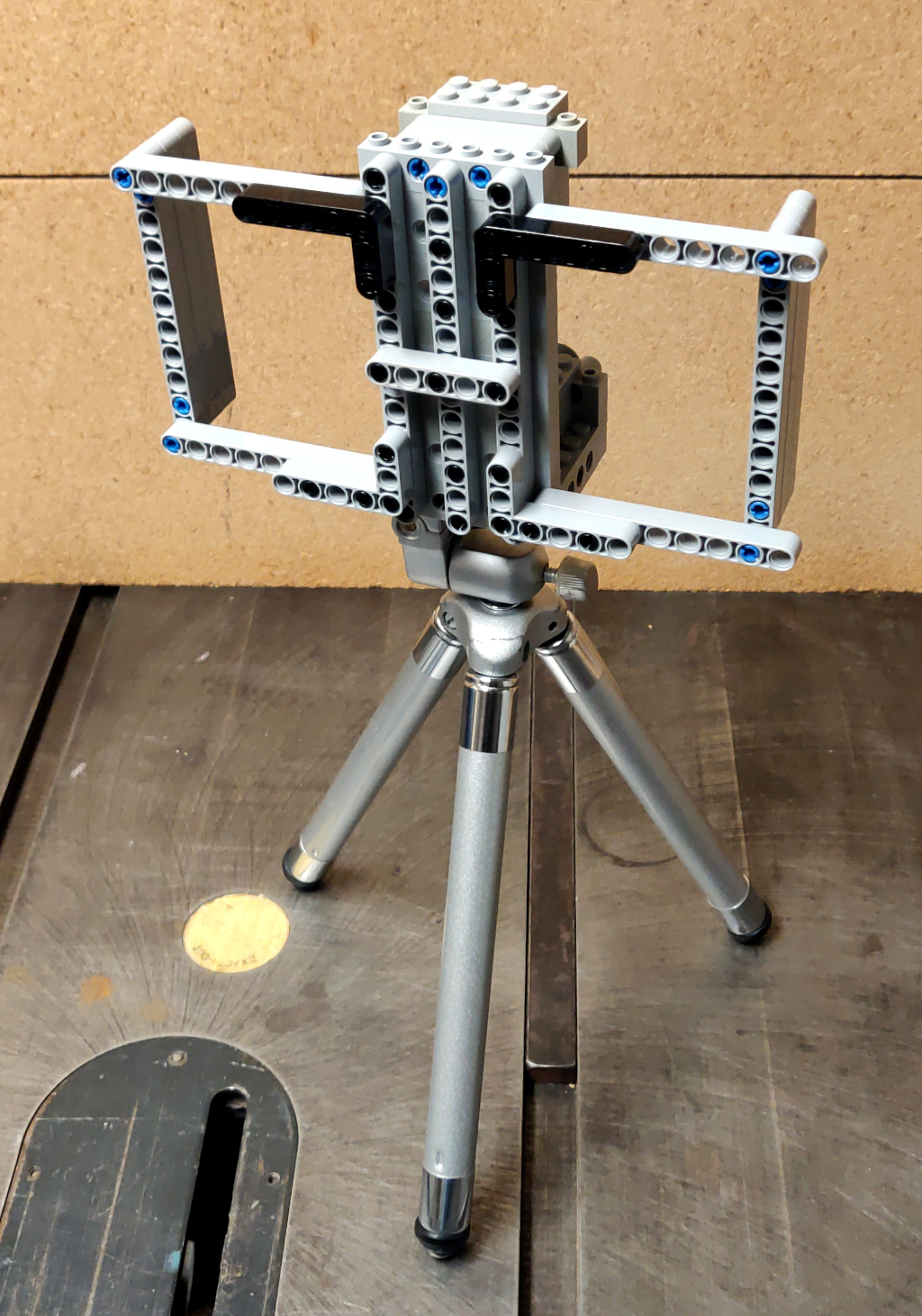

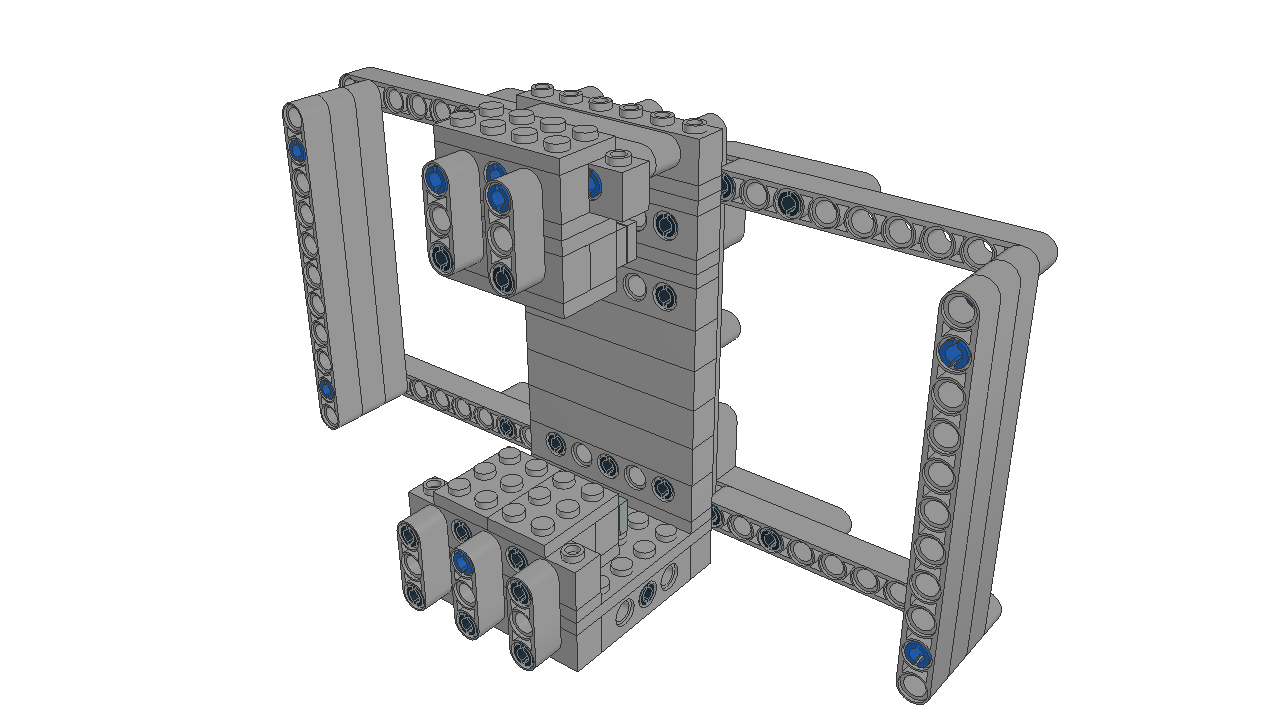

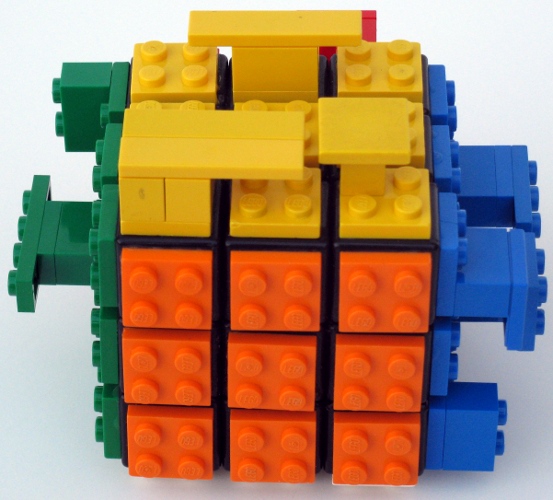

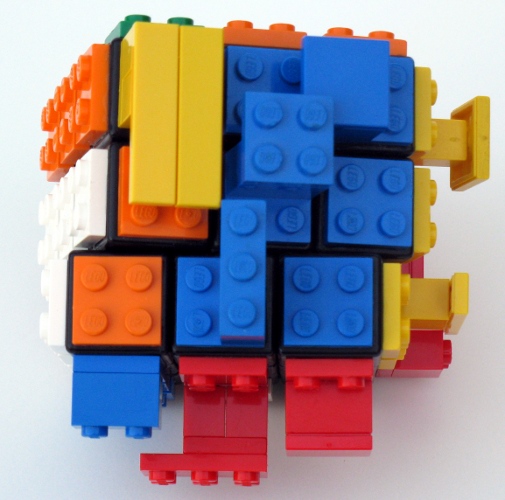

Lego and aluminum phone camera tripod mount

For a family project, we needed a way to take pictures with the stability of a tripod. The best camera we own happens to be the one in a OnePlus 6 cellphone. We have a collapsible tripod with a standard mounting plate and bolt.

Those don't exactly attach to each other.

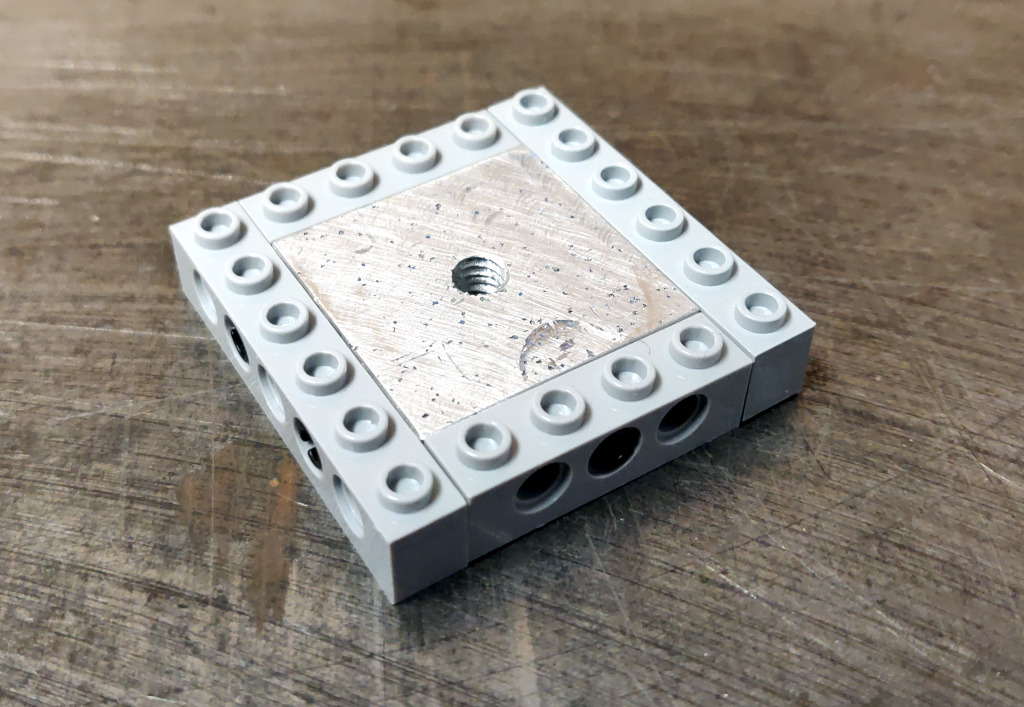

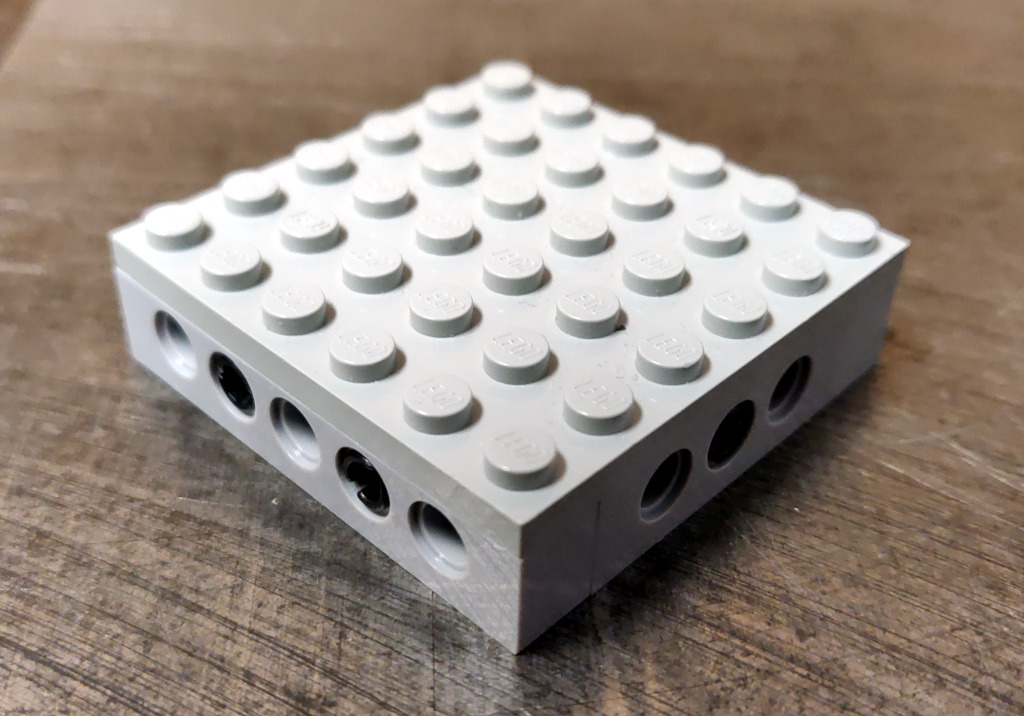

Ok, so how can we approach this? Well, the tripod has a 1/4" 20tpi captured bolt. So we need something rigid for it to screw into. I can drill and tap some aluminum to meet that interface. For holding the OnePlus 6, a frame built from Lego could work.

So all we need then is something that can bolt onto the tripod, and to which we can attach Lego pieces.

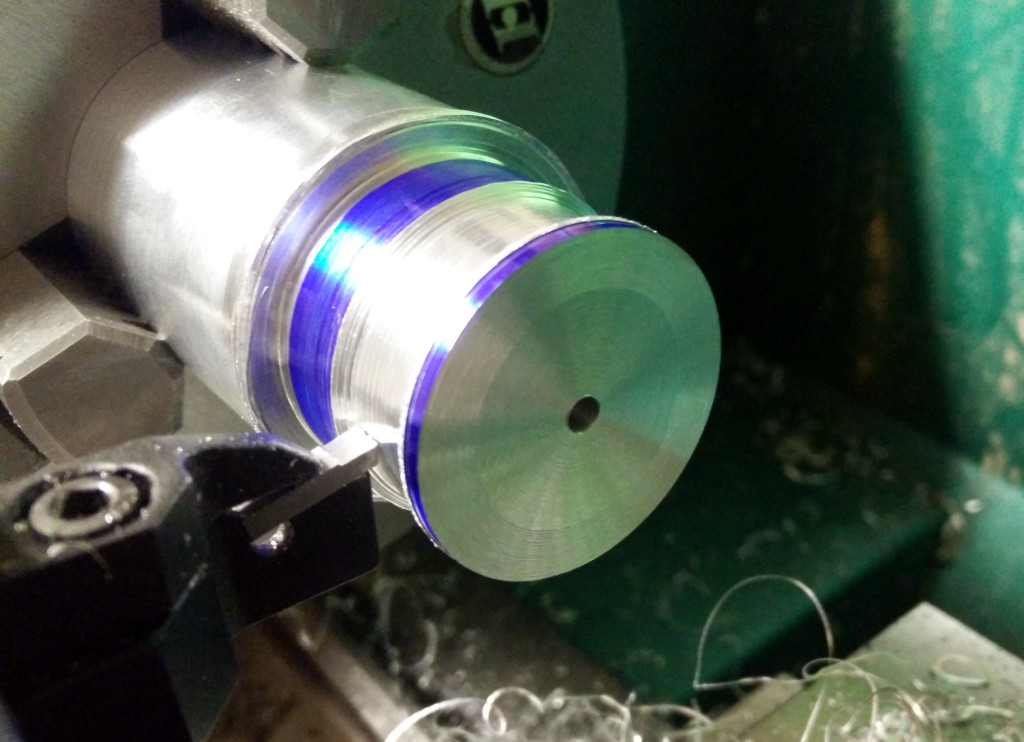

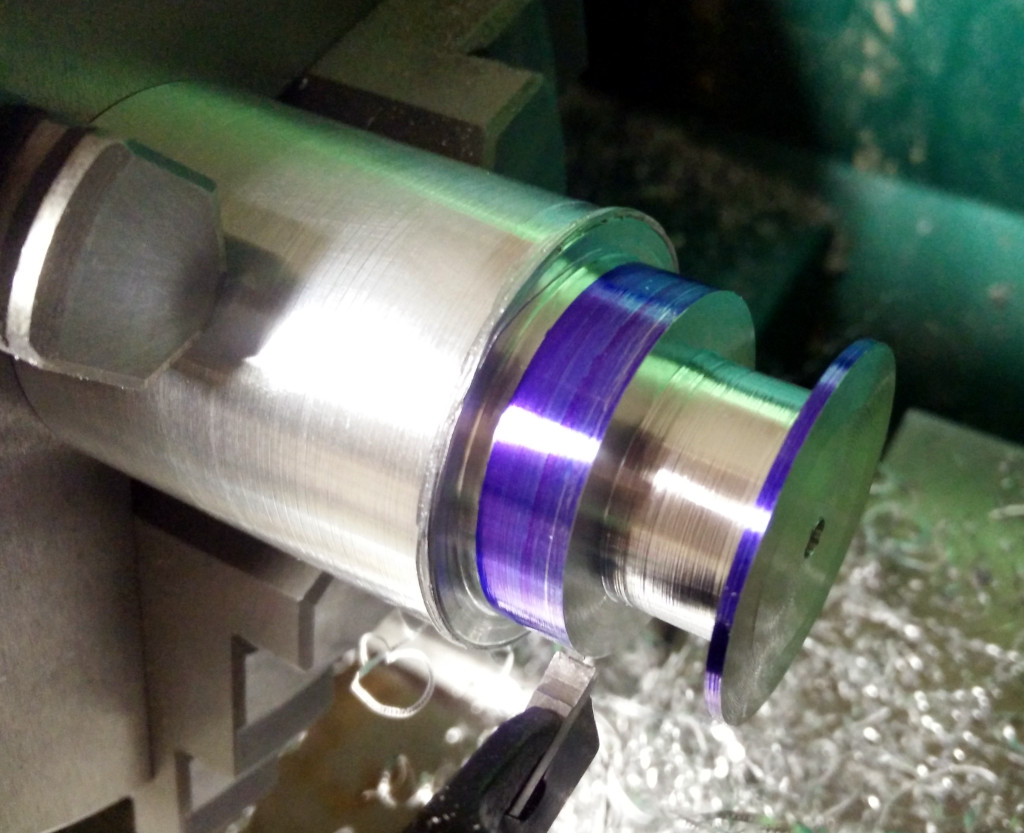

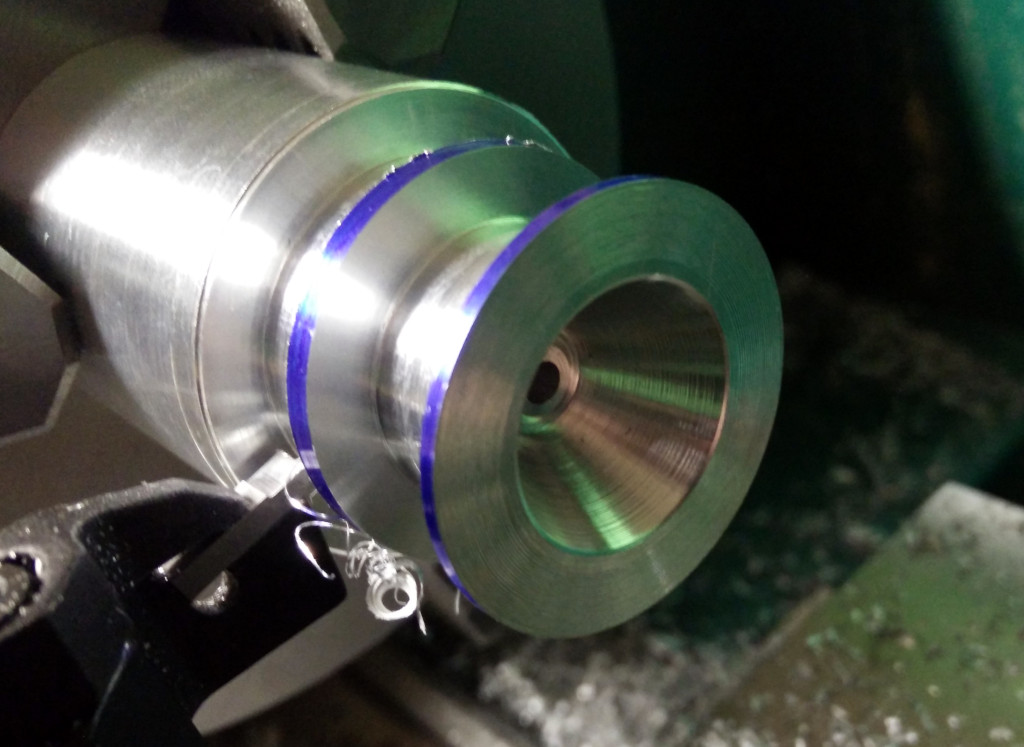

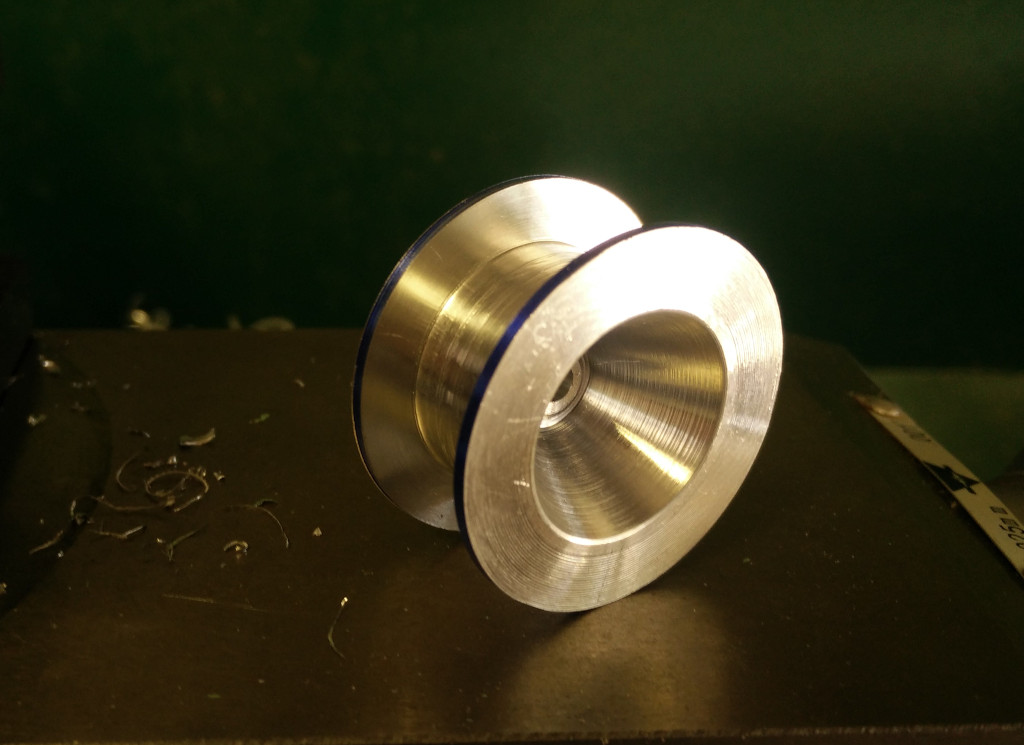

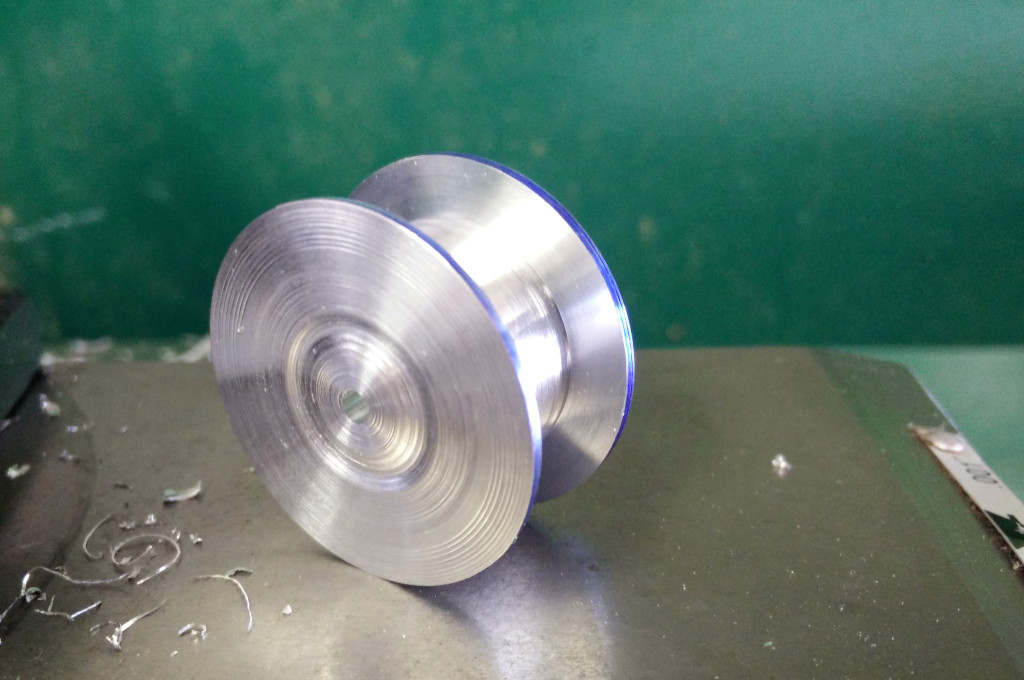

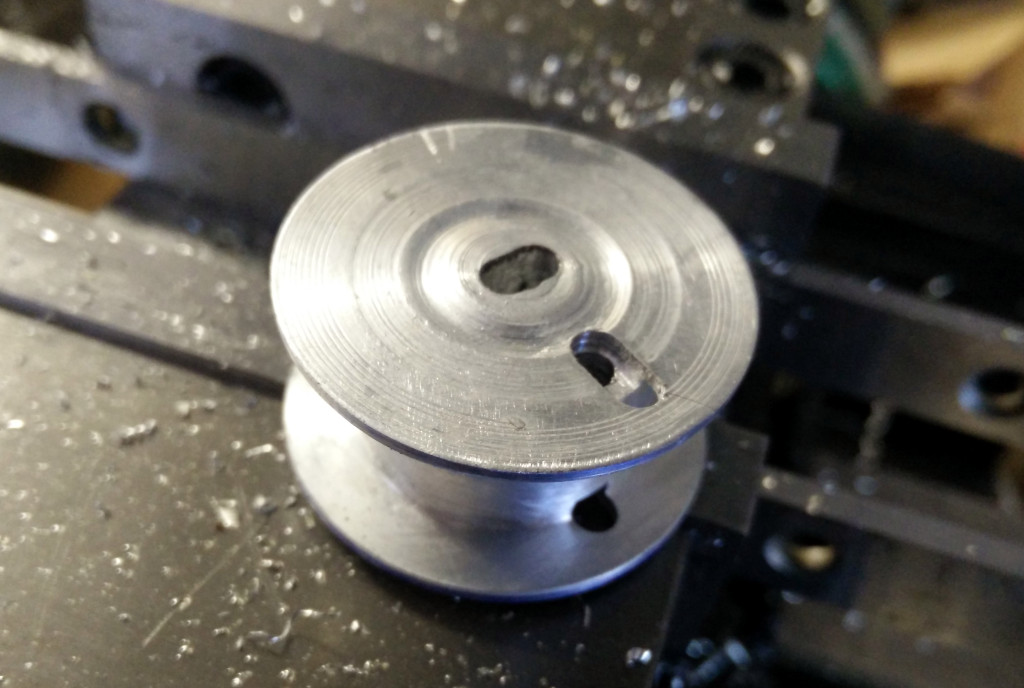

Studs look challenging to machine, and I expect they would be extremely finicky to get machined precisely enough to give the right amount of "clutch". They might also be prone to damaging bricks, which we want to avoid. An easier approach is to drill holes compatible with Lego Technic pins.