LDraw Parts Library 2026-01 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2026-01 parts library for Fedora 43 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.
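To confirm the library actually landed in /usr/share/ldraw, a quick check of the top-level layout is enough. The following is a minimal sketch, assuming the rpm unpacks the same top-level entries found in LDraw.org's complete library archive (`LDConfig.ldr`, `parts/` and `p/`). The function name `missing_ldraw_entries` is hypothetical and is not part of any of the tools mentioned above.

```python
from pathlib import Path

# Assumption: these are the top-level entries of the official LDraw library
# archive, and the rpm installs them directly under /usr/share/ldraw.
EXPECTED_ENTRIES = ("LDConfig.ldr", "parts", "p")


def missing_ldraw_entries(root="/usr/share/ldraw"):
    """Return the expected LDraw entries that are absent under root."""
    base = Path(root)
    return [name for name in EXPECTED_ENTRIES if not (base / name).exists()]


if __name__ == "__main__":
    missing = missing_ldraw_entries()
    if missing:
        print("LDraw library incomplete; missing:", ", ".join(missing))
    else:
        print("LDraw library found at /usr/share/ldraw")
```

Passing a different `root` lets the same check be used after adapting the package to a distribution that installs elsewhere.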

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.
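The file names follow the usual rpm name-version-release.arch.rpm convention, which is how to tell the installable noarch packages from the source package. Below is a small sketch that splits the names listed here into their fields. It assumes only that convention; `parse_rpm_filename` and `installable` are hypothetical helpers for illustration, not part of rpm or dnf.

```python
from typing import NamedTuple


class RpmName(NamedTuple):
    name: str
    version: str
    release: str
    arch: str


def parse_rpm_filename(filename):
    """Split 'name-version-release.arch.rpm' into its four fields."""
    if not filename.endswith(".rpm"):
        raise ValueError(f"not an rpm file name: {filename}")
    stem = filename[: -len(".rpm")]
    # The arch ("noarch" or "src") is the last dot-separated field.
    nvr, arch = stem.rsplit(".", 1)
    # Split from the right, because the name itself may contain '-'.
    name, version, release = nvr.rsplit("-", 2)
    return RpmName(name, version, release, arch)


def installable(filenames):
    """Keep the binary packages; the .src.rpm is only needed for rebuilding."""
    return [f for f in filenames if parse_rpm_filename(f).arch != "src"]


files = [
    "ldraw_parts-202601-ec1.fc43.src.rpm",
    "ldraw_parts-202601-ec1.fc43.noarch.rpm",
    "ldraw_parts-creativecommons-202601-ec1.fc43.noarch.rpm",
    "ldraw_parts-models-202601-ec1.fc43.noarch.rpm",
]
for f in installable(files):
    print(parse_rpm_filename(f))
```

Splitting from the right matters here: `ldraw_parts-creativecommons` contains a hyphen, so a left-to-right split would misread the package name.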

ldraw_parts-202601-ec1.fc43.src.rpm

ldraw_parts-202601-ec1.fc43.noarch.rpm

ldraw_parts-creativecommons-202601-ec1.fc43.noarch.rpm

ldraw_parts-models-202601-ec1.fc43.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-12 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-12 parts library for Fedora 43 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202512-ec1.fc43.src.rpm

ldraw_parts-202512-ec1.fc43.noarch.rpm

ldraw_parts-creativecommons-202512-ec1.fc43.noarch.rpm

ldraw_parts-models-202512-ec1.fc43.noarch.rpm

See also LDrawPartsLibrary.

LeoCAD 25.09 - Packaged for Linux

LeoCAD is a CAD application for building digital models with Lego-compatible parts drawn from the LDraw parts library.

I packaged the 25.09 release of LeoCAD as an rpm for Fedora 43. It requires the LDraw parts library package.

Install the binary rpm. The source rpm contains the files needed to rebuild the package for another distribution.
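Rebuilding a source rpm is normally a single `rpmbuild --rebuild` invocation, with the resulting binary rpms placed under `~/rpmbuild/RPMS/` by default. Here is a minimal sketch that wraps that call, assuming `rpmbuild` is installed and the package's build dependencies are already present. The file name `leocad-25.09-ec1.fc43.src.rpm` is a hypothetical example following the naming of the LDraw packages above, and `rebuild` is an illustrative helper, not an existing tool.

```python
import shutil
import subprocess


def rebuild_command(src_rpm):
    """Return the argv used to rebuild a source rpm with rpmbuild."""
    return ["rpmbuild", "--rebuild", src_rpm]


def rebuild(src_rpm, dry_run=True):
    """Run rpmbuild on src_rpm, or just print the command in dry-run mode.

    Also falls back to printing when rpmbuild is not on PATH.
    """
    cmd = rebuild_command(src_rpm)
    if dry_run or shutil.which("rpmbuild") is None:
        print("would run:", " ".join(cmd))
        return None
    # check=True raises CalledProcessError if the build fails.
    return subprocess.run(cmd, check=True)


# Hypothetical file name; substitute the actual LeoCAD source rpm.
rebuild("leocad-25.09-ec1.fc43.src.rpm")
```

Defaulting to a dry run keeps the sketch safe to try; pass `dry_run=False` to actually start the build.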

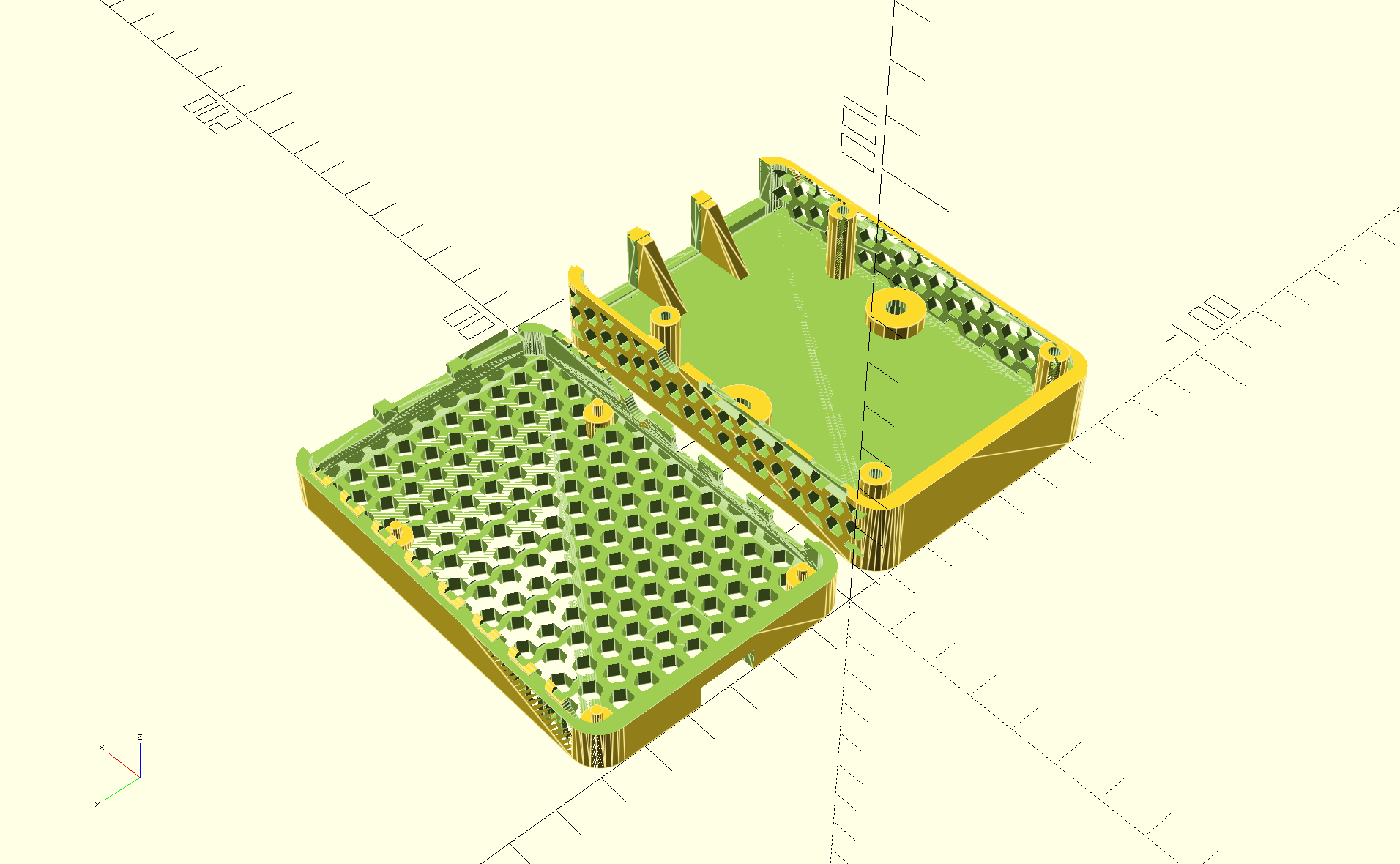

Yet Another Raspberry-Pi case in OpenSCAD

I needed a case for a Raspberry Pi 4B for a project, looked at those available in the usual places, and didn't find one that quite met my needs. I wanted a case I could bolt to a sheet of plywood, so the lid needed holes for a pair of 8-32 heat-set threaded inserts. I also wanted it to be well-ventilated to avoid overheating.

So I created one (from scratch) in OpenSCAD:

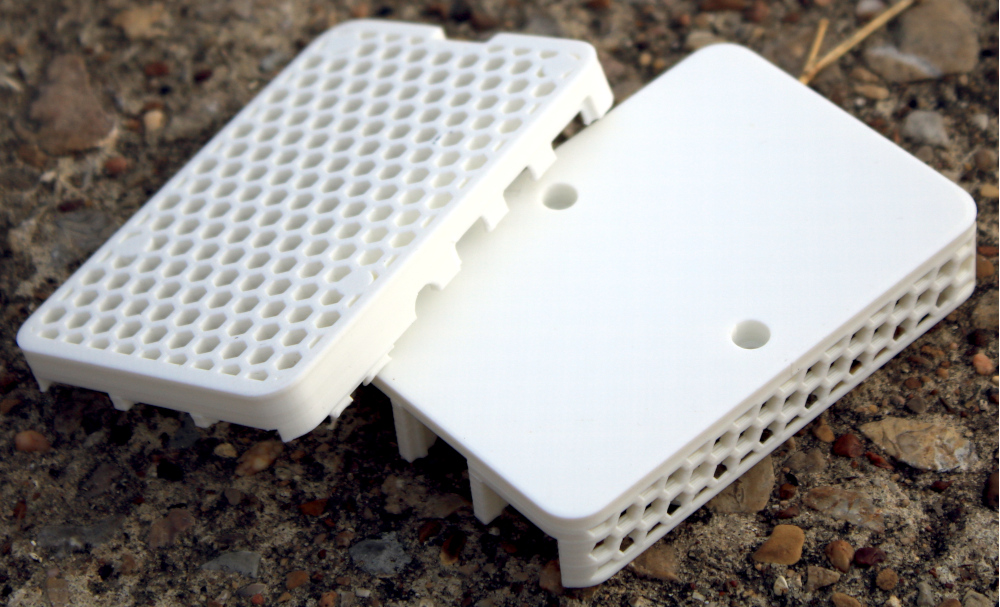

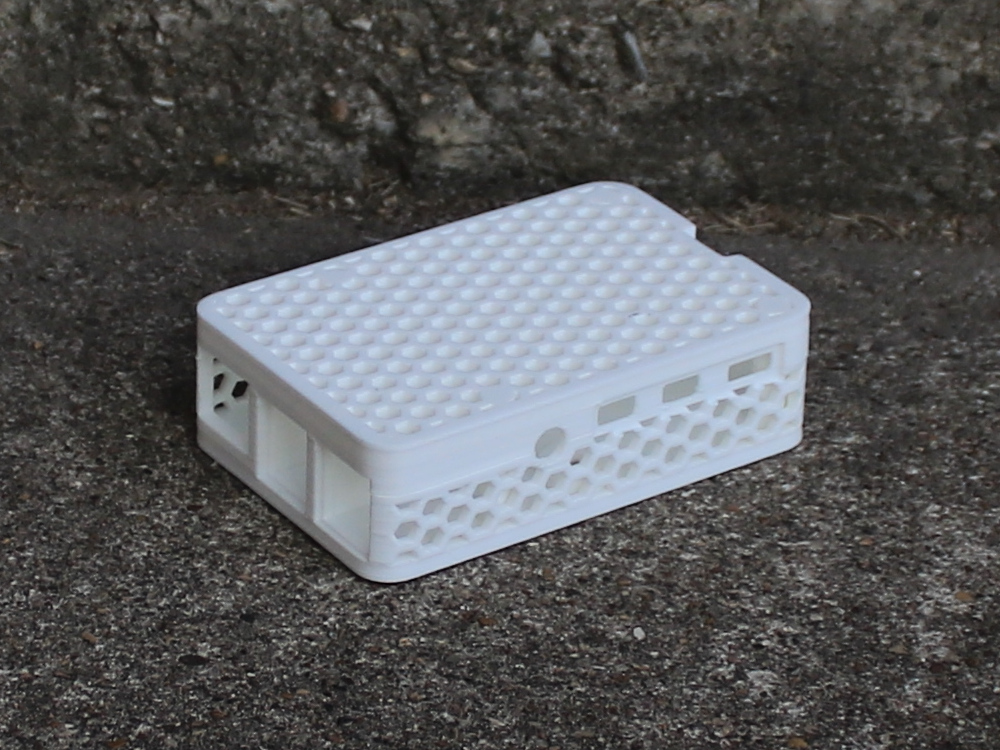

I think it turned out quite well:

And the two parts of the case snap together securely:

If you'd like to use it, the OpenSCAD and STL files are available in this archive under a CC BY-SA license. If you do make use of it, I'd love to hear from you.

(Photography by Joshua Carter.)

LDraw Parts Library 2025-09 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-09 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202509-ec1.fc42.src.rpm

ldraw_parts-202509-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202509-ec1.fc42.noarch.rpm

ldraw_parts-models-202509-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-08 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-08 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202508-ec1.fc42.src.rpm

ldraw_parts-202508-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202508-ec1.fc42.noarch.rpm

ldraw_parts-models-202508-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-07 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-07 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202507-ec1.fc42.src.rpm

ldraw_parts-202507-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202507-ec1.fc42.noarch.rpm

ldraw_parts-models-202507-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-06 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-06 parts library for Fedora 42 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202506-ec1.fc42.src.rpm

ldraw_parts-202506-ec1.fc42.noarch.rpm

ldraw_parts-creativecommons-202506-ec1.fc42.noarch.rpm

ldraw_parts-models-202506-ec1.fc42.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-05 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-05 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202505-ec1.fc41.src.rpm

ldraw_parts-202505-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202505-ec1.fc41.noarch.rpm

ldraw_parts-models-202505-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.

LDraw Parts Library 2025-04 - Packaged for Linux

LDraw.org maintains a library of Lego part models upon which a number of related tools such as LeoCAD, LDView and LPub rely.

I packaged the 2025-04 parts library for Fedora 41 to install to /usr/share/ldraw; it should be straightforward to adapt to other distributions.

The *.noarch.rpm files are the ones to install, and the .src.rpm contains everything so it can be rebuilt for another rpm-based distribution.

ldraw_parts-202504-ec1.fc41.src.rpm

ldraw_parts-202504-ec1.fc41.noarch.rpm

ldraw_parts-creativecommons-202504-ec1.fc41.noarch.rpm

ldraw_parts-models-202504-ec1.fc41.noarch.rpm

See also LDrawPartsLibrary.
